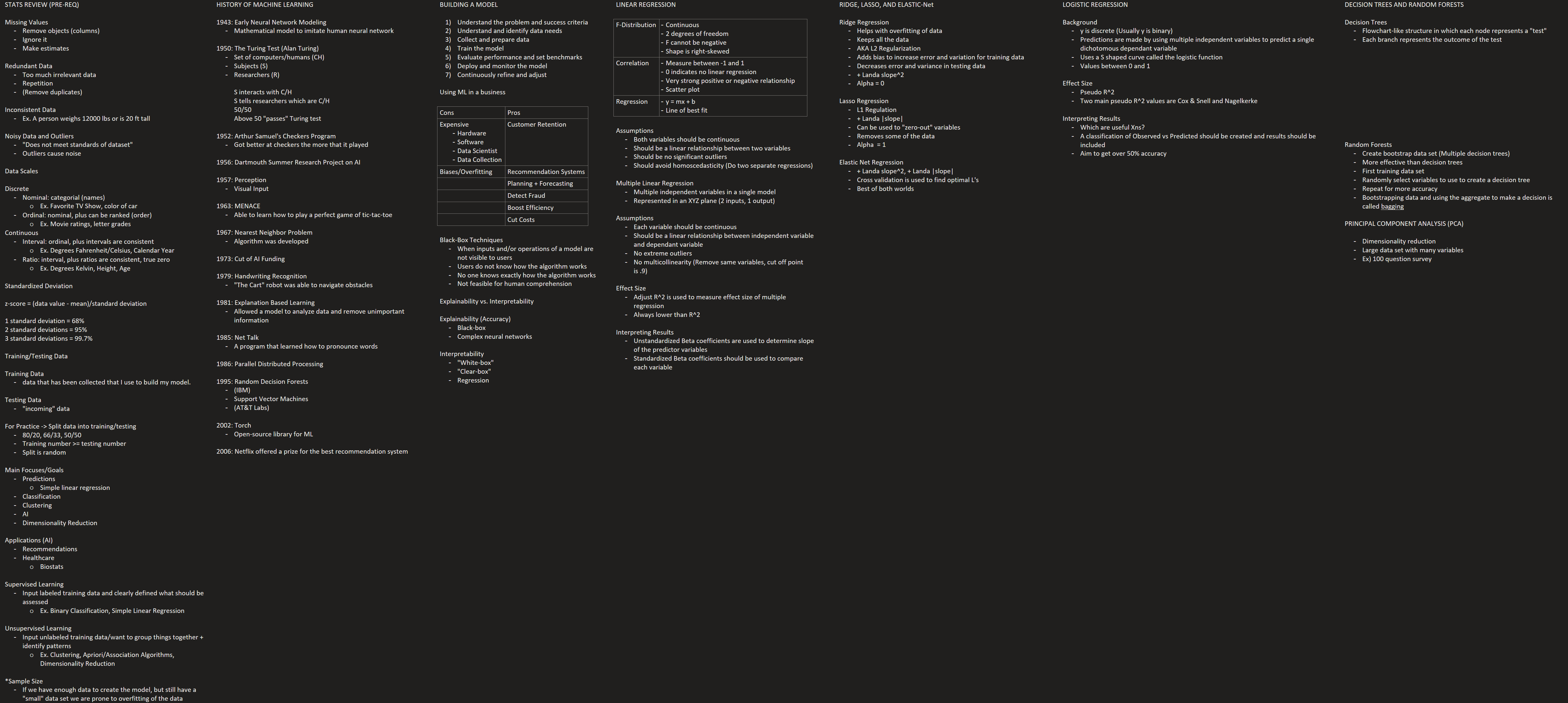

Machine Learning Notes

STATS REVIEW (PRE-REQ)

Missing Values

Remove objects (rows) or attributes (columns) that have missing values

Ignore it

Make estimates (impute the missing values, e.g., with the mean)
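A minimal sketch of the "make estimates" option, assuming missing entries are stored as `None`: each gap is filled with the mean of the observed values (mean imputation). `impute_mean` is a hypothetical helper; the median or a model-based estimate are common alternatives.

```python
from statistics import mean

def impute_mean(values):
    """Replace None entries with the mean of the non-missing values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)  # raises StatisticsError if every value is missing
    return [fill if v is None else v for v in values]

ages = [23, None, 31, 27, None]
print(impute_mean(ages))  # [23, 27, 31, 27, 27]
```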

Redundant Data

Too much irrelevant data

Repetition

(Remove duplicates)
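Removing repeated records can be sketched as below, assuming each record is a sequence of hashable fields and that only exact duplicates count as repetition. `remove_duplicates` is a hypothetical helper that keeps the first occurrence and the original order.

```python
def remove_duplicates(records):
    """Drop exact duplicate records, keeping the first occurrence and original order."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record)  # fields must be hashable so the record can go in a set
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

rows = [("Ann", 34), ("Bob", 29), ("Ann", 34)]
print(remove_duplicates(rows))  # [('Ann', 34), ('Bob', 29)]
```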

Inconsistent Data

Ex. A person weighs 12000 lbs or is 20 ft tall

Noisy Data and Outliers

"Does not meet the standards of the data set"

Outliers add noise to the data

Data Scales

Discrete

Nominal: categorical (names)

Ex. Favorite TV Show, color of car

Ordinal: nominal, plus can be ranked (order)

Ex. Movie ratings, letter grades

Continuous

Interval: ordinal, plus intervals are consistent

Ex. Degrees Fahrenheit/Celsius, Calendar Year

Ratio: interval, plus ratios are consistent and there is a true zero

Ex. Temperature in kelvins, height, age

Standard Deviation (z-Scores)

z-score = (data value - mean)/standard deviation

Within 1 standard deviation of the mean ≈ 68% of the data (normal distribution)

Within 2 standard deviations ≈ 95%

Within 3 standard deviations ≈ 99.7%
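The z-score formula and the 68/95/99.7 rule can be checked with a short sketch. It assumes the population standard deviation (`pstdev`); for a sample you would typically use `stdev` instead. The percentages come from the normal distribution, where the share within k standard deviations of the mean is erf(k/√2).

```python
import math
from statistics import mean, pstdev

def z_scores(values):
    """Standardize each value: (value - mean) / standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    return [(v - mu) / sigma for v in values]

data = [2, 4, 4, 4, 5, 5, 7, 9]
print(z_scores(data))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]

# Share of a normal distribution within k standard deviations of the mean.
for k in (1, 2, 3):
    print(k, round(math.erf(k / math.sqrt(2)), 4))  # 1 0.6827 / 2 0.9545 / 3 0.9973
```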

Training/Testing Data

Training Data

Data that has already been collected and is used to build (train) the model

Testing Data

"Incoming" (new) data the model has not seen, used to evaluate it

For practice: split the collected data into training/testing sets

Common splits: 80/20, 67/33, 50/50

Training set size ≥ testing set size

The split is random
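A random training/testing split can be sketched as follows. `random_split` is a hypothetical helper (not scikit-learn's `train_test_split`); it shuffles a copy of the rows with a seeded generator so the split is random but reproducible, then cuts at the chosen training fraction.

```python
import random

def random_split(rows, train_fraction=0.8, seed=None):
    """Randomly shuffle rows, then split them into training and testing sets."""
    shuffled = list(rows)  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

train, test = random_split(range(10), train_fraction=0.8, seed=42)
print(len(train), len(test))  # 8 2
```

Passing `train_fraction=0.67` or `0.5` gives the other common splits.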

Main Focuses/Goals

Predictions

Simple linear regression

Classification

Clustering

AI

Dimensionality Reduction

Applications (AI)

Recommendations

Healthcare

Biostats

Supervised Learning

Uses labeled training data, with a clearly defined target (what should be predicted)

Ex. Binary Classification, Simple Linear Regression

Unsupervised Learning

Uses unlabeled training data; the goal is to group similar items together and identify patterns

Ex. Clustering, Apriori/Association Algorithms, Dimensionality Reduction

*Sample Size

Even with enough data to fit the model, a "small" data set makes the model prone to overfitting

HISTORY OF MACHINE LEARNING

1943: Early Neural Network Modeling

Mathematical model imitating a human neural network

1950: The Turing Test (Alan Turing)

Set of computers/humans (C/H)

Subjects (S)

Researchers (R)

S interacts with C/H

S tells researchers which are C/H

Chance level is 50/50

If the computer is judged to be human more than 50% of the time, it "passes" the Turing test

1952: Arthur Samuel's Checkers Program

Got better at checkers the more it played

1956: Dartmouth Summer Research Project on AI

1957: Perceptron

Designed for visual input (image recognition)

1963: MENACE

Able to learn how to play a perfect game of tic-tac-toe

1967: Nearest Neighbor Problem

The nearest neighbor algorithm was developed

1973: Cuts to AI Funding

1979: Handwriting Recognition

"The Cart" robot was able to navigate obstacles

1981: Explanation-Based Learning

Allowed a program to analyze training data and discard unimportant information

1985: NETtalk

A program that learned how to pronounce words

1986: Parallel Distributed Processing

1995: Random Decision Forests

(AT&T Bell Labs)

Support Vector Machines

(AT&T Labs)

2002: Torch

Open-source library for ML

2006: Netflix offered a prize for the best recommendation system

BUILDING A MODEL

Understand the problem and success criteria

Understand and identify data needs

Collect and prepare data

Train the model

Evaluate performance and set benchmarks

Deploy and monitor the model

Continuously refine and adjust

Using ML in a business

| Cons | Pros |
| --- | --- |
| Expensive | Customer Retention |
| Biases/Overfitting | Recommendation Systems |
| | Planning + Forecasting |
| | Detect Fraud |
| | Boost Efficiency |
| | Cut Costs |

Black-Box Techniques

When inputs and/or operations of a model are not visible to users

Users do not know how the algorithm works

No one knows exactly how the algorithm works

Not feasible for human comprehension

Explainability vs. Interpretability

Explainability (Accuracy)

Black-box

Complex neural networks

Interpretability

"White-box"

"Clear-box"

Regression

LINEAR REGRESSION

F-Distribution

Correlation

Regression

Assumptions

Both variables should be continuous

Should be a linear relationship between the two variables

Should be no significant outliers

Should have homoscedasticity, i.e., avoid heteroscedasticity (unequal spread of the residuals); if it is present, do two separate regressions
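A minimal sketch of simple linear regression by ordinary least squares, together with Pearson's correlation r. It assumes plain lists of numbers; `simple_linear_regression` is a hypothetical helper. For a single predictor, r² equals the regression's R².

```python
from statistics import mean

def simple_linear_regression(xs, ys):
    """Least-squares fit of y = intercept + slope * x; also returns Pearson's r."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    r = sxy / (sxx * syy) ** 0.5  # correlation; r**2 equals R^2 for one predictor
    return intercept, slope, r

intercept, slope, r = simple_linear_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(intercept, 2), round(slope, 2), round(r ** 2, 2))  # 2.2 0.6 0.6
```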

Multiple Linear Regression

Multiple independent variables in a single model

With 2 inputs and 1 output, the model is a plane in XYZ space

Assumptions

Each variable should be continuous

Should be a linear relationship between each independent variable and the dependent variable

No extreme outliers

No multicollinearity (if two predictors are highly correlated, remove one of them; a common cutoff is r = 0.9)
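The multicollinearity check can be sketched as a scan of pairwise correlations between predictors, flagging any pair whose absolute correlation exceeds the 0.9 cutoff; one variable from each flagged pair would then be dropped. The predictor names and values below are made up for illustration, and `collinear_pairs` is a hypothetical helper.

```python
from itertools import combinations
from statistics import mean

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def collinear_pairs(predictors, cutoff=0.9):
    """Return predictor pairs whose absolute correlation exceeds the cutoff."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(predictors.items(), 2):
        r = pearson_r(a, b)
        if abs(r) > cutoff:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged

# Hypothetical data: the same height recorded in two units is nearly perfectly correlated.
predictors = {
    "height_in": [60, 64, 68, 72, 76],
    "height_cm": [152.4, 162.6, 172.7, 182.9, 193.0],
    "shoe_size": [9, 7, 10, 8, 9],
}
print(collinear_pairs(predictors))
```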

Effect Size

Adjusted R^2 is used to measure the effect size of a multiple regression

Never higher than R^2 (it penalizes adding predictors)
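Adjusted R² uses the standard formula 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of observations and p the number of predictors (not counting the intercept). The sketch below assumes R² has already been computed; `adjusted_r2` is a hypothetical helper.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 for n observations and p predictors (excluding the intercept)."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

print(round(adjusted_r2(0.80, n=21, p=2), 4))  # 0.7778
```

Because (n − 1)/(n − p − 1) ≥ 1, the adjusted value can only match or fall below R², and it falls further as more predictors are added.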

Interpreting Results

Unstandardized beta (B) coefficients give the slope of each predictor in its original units

Standardized beta coefficients should be used to compare the predictors with each other
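A standardized beta can be obtained from an unstandardized slope b by rescaling it with the standard deviations: β = b · s_x / s_y. The sketch below assumes the slope is already known; for this toy data the least-squares slope is 0.6. With a single predictor the standardized beta equals Pearson's r.

```python
from statistics import stdev

def standardized_beta(b, xs, ys):
    """Convert an unstandardized slope b into a standardized beta: b * (s_x / s_y)."""
    return b * stdev(xs) / stdev(ys)

xs, ys = [1, 2, 3, 4, 5], [2, 4, 5, 4, 5]
print(round(standardized_beta(0.6, xs, ys), 4))  # 0.7746
```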

RIDGE, LASSO, AND ELASTIC NET

Ridge Regression

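Helps reduce overfitting

Keeps all the variables (shrinks coefficients toward zero, but not exactly to zero)

AKA L2 Regularization

Adds bias, which slightly increases error on the training data

In exchange, decreases variance and error on the testing data

Penalty added to the sum of squared residuals: + λ × slope^2

Alpha = 0 (elastic-net mixing parameter, e.g., in R's glmnet)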

Lasso Regression

AKA L1 Regularization

Penalty: + λ × |slope|

Can be used to "zero-out" variables

Removes some of the variables (their coefficients shrink exactly to zero)

Alpha = 1

Elastic Net Regression

Penalty: + λ1 × |slope| + λ2 × slope^2

Cross-validation is used to find the optimal λ values

Best of both worlds
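To see how the three penalties behave, here is a sketch for the simplest case: one predictor, loss = sum of squared residuals + λ1·|slope| + λ2·slope², with the intercept left unpenalized. In this case the minimizing slope has a closed form (soft-thresholding for the L1 term). `penalized_slope` is a hypothetical helper, and libraries such as glmnet or scikit-learn scale the loss and penalties differently, so their numbers will not match these exactly.

```python
from statistics import mean

def penalized_slope(xs, ys, l1=0.0, l2=0.0):
    """Intercept and slope minimizing SSR + l1*|slope| + l2*slope**2 (one predictor).

    l1 = l2 = 0 is ordinary least squares, l1 = 0 is ridge, l2 = 0 is lasso,
    and both > 0 is elastic net. The intercept is not penalized.
    """
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    # Soft-thresholding: the L1 term can push the slope exactly to zero.
    shrunk = max(abs(sxy) - l1 / 2, 0.0)
    slope = (shrunk if sxy >= 0 else -shrunk) / (sxx + l2)
    intercept = y_bar - slope * x_bar
    return intercept, slope

xs, ys = [1, 2, 3, 4, 5], [2, 4, 5, 4, 5]
for name, l1, l2 in [("OLS", 0, 0), ("ridge", 0, 5), ("lasso", 4, 0),
                     ("lasso (large penalty)", 12, 0), ("elastic net", 4, 5)]:
    print(name, round(penalized_slope(xs, ys, l1, l2)[1], 4))
# OLS 0.6, ridge 0.4, lasso 0.4, lasso (large penalty) 0.0, elastic net 0.2667
```

Ridge only shrinks the slope, while a large enough lasso penalty sets it to exactly zero, which is the "zero-out" behavior described above.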

LOGISTIC REGRESSION

Background

y is discrete (Usually y is binary)

Predictions are made by using one or more independent variables to predict a single dichotomous dependent variable

Uses an S-shaped curve called the logistic (sigmoid) function

Outputs values between 0 and 1 (interpreted as probabilities)
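A minimal sketch of how a fitted logistic regression turns predictors into a probability: a linear combination is passed through the logistic function 1 / (1 + e^(−z)). The intercept and coefficients below are hypothetical, not fitted from real data; the two-branch form avoids overflow for large negative z.

```python
import math

def logistic(z):
    """S-shaped logistic (sigmoid) function: maps any real number into (0, 1)."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    ez = math.exp(z)  # avoids overflow in math.exp(-z) for large negative z
    return ez / (1 + ez)

def predict_probability(intercept, coefs, xs):
    """P(y = 1) for one observation, given an intercept and coefficients."""
    z = intercept + sum(b * x for b, x in zip(coefs, xs))
    return logistic(z)

print(logistic(0))  # 0.5
print(round(predict_probability(-1.0, [0.8, 0.5], [2.0, 1.0]), 4))  # 0.7503
```

A common rule is to predict y = 1 when this probability is at least 0.5.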

Effect Size

Pseudo R^2

Two main pseudo R^2 values are Cox & Snell and Nagelkerke
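Both pseudo R² values can be computed from the log-likelihoods of the null (intercept-only) model and the fitted model. Cox & Snell is 1 − (L_null / L_model)^(2/n); Nagelkerke divides it by its maximum possible value, 1 − L_null^(2/n), so it can reach 1. The log-likelihoods below are hypothetical numbers chosen only to exercise the formulas.

```python
import math

def cox_snell_r2(ll_null, ll_model, n):
    """Cox & Snell pseudo R^2 from the log-likelihoods of the null and fitted models."""
    return 1 - math.exp(2 * (ll_null - ll_model) / n)

def nagelkerke_r2(ll_null, ll_model, n):
    """Nagelkerke pseudo R^2: Cox & Snell rescaled so its maximum possible value is 1."""
    max_cs = 1 - math.exp(2 * ll_null / n)
    return cox_snell_r2(ll_null, ll_model, n) / max_cs

# Hypothetical log-likelihoods for n = 100 observations.
ll_null, ll_model, n = -69.31, -50.0, 100
print(round(cox_snell_r2(ll_null, ll_model, n), 3), round(nagelkerke_r2(ll_null, ll_model, n), 3))
```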

Interpreting Results

Which predictors (Xs) are useful?

A classification table of observed vs. predicted outcomes should be created and included in the results

Aim for more than 50% accuracy (better than chance on a balanced binary outcome)
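An observed-vs-predicted classification table and its overall accuracy can be sketched with a `Counter` of (observed, predicted) pairs. The labels below are made up. The majority-class rate is also shown, since on unbalanced data that baseline, not 50%, is the number to beat.

```python
from collections import Counter

def classification_table(observed, predicted):
    """Count (observed, predicted) pairs and compute overall accuracy."""
    counts = Counter(zip(observed, predicted))
    accuracy = sum(n for (o, p), n in counts.items() if o == p) / len(observed)
    return counts, accuracy

observed  = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
counts, accuracy = classification_table(observed, predicted)
for o in (0, 1):
    print("observed", o, "-> predicted 0/1:", [counts[(o, p)] for p in (0, 1)])
print("accuracy:", accuracy)  # accuracy: 0.75

# Accuracy of always predicting the most common observed class.
baseline = max(Counter(observed).values()) / len(observed)
print("baseline:", baseline)  # baseline: 0.5
```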

DECISION TREES AND RANDOM FORESTS

Decision Trees

Flowchart-like structure in which each internal node represents a "test" on a variable

Each branch represents the outcome of the test
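The node/branch structure can be sketched with a small hand-written tree: each internal node holds a test, each branch is keyed by the test's outcome, and each leaf is a class label. The variables ("outlook", "humidity") and thresholds are hypothetical, and learning the tests from data (e.g., choosing splits by impurity) is not shown.

```python
# Each internal node is a dict holding a "test"; each leaf is a plain label.
tree = {
    "test": lambda row: row["outlook"] == "sunny",
    True: {
        "test": lambda row: row["humidity"] > 70,
        True: "don't play",
        False: "play",
    },
    False: "play",
}

def classify(node, row):
    """Follow the branch matching each test's outcome until a leaf is reached."""
    while isinstance(node, dict):
        node = node[node["test"](row)]
    return node

print(classify(tree, {"outlook": "sunny", "humidity": 85}))  # don't play
print(classify(tree, {"outlook": "rainy", "humidity": 90}))  # play
```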

Random Forests

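Create bootstrapped data sets (random samples drawn with replacement), one per decision tree

Usually more accurate than a single decision tree

First, build a bootstrapped training data set

Build a decision tree from it, randomly selecting which variables to consider

Repeat with new bootstrapped data sets to build many trees, which improves accuracy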

Bootstrapping the data and aggregating the trees' results to make a decision is called bagging
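The bagging ingredients can be sketched without a full tree learner: a bootstrap sample draws as many rows as the original with replacement, each tree considers a random subset of the variables, and the trees' predictions are combined by majority vote. The feature names are hypothetical and the tree-fitting step itself is omitted. Breiman-style random forests usually draw a fresh variable subset at every split; one subset per tree is drawn here only to keep the illustration short.

```python
import random
from collections import Counter

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement (some repeat, some are left out)."""
    return [rng.choice(rows) for _ in rows]

def random_feature_subset(features, k, rng):
    """Pick k of the variables at random for a tree to consider."""
    return rng.sample(features, k)

def bagged_vote(predictions):
    """Aggregate one prediction per tree by majority vote."""
    return Counter(predictions).most_common(1)[0][0]

rng = random.Random(0)
rows = list(range(10))
features = ["age", "income", "region", "tenure"]
for tree_id in range(3):
    print(tree_id, sorted(bootstrap_sample(rows, rng)), random_feature_subset(features, 2, rng))
print(bagged_vote(["yes", "no", "yes"]))  # yes
```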

PRINCIPAL COMPONENT ANALYSIS (PCA)

Dimensionality reduction

Large data set with many variables

Ex. 100-question survey