Notes SAA-C03

- The shared responsibility model

- Customer is responsible for security IN the cloud

- AWS is responsible for security OF the cloud

- 6 Pillars of the Well-Architected Framework

- Operational Excellence

- Running and monitoring systems to deliver business value, and continually improving processes and procedures

- Performance Efficiency

- Using IT and computing resources efficiently

- Security

- Protecting info and systems

- Cost Optimization

- Avoiding unnecessary costs

- Reliability

- Ensuring a workload performs its intended function correctly and consistently

- Sustainability

- Minimizing the environmental impacts of running cloud workloads

- IAM

- To secure the root account:

- Enable MFA on root acct

- Create an admin group for admins and assign appropriate privileges

- Create user accounts for admins - don't share

- Add appropriate users to admin groups

- IAM Policy documents

- JSON - key-value pairs

- IAM does not work at regional level, it works at global level

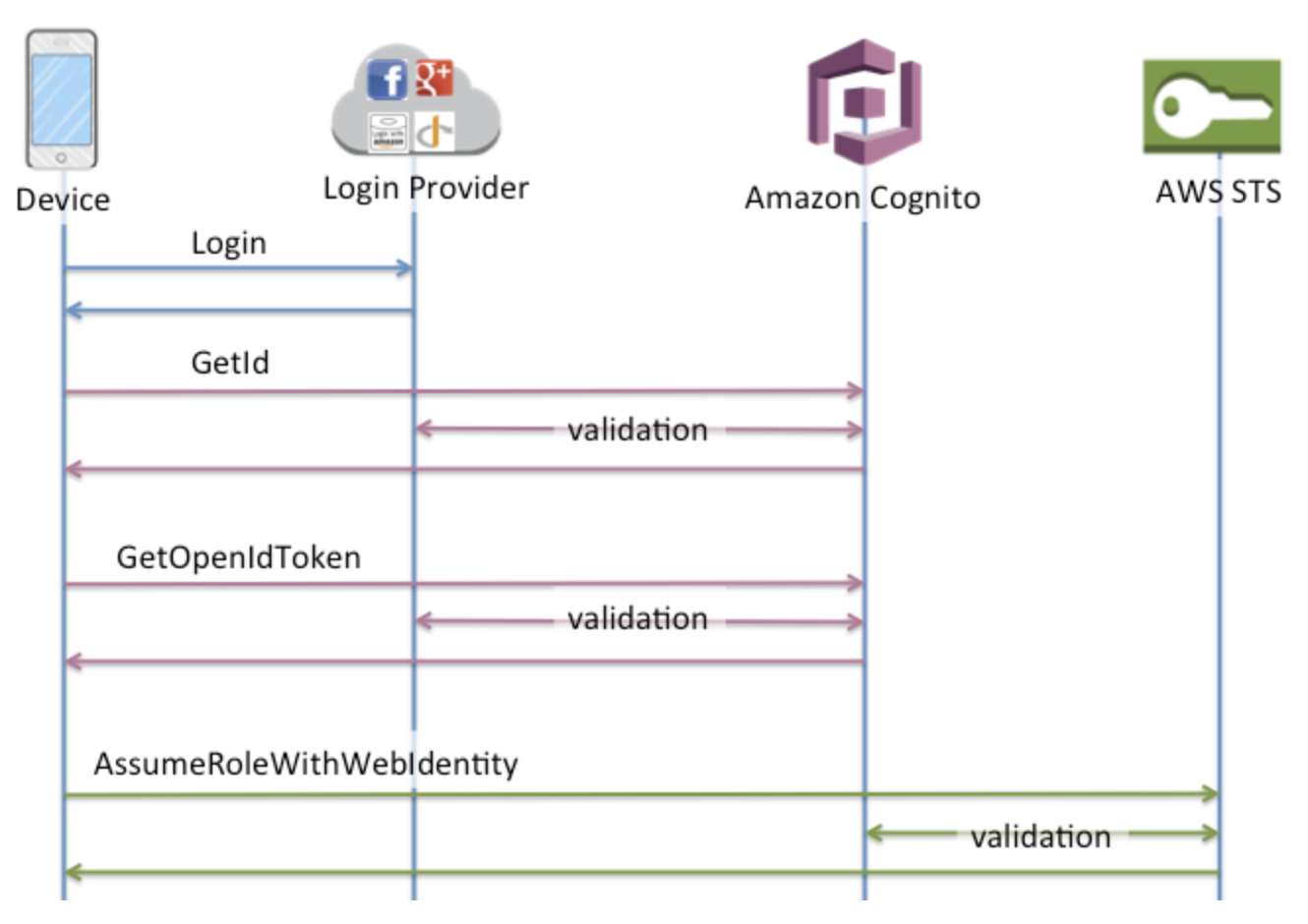

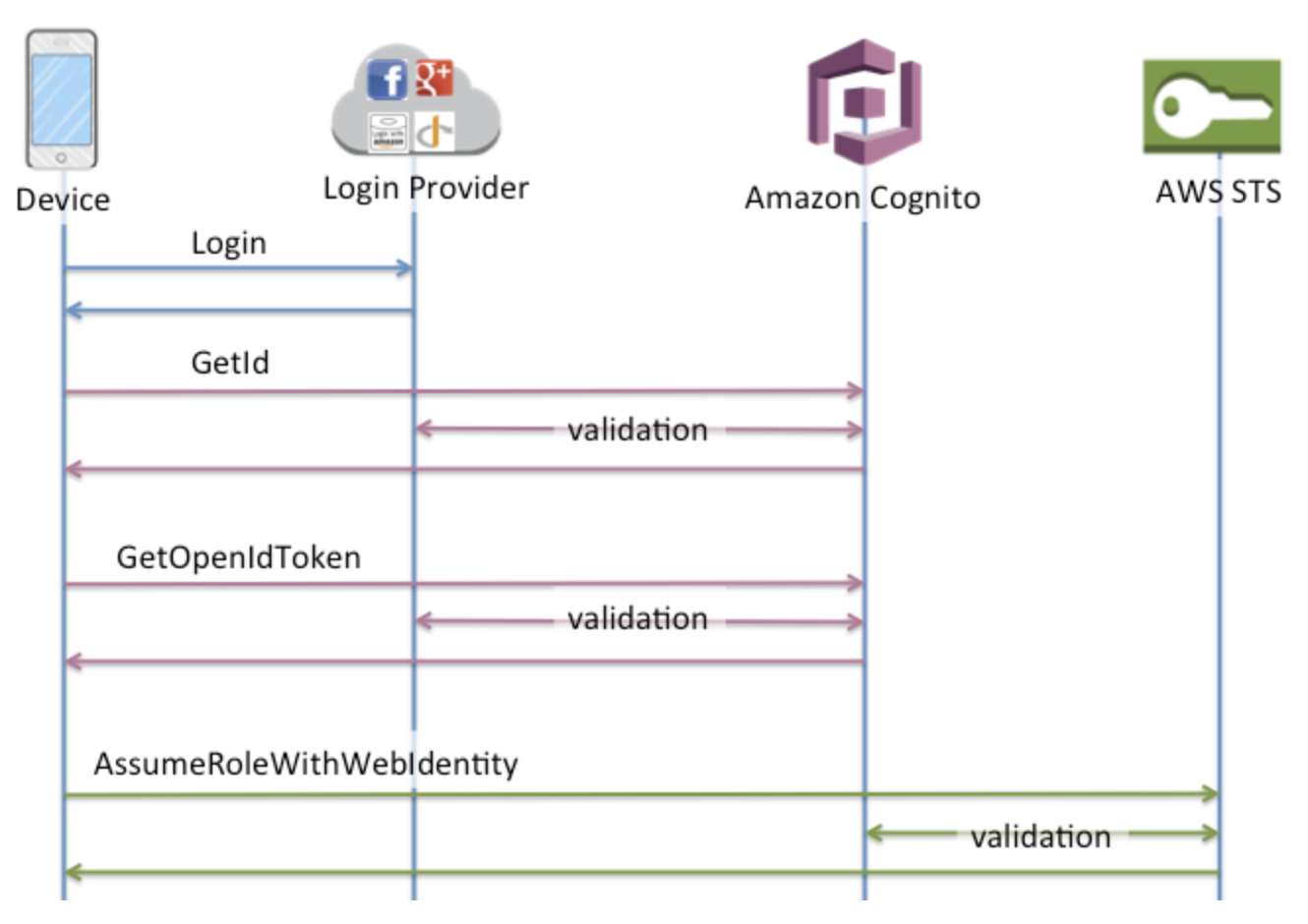

- Identity Providers > Federation Services

- AWS SSO

- Can add a provider/configure a provider

- Most common provider type is SAML

- Uses: AD Federation Services

- SAML provider establishes a trust between AWS and AD Federation Services

- IAM Federation

- Uses the SAML standard, which Active Directory supports (via AD Federation Services)

- How to apply policies:

- EAR: Effect, Action, Resource
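
As a rough illustration of the EAR structure, here is a minimal sketch of a policy document built as a Python dictionary and serialized to JSON. The bucket name and the chosen actions are placeholders made up for this example, not something from these notes.

```python
import json

# Hypothetical example policy: the bucket name and actions are placeholders.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Effect: whether the statement allows or denies
            "Effect": "Allow",
            # Action: the API calls the statement covers
            "Action": ["s3:GetObject", "s3:ListBucket"],
            # Resource: the ARNs the statement applies to
            "Resource": [
                "arn:aws:s3:::example-bucket",
                "arn:aws:s3:::example-bucket/*",
            ],
        }
    ],
}

policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Each statement carries exactly the three EAR keys; a policy can hold several statements in the `Statement` list.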

- Why are IAM users considered “permanent”?

- Because once their password, access key, or secret key is set, these credentials do not update or change without human interaction

- IAM Roles

- = an identity in IAM with specific permissions

- Temporary

- When you assume a role, it provides you with temporary security credentials for your role session

- Assign policy to role

- More secure to use roles instead of credentials - don't have to hardcode credentials

- Preferred option from security perspective

- Can attach/detach roles to running EC2 instances without having to stop or terminate those instances

- Simple Storage Service (S3)

- S3 is object storage

- Manages data as objects rather than in file systems or data blocks

- Any type of file

- Cannot be used to run OS or DB, only static files

- Unlimited S3 storage

- Individual objects can be up to 5 TB in size

- Universal Namespace

- All AWS accounts share S3 namespace so buckets must be globally unique

- S3 URLs:

- https://bucket-name.s3.Region.amazonaws.com/key-name
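
The URL pattern above can be sketched as a small helper. The function name and the sample bucket, Region, and key are hypothetical; the sketch only fills in the pattern shown in the notes.

```python
from urllib.parse import quote


def s3_object_url(bucket: str, region: str, key: str) -> str:
    """Build a URL following the bucket-name.s3.Region.amazonaws.com/key-name pattern."""
    # quote() percent-encodes characters such as spaces but leaves "/" untouched,
    # so keys that look like folder paths keep their shape.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


print(s3_object_url("example-bucket", "us-east-1", "photos/cat 1.png"))
```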

- When you successfully upload a file to an S3 bucket, you receive an HTTP 200 code

- S3 works off of a key-value store

- Key = name of object

- Value = data itself

- Version id

- Metadata

- Lifecycle management

- Versioning

- All versions of an object are stored and can be retrieved, including deleted ones

- Once versioning is enabled, you cannot disable it, only suspend it

- Way to protect your objects from being accidentally deleted

- Turn versioning on

- Enable MFA

- Securing your S3 data

- Server-Side Encryption

- Can set default encryption on a bucket to encrypt all new objects when they are stored in that bucket

- Access Control Lists (ACLs)

- Define which AWS accounts or groups are granted access and type of access

- Way to get fine-grained access control - can assign ACLs to individual objects within a bucket

- Bucket Policies

- Bucket-wide policies that define what actions are allowed or denied on buckets

- In JSON format

- Data Consistency Model with S3

- Strong Read-After-Write Consistency

- After a successful write of a new object (PUT) or an overwrite of an existing object, any subsequent read request immediately receives the latest version of the object

- Strong consistency for list operations, so after a write, you can immediately perform a listing of the objects in a bucket with all changes reflected

- ACLs vs Bucket Policies

- ACLs

- Work at an individual object level

- Ie: public or private object

- Bucket Policies

- Apply bucket-wide

- Storage Classes in S3

- S3 standard

- Default

- Redundantly across greater than or equal to 3 AZs

- Frequent access

- S3 Standard - Infrequent Access (IA)

- Infrequently accessed data, but data must be rapidly accessed when needed

- Pay to access data - per GB storage price and per-GB retrieval fee

- S3 One Zone - IA

- Like S3 Standard-IA, but data is stored redundantly within a single AZ

- Great for long-lived, infrequently accessed, non-critical data

- S3 Intelligent Tiering

- 2 Tiers:

- Frequent Access

- Infrequent Access

- Optimizes Costs - automatically moves data to most cost-effective tier

- Glacier

- Way of archiving your data long-term

- Pay for each time you access your data

- Cheap storage

- 3 Glacier options:

- Glacier Instant Retrieval

Long-term data archiving with instant retrieval

- Glacier Flexible Retrieval

Ideal storage class for archive data that does not require immediate access but needs the flexibility to retrieve large data sets at no cost, such as backup or DR

Retrieval: minutes to 12 hours

- Glacier Deep Archive

Cheapest

Retain data sets for 7-10 years or longer to meet customer needs and regulatory requirements

Retrieval is 12 hours for standard and 48 hours for bulk

- Lifecycle mgmt in S3

- Automates moving objects between different storage tiers to max cost-effectiveness

- Can be used with versioning
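
To make the idea of automated tier transitions concrete, here is a minimal sketch of a lifecycle configuration written as a Python dictionary. The rule ID, the `logs/` prefix, and the day counts are assumptions chosen for illustration; the shape follows the S3 lifecycle configuration format as I understand it, so check it against the current S3 documentation before relying on it.

```python
import json

# Hypothetical rule: the ID, prefix, and day counts are placeholders.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-logs",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},
            # Current versions: move to cheaper tiers as the objects age.
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            # With versioning on, older (noncurrent) versions can be tiered separately.
            "NoncurrentVersionTransitions": [
                {"NoncurrentDays": 30, "StorageClass": "GLACIER"},
            ],
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```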

- S3 Object Lock

- Can use object lock to store objects using a Write Once Read Many (WORM) model

- Can help prevent objects from being deleted or modified for a fixed amount of time OR indefinitely

- 2 modes of S3 Object Lock:

- Governance Mode

- Users cannot overwrite or delete an object version or alter its lock settings unless they have special permissions

- Compliance Mode

- A protected object version cannot be overwritten or deleted by any user

- When object is locked in compliance mode, its retention cannot be changed/object cannot be overwritten/deleted for duration of period

- Retention Period:

Protect an object version for a fixed period of time

- Legal Holds:

Enables you to place a lock/hold on an object without an expiration period; it remains in effect until removed

- Glacier Vault Locks

- Easily deploy and enforce compliance controls for individual Glacier vaults with a vault lock policy

- Can specify controls, such as WORM, in a vault lock policy and lock the policy from future edits

- Once locked, the policy can no longer be changed

- S3 Encryption

- Types of encryption available:

- Encryption in transit

- HTTPS-SSL/TLS

- Encryption at rest: Server Side Encryption

- Enabled by default with SSE-S3

This setting applies to all objects within S3 buckets

If the file is to be encrypted at upload time, the x-amz-server-side-encryption parameter will be included in the request header

You can create a bucket policy that denies any s3 PUT (upload) that does not include this parameter in the request header
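
A bucket policy of the kind described above might look roughly like the following sketch. The bucket name and statement ID are placeholders; the `Null` condition matches requests in which the `x-amz-server-side-encryption` header is absent.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name

deny_unencrypted_puts = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyPutWithoutSSEHeader",  # hypothetical statement ID
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            # "Null": "true" is satisfied when the header is missing from the request.
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        }
    ],
}

print(json.dumps(deny_unencrypted_puts, indent=2))
```

Because the statement is an explicit Deny, it overrides any Allow that would otherwise permit the upload.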

- 3 Types:

SSE-S3

S3-managed keys, using AES 256-bit encryption

Most common

SSE-KMS

AWS key mgmt service-managed keys

If you use SSE-KMS to encrypt your objects in S3, you must keep in mind the per-region KMS request limits

Uploading AND downloading will count towards the limit

SSE-C

Customer-provided keys

- Encryption at rest: Client-Side Encryption

- You encrypt the files yourself before you upload them to S3

- The more prefixes (folders/subfolders) you spread objects across in S3, the better the performance

- S3 Performance:

- Uploads

- Multipart Uploads

- Recommended for files over 100 MB

- Required for files over 5GB

- = Parallelize uploads to increase efficiency
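
The two size thresholds above can be captured in a small decision helper. This is only a sketch of the rule of thumb from these notes; the function name and return strings are made up for illustration.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def upload_strategy(size_bytes: int) -> str:
    """Return the upload style suggested by the thresholds in these notes."""
    if size_bytes > 5 * GB:
        return "multipart (required)"
    if size_bytes > 100 * MB:
        return "multipart (recommended)"
    return "single PUT"


print(upload_strategy(50 * MB))
print(upload_strategy(2 * GB))
print(upload_strategy(6 * GB))
```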

- Downloads

- S3 Byte-Range Fetches

- Parallelize downloads by specifying byte ranges

- If there is a failure in the download, it is only for that specific byte range

- Used to speed up downloads

- Can be used to download partial amounts of a file - for ex: header info
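
As a sketch of how a download could be split for parallel byte-range fetches, the helper below turns an object size into HTTP `Range` header values. The function name and chunk sizes are illustrative assumptions; sending the actual requests is left out.

```python
def byte_ranges(object_size: int, chunk_size: int) -> list[str]:
    """Split an object of object_size bytes into HTTP Range header values."""
    if object_size < 0 or chunk_size <= 0:
        raise ValueError("object_size must be >= 0 and chunk_size must be > 0")
    ranges = []
    for start in range(0, object_size, chunk_size):
        # Range ends are inclusive, hence the -1.
        end = min(start + chunk_size, object_size) - 1
        ranges.append(f"bytes={start}-{end}")
    return ranges


print(byte_ranges(10, 4))  # ['bytes=0-3', 'bytes=4-7', 'bytes=8-9']
```

Each range can be fetched independently, so a failed chunk only needs that one range retried; fetching just the first range is the "header info" case mentioned above.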

- S3 Replication

- Can replicate objects from one bucket to another

- Versioning MUST be enabled on both buckets (source and destination buckets)

- Turn on replication, then replication is automatic afterwards

- S3 Batch Replication

- Allows replication of existing objects to different buckets on demand

- Delete markers are NOT replicated by default

- Can enable it when creating the replication rule

- EC2: Elastic Compute Cloud

- Pricing Options

- On-Demand

- Pay by hour or second, depending on instance

- Flexible - low cost without upfront cost or commitment

- Use Cases:

- Apps with short-term, spiky, or unpredictable workloads that cannot be interrupted

- Compliance

- Licensing

- Reserved

- For 1-3 years

- Up to 72% discount compared to on-demand

- Use Cases:

- Predictable usage

- Specific capacity requirements

- Types of RIs:

- Standard RIs

- Convertible RIs

Up to 54% off on-demand

You have the option to change to a different class of RI type of equal or greater value

- Scheduled RIs

Launch within timeframe you define

Match your capacity reservation to a predictable recurring schedule that only requires a fraction of day/wk/mo

- Reserved Instances operate at a REGIONAL level

- Spot

- Purchase unused capacity at a discount of up to 90%

- Prices fluctuate with supply and demand

- You set the maximum price you are willing to pay for the capacity; you get the instance while the spot price is at or below that price, and you lose it when the spot price rises above it

- Use Cases:

- Flexible start and end times

- Cost sensitive

- Urgent need for large amounts of additional capacity

- Spot Fleet

- A collection of spot instances and (optionally) on-demand instances

- Attempts to launch that number of spot instances and on-demand instances to meet the target capacity you specified

It is fulfilled if there is available capacity and the max price you specified in the request exceeds the spot price

Launch pools - different details on when to launch

- 4 strategies with spot fleets available:

Capacity optimized

Spot instances come from the pool with optimal capacity for the number of instances launched

Diversified

Spot instances are distributed across all pools

Lowest price

Spot instances come from the pool with the lowest price

This is the default strategy

InstancePoolsToUseCount

Distributed across the number of spot instance pools you specify

This parameter is only valid when used in combo with lowestPrice

- Dedicated

- Physical EC2 server dedicated for your use

- Most expensive

- Pricing Calculator

- Can use to estimate what your infrastructure will cost in AWS

- Bootstrap Scripts

- Script that runs when the instance first boots, with root privileges

- Starts with a shebang: #!/bin/bash

- EC2 Metadata

- Can use curl command in bootstrap to save metadata into a text file, for example

- Networking with EC2

- 3 different types of networking cards

- Elastic Network Interface (ENI)

- For basic, day-to-day networking

- Use cases:

Create a management network

Use network and security appliances in VPC

Create dual-homed instances with workloads and roles on distinct subnets

Create a low budget, HA solution

- EC2s by default will have ENI attached to it

- Enhanced Networking (EN)

- Uses single root I/O virtualization (SR-IOV) to provide high performance

- For high performance networking between 10 Gbps-100 Gbps

- Types of EN

Elastic Network Adapter (ENA)

Supports network speeds of up to 100 Gbps for supported instance types

Intel 82599 Virtual Function (VF) Interfaces

Used in Older instances

Always choose ENA over VF

- Elastic Fabric Adapter (EFA)

- Accelerates High performance Computing (HPC) and ML apps

- Can also use OS-Bypass

Enables HPC and ML apps to bypass the OS kernel and communicate directly with the EFA device - Linux only

- Optimizing EC2 with Placement Groups

- 3 types of placement groups

- Cluster Placement Groups

- Grouping of instances within a single AZ

- Recommended for apps that need low network latency, high network throughput or both

- Only certain instance types can be launched into a cluster PG

- Cannot span multiple AZs

- AWS recommends homogeneous instances within a cluster

- Spread Placement Group

- Each placed on distinct underlying hardware

- Recommended for apps that have small number of critical instances that should be kept separate

- Used for individual instances

- Can span multiple AZs

- Partition Placement Group

- Each partition PG has its own set of racks, each rack has its own network and power source

- No two partitions within PG share the same racks, allowing you to isolate impact of HW failure

- EC2 divides each group into logical segments called partitions (basically = a rack)

- Can span multiple AZs

- You can't merge PGs

- You can move an existing instance into a PG

- Must be in stopped state

- Has to be done via CLI or SDK

- EC2 Hibernation

- When you hibernate an EC2 instance, the OS is told to perform suspend-to-disk

- Saves the contents from the instance memory (RAM) to your EBS root volume

- We persist the instance EBS root volume and any attached EBS data volumes

- Instance RAM must be less than 150GB

- Instance families include - C, M, and R instance families

- Available for Windows, Amazon Linux 2 AMI, Ubuntu

- Instances cannot be hibernated for more than 60 days

- Available for on-demand and reserved instances

- Deploying vCenter in AWS with VMware Cloud on AWS

- Used by orgs for private cloud deployment

- Use Cases - why VMware on AWS

- Hybrid Cloud

- Cloud Migration

- Disaster Recovery

- Leverage AWS Services

- AWS Outposts

- Brings the AWS data center directly to you, on-prem

- Allows you to have AWS services in your data center

- Benefits:

- Allows for hybrid cloud

- Fully managed by AWS

- Consistency

- Outposts Family members

- Outposts Racks - large

- Outposts Servers - smaller

- Elastic Block Store (EBS)

- Virtual disk, storage volume you can attach to EC2 instances

- Can be used like any system disk: install apps and OS’s, run DBs, store data, create file systems

- Designed for mission critical workloads - HA and auto replicated within single AZ

- Different EBS Volume Types

- General Purpose SSD

- gp2/3

- Balance of price and performance

- Good for boot volumes and general apps

- Provisioned IOPS SSD (PIOPS)

- io1/2

- Super fast, high performance, most expensive

- IO intensive apps, high durability

- Throughput Optimized HDD (ST1)

- Low-cost HDD volume

- Frequently accessed, throughput-intensive workloads

- Throughput = used more for big data, data warehouses, ETL, and log processing

- Cost effective way to store mountains of data

- CANNOT be a boot volume

- Cold HDD (SC1)

- Lowest cost option

- Good choice for colder data requiring fewer scans/day

- Good for apps that need lowest cost and performance is not a factor

- CANNOT be a boot volume

- Only static images, file system

- IOPS vs Throughput

- IOPS

- Measures the number of read and write Operations/second

- Important for quick transactions, low-latency apps, transactional workloads

- Choose provisioned IOPS SSD (io1/2)

- Throughput

- Measures the amount of data read or written per sec (MB/s)

- Important metrics for large datasets, large IO sizes, complex queries

- Ability to deal with large datasets

- Choose throughput optimized HDD (ST1)
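
The two metrics are linked by the I/O size: throughput is roughly IOPS multiplied by the size of each operation. The sketch below works through that arithmetic; the sample numbers are illustrative only and are not EBS volume limits.

```python
def throughput_mib_per_s(iops: int, io_size_kib: int) -> float:
    """Throughput in MiB/s for a given operation rate and I/O size."""
    return iops * io_size_kib / 1024


# Many small operations: high IOPS, modest throughput.
print(throughput_mib_per_s(3000, 16))   # 46.875
# Fewer large operations: low IOPS, high throughput.
print(throughput_mib_per_s(500, 1024))  # 500.0
```

This is why transactional workloads with small I/Os are judged on IOPS, while big sequential scans are judged on throughput.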

- Volumes vs Snapshots

- Volumes exist on EBS

- Must have a minimum of 1 volume per EC2 instance - called root device volume

- Snapshots exist on S3

- Point in time copy of a volume

- Are incremental

- For consistent snapshots: stop instance

- Can only share snapshots within region they were created, if want to share outside, have to copy to destination region first

- Things to know about EBS’s:

- Can resize on the fly; just extend the filesystem afterwards

- Can change volume types on the fly

- EBS will always be in the same AZ as EC2

- If we stop an instance, data is kept on EBS disk

- EBS volumes are NOT encrypted by default

- EBS Encryption

- Uses KMS customer master keys (CMK) when creating encrypted volumes and snapshots

- Data at rest is encrypted in volume

- Data inflight between instance and volume is encrypted

- All volumes created from the snapshot are encrypted

- End-to-end encryption

- Important to remember: copying an unencrypted snapshot allows encryption

- 4 steps to encrypt an unencrypted volume:

- Create a snapshot of the unencrypted volume

- Create a copy of the snapshot and select the encrypt option

- Create an AMI from the encrypted snapshot

- Use that AMI to launch new encrypted instances

- Elastic File System (EFS)

- Managed NFS (Network File System) that can be mounted on many EC2 instances

- Shared storage

- EFS provides NAS-style file storage for EC2 instances, based on Network File System (NFSv4)

- EC2 has a mount target that connects to the EFS

- Use Cases:

- Web server farms, content mgmt systems, shared db access

- Uses NFSv4 protocol

- Linux-Based AMIs only (not windows)

- Encryption at rest with KMS

- Performance

- Amazing performance capabilities

- 1000s concurrent connections

- 10 Gbps throughput

- Scales to petabytes

- Set the performance characteristics:

- General Purpose - web servers, content management

- Max I/O - big data, media processing

- Storage Tiers for EFS

- Standard - frequently accessed files

- Infrequently Accessed

- FSx For Windows

- Provides a fully managed native Microsoft Windows file system so you can easily move your windows-based apps that require file storage to AWS

- Built on Windows servers

- If see anything regarding:

- sharepoint service

- shared storage for windows

- Active directory migration

- Managed Windows Server that runs Server Message Block (SMB)-based file services

- Supports AD users, ACLs, groups, and security policies, along with Distributed File System (DFS) namespaces and replication

- FSx for Lustre:

- Managed file system that is optimized for compute-intensive workloads

- Use Cases:

- High performance computing, AI, ML, Media Data processing workflows, electronic design automation

- With FSx for Lustre, you can launch and run a Lustre file system that can process massive datasets at up to 100s of Gbps of throughput, millions of IOPS, and sub-millisecond latencies

- When To pick EFS vs FSx for Windows vs FSx for Lustre

- EFS

- Need distributed, highly resilient storage for Linux

- FSx for Windows

- Central storage for windows (IIS server, AD, SQL Server, Sharepoint)

- FSx for Lustre

- High speed, high-capacity, AI, ML

- IMPORTANT: Can store data directly on S3

- Amazon Machine Images: EBS vs Instance Store

- An AMI provides the info required to launch an instance

- *AMIs are region-specific

- 5 things you can base your AMIs on:

- Region

- OS

- Architecture (32 vs 64-bit)

- Launch permissions

- Storage for the root device (root volume)

- All AMIs are categorized as backed by one of these:

- EBS

- The root device for an instance launched from the AMI is an EBS volume created from EBS snapshot

- CAN be stopped

- Will not lose data if instance is stopped

- By default, the root volume is deleted on termination, but you can tell AWS to keep the root EBS volume

- PERMANENT storage

- Instance Store

- Root device for an instance launched from the AMI is an instance store volume created from a template stored in S3

- Are ephemeral storage

- Meaning they cannot be stopped

- If underlying host fails, you will lose your data

CAN reboot the instance without losing your data

- If you delete your instance, you will lose the instance store volume

- AWS Backup

- Allows you to consolidate your backups across multiple AWS Services such as EC2, EBS, EFS, FSx for Lustre, FSx for Windows file server and AWS Storage Gateway

- Backups can be used with AWS Organizations to backup multiple AWS accounts in your org

- Gives you centralized control across all services, in multiple AWS accounts across the entire AWS org

- Benefits

- Central management

- Automation

- Improved Compliance

- Policies can be enforced, and encryption

- Auditing is easy

- Relational Database Service

- 6 different RDS engines

- SQL Server

- Oracle

- MySQL

- PostgreSQL

- MariaDB

- Aurora

- When to use RDS’s:

- Generally used for Online Transaction Processing (OLTP) workloads

- OLTP: transaction

- Large numbers of small transactions in real-time

- Different than OLAP (Online Analytical Processing)

- OLAP:

Processes complex queries to analyze historical data

All about data analysis using large amounts of data as well as complex queries that take a long time

RDS’s are NOT suitable for this purpose → use a data warehouse option like Redshift, which is optimized for OLAP

- Multi-AZ RDSs

- Aurora cannot be single AZ

- All others can be configured to be multi-AZ

- Creates an exact copy of your prod db in another AZ, automatically

- When you write to your prod db, this write will automatically synchronize to the standby db

- Unplanned Failure or Maintenance:

- Amazon auto detects any issues and will auto failover to the standby db via updating DNS

- Multi-AZ is for DISASTER RECOVERY, not for performance

- CANNOT connect to standby db when primary db is active

- Increase read performance with read replicas

- Read replica is a read-only copy of the primary db

- You run queries against the read-only copy and not the primary db

- Read replicas are for PERFORMANCE boosting

- Each read replica has its own unique DNS endpoint

- Read replicas can be promoted as their own dbs, but it breaks the replication

- For analytics for example

- Multiple read replicas are supported = up to 5 to each db instance

- Read replicas require auto backups to be turned on

- Aurora

- MySQL- and PostgreSQL-compatible relational database engine that combines the speed and availability of high-end commercial dbs with the simplicity and cost-effectiveness of open-source dbs

- 2 copies of data in each AZ with minimum of 3 AZs → 6 copies of data

- Aurora storage is self-healing

- Data blocks and disks are continuously scanned for errors and repaired automatically

- 3 types of Aurora Replicas Available:

- Aurora Replicas = 15 read replicas

- MySQL Replicas = 5 read replicas with Aurora MySQL

- PostgreSQL = 5 read replicas with Aurora PostgreSQL

- Aurora Serverless

- An on-demand, auto-scaling configuration for the MySQL-compatible and PostgreSQL-compatible editions of Aurora

- An Aurora serverless db cluster automatically starts up, shuts down, and scales capacity up or down based on your app’s needs

- Use Cases:

- For spikey workloads

- Relatively simple, cost-effective option for infrequent, intermittent, or unpredictable workloads

- DynamoDB

- Proprietary NON-relational DB

- Fast and flexible NoSQL db service for all applications that need consistent, single-digit millisecond latency at any scale

- Fully managed db that supports both document and key-value data models

- Use Cases:

- Flexible data model and reliable performance make it great fit for mobile, web, gaming, ad-tech, IoT, etc

- 4 facts on DynamoDB:

- All stored on SSD Storage

- Spread across 3 geographically distinct data centers

- Eventually consistent reads by default

- This means that consistency across all copies of data is usually reached within a second. Repeating a read after a short time should return the updated data. Best read performance

- Can opt for strongly consistent reads

- This means that all copies return a result that reflects all writes that received a successful response prior to that read

- DynamoDB Accelerator (DAX)

- Fully managed, HA, in-memory cache

- 10x performance improvement

- Reduces request time from milliseconds to microseconds

- Compatible with DynamoDB API calls

- Sits in front of DynamoDB

- DynamoDB Security

- Encryption at rest with KMS

- Can connect with site-to-site VPN

- Can connect with Direct Connect (DX)

- Works with IAM policies and roles

- Fine-grained access

- Integrates with CloudWatch and CloudTrail

- VPC endpoints-compatible

- DynamoDB Transactions

- ACID Diagram/Methodology

- Atomic

- All changes to the data must be performed successfully or not at all

- Consistent

- Data must be in a consistent state before and after the transaction

- Isolated

- No other process can change the data while the transaction is running

- Durable

- The changes made by a transaction must persist

- ACID basically means if anything fails, it all rolls back

- DynamoDB transactions provide developers ACID across 1 or more tables within a single aws acct and region

- You can use transactions when building apps that require coordinated inserts, deletes, or updates to multiple items as part of a single logical business operation

- DynamoDB transactions have to be enabled in DynamoDB to use ACID

- Use Cases:

- Financial Transactions, fulfilling orders
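
The all-or-nothing behaviour can be illustrated with a toy in-memory example. This is not the DynamoDB API; the `transfer` function and account names are hypothetical, and the sketch only shows what "if anything fails, it all rolls back" means for a two-step update.

```python
import copy


def transfer(accounts: dict, source: str, target: str, amount: int) -> None:
    """Apply a debit and a credit atomically: both happen or neither does."""
    snapshot = copy.deepcopy(accounts)  # state to restore if any step fails
    try:
        accounts[source] -= amount
        if accounts[source] < 0:
            raise ValueError("insufficient funds")
        accounts[target] += amount
    except Exception:
        # Roll back every change made so far, then re-raise the error.
        accounts.clear()
        accounts.update(snapshot)
        raise


accounts = {"alice": 100, "bob": 20}
transfer(accounts, "alice", "bob", 30)
print(accounts)  # {'alice': 70, 'bob': 50}

try:
    transfer(accounts, "alice", "bob", 500)
except ValueError:
    print(accounts)  # unchanged: {'alice': 70, 'bob': 50}
```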

- 3 options for reads

- Eventual consistency

- Strong consistency

- Transactional

- 2 options for writes:

- Standard

- Transactional

- DynamoDB Backups

- On-Demand Backup and Restore

- Point-In-Time Recovery (PITR)

- Protects against accidental writes or deletes

- Restore to any point in the last 35 days

- Incremental backups

- NOT enabled by default

- Latest restorable: 5 minutes in the past

- DynamoDB Streams

- A time-ordered sequence of item-level changes in a table (FIFO)

- Data is completely sequenced

- These sequences are stored in DynamoDB Streams

- Stored for 24 hours

- Sequences are broken up into shards

- A shard is a bunch of data that has sequential sequence numbers

- Every time you make a change to a DynamoDB table, that change is stored sequentially in a stream record, and the stream is broken up into shards

- Can combine streams with Lambda functions for functionality like stored procedures

- DynamoDB Global Tables

- Managed multi-master, multi-region replication

- Way of replicating your DynamoDB tables from one region to another

- Great for globally distributed apps

- This is based on DynamoDB streams

- Streams must be turned on to enable Global Tables

- Multi-region redundancy for disaster recovery or HA

- Natively built into DynamoDB

- MongoDB-compatible DBs in Amazon DocumentDB

- DocumentDB

- Allows you to run MongoDB in the AWS cloud

- A managed db service that scales with your workloads and safely and durably stores your db info

- NoSQL

- Direct move for MongoDB

- Cannot run Mongo workloads on DynamoDB so MUST use DocumentDB

- Amazon Keyspaces

- Run Apache Cassandra Workloads with Keyspaces

- Cassandra is a distributed (runs on many machines) database that uses NoSQL, primarily for big data solutions

- Keyspaces allows you to run cassandra workloads on AWS and is fully managed and serverless, auto-scaled

- Amazon Neptune

- Implement GraphDBs - stores nodes and relationships instead of tables or documents

- Amazon Quantum Ledger DB (QLDB)

- For Ledger DB

- Are NoSQL dbs that are immutable, transparent, and have a cryptographically verifiable transaction log that is owned by one authority

- QLDB is fully managed ledger db

- Amazon Timestream

- Time-series data are data points that are logged over a series of time, allowing you to track your data

- A serverless, fully managed db service for time-series data

- Can analyze trillions of events/day up to 1000x faster and at 1/10th the cost of traditional RDSs

- Virtual Private Cloud (VPC) Networking

- VPC Overview

- Virtual data center in the cloud

- Logically isolated part of AWS cloud where you can define your own network with complete control of your virtual network

- Can additionally create a hardware VPN connection between your corporate data center and your VPC and leverage the AWS cloud as an extension of your corporate data center

- Attach a Virtual Private Gateway to our VPC to establish a VPN and connect to our instances over private corporate data center

- By default we have 1 VPC in each region

- What can we do with a VPC

- Use route table to configure between subnets

- Use Internet Gateway to create secure access to internet

- Use Network Access Control Lists (NACLs) to block specific IP addresses

- Default VPC

- User friendly

- All subnets in default VPC have a route out to the internet

- Each EC2 instance has a public and private IP address

- Has route table and NACL associated with it

- Custom VPC

- Steps to set up a VPC Connection:

- Choose IPv4 CIDR

- Note: the first 4 IP addresses and the last IP address in each subnet's CIDR block are reserved by Amazon

- Choose Tenancy

- By default, creates:

- Security Group

- Route Table

- NACL

- Create subnet associations

- Create internet gateway and attach to VPC

- Set up route table with route out to internet

- Associate subnet with VPC

- Create Security group

- With inbound, outbound rules

- Associate EC2 instance with Security Group
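
The reserved-address note from the CIDR step above can be checked with Python's standard `ipaddress` module. This is a sketch: the function names are made up, and `10.0.0.0/24` is just a sample subnet.

```python
import ipaddress


def reserved_addresses(cidr: str) -> list[str]:
    """Return the first four and the last address of a subnet CIDR block."""
    network = ipaddress.ip_network(cidr)
    picked = [network[0], network[1], network[2], network[3], network[-1]]
    return [str(address) for address in picked]


def usable_addresses(cidr: str) -> int:
    """Number of addresses left for your own resources after the 5 reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - 5


print(reserved_addresses("10.0.0.0/24"))
print(usable_addresses("10.0.0.0/24"))  # 251
```

So a /24 subnet yields 251 usable addresses rather than 256, which matters when sizing small subnets.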

- Using NAT Gateways for internet access within private subnet

- For example, we need to patch db server

- NAT Gateway:

- You can use a Network Address Translation (NAT) gateway to enable instances in a private subnet to connect to the internet or other AWS services while preventing the internet from initiating a connection with those instances

- How to do this:

- Create a NAT Gateway in our public subnet

- Allow our EC2 instance (in private subnet) to connect to the NAT Gateway

- 5 facts to remember:

- Redundant inside the AZ

- Starts at 5 Gbps and scales to 45 Gbps

- No need to patch

- Not associated with any security groups

- Automatically assigned a public IP address

- Security Groups

- Are virtual firewalls for EC2 instances

- By default, everything is blocked

- Are stateful - this means that if you send a request from your instance, the response traffic for that request is allowed to flow in, regardless of inbound security group rules

- Ie: responses to allowed inbound traffic are allowed to flow out regardless of outbound rules

- Network ACLs

- Are frontline of defense, optional layer of security for your VPC that acts as a firewall for controlling traffic in and out of one or more subnets

- You may set up NACL rules similar to your Security Group rules as an added layer of security

- Overview:

- Default NACLs

- VPC automatically comes with default NACL and by default it allows all inbound and outbound traffic

- Custom NACLs

- By default block all inbound and outbound traffic until you add rules

- Each subnet in your VPC must be associated with a NACL

- If you don't explicitly associate a subnet with a NACL, the subnet is auto associated with the default NACL

- Can associate a NACL with multiple subnets, but each subnet can only have a single NACL associated with it at a time

- NACLs have separate inbound and outbound rules

- Block IP addresses with NACLs NOT with Security Groups

- NACLs contain a numbered list of rules that are evaluated in order, starting with lowest numbered rule

- Once a match is found, stop going through list

- If you want to deny a single IP address, you have to deny FIRST before you allow all

- NACLs are stateless

- This means that responses to allowed inbound traffic are subject to the rules for outbound traffic and vice versa
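The ordered, first-match evaluation described above can be sketched in plain Python. This is a toy model only, not an AWS API: `NaclRule` and `evaluate_nacl` are hypothetical names, and the example addresses come from documentation ranges.

```python
import ipaddress
from typing import List, NamedTuple


class NaclRule(NamedTuple):
    number: int   # rule number; lower numbers are evaluated first
    cidr: str     # source CIDR the rule applies to
    action: str   # "allow" or "deny"


def evaluate_nacl(rules: List[NaclRule], source_ip: str) -> str:
    """Return the action of the first matching rule, lowest number first."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if ip in ipaddress.ip_network(rule.cidr):
            return rule.action  # first match wins; later rules are ignored
    return "deny"  # nothing matched: fall through to the catch-all deny


# Deny the single address BEFORE the broad allow, otherwise the allow matches first.
rules = [
    NaclRule(100, "203.0.113.7/32", "deny"),
    NaclRule(200, "0.0.0.0/0", "allow"),
]
```

Swapping the two rule numbers makes the allow-all rule match first, so the single address is no longer blocked.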


- VPC Endpoints

- Enables you to privately connect your VPC to supported AWS services and VPC endpoint services powered by PrivateLink without requiring an internet gateway, NAT device, VPN connection, or AWS Direct Connect connection

- Like a NAT gateway, but it doesn't use the public internet; it uses Amazon’s backbone network and stays within the AWS environment

- Endpoints are virtual devices

- Horizontally scaled, redundant, and HA VPC components that allow communication between instances on your VPC and services without imposing availability risks or bandwidth constraints on your network traffic

- Remember that NAT gateways have the 5-45 Gbps restriction - you don't want that restriction if you have an EC2 instance writing to S3, so you may have it go through the virtual endpoint (VPC endpoint)

- 2 Types of endpoints:

- Interface Endpoint

- An ENI with a private IP address that serves as an entry point for traffic headed to a supported service

- Gateway Endpoint

- Similar to NAT gateway

- Virtual device that supports connection to S3 and DynamoDB


- Use Case: you want to connect to AWS services without leaving the Amazon internal network = VPC endpoint

- VPC Peering

- Allows you to connect 1 VPC with another via a direct network route using private IP addresses

- Instances behave as if they were on the same private network

- Can peer VPCs with other AWS accounts as well as other VPCs in same account

- Can peer between regions

- Is in a star configuration with one central VPC

- No transitive peering

- Cannot have overlapping CIDR address ranges between peered VPCs
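A quick way to reason about two of the peering rules above (no overlapping CIDRs, no transitive peering) is the sketch below. It uses only the standard `ipaddress` module; `can_peer`, `can_route`, and the VPC names are hypothetical helpers for illustration, not AWS calls.

```python
import ipaddress
from typing import FrozenSet, Set


def can_peer(cidr_a: str, cidr_b: str) -> bool:
    """True when the two VPC CIDR blocks do not overlap."""
    net_a = ipaddress.ip_network(cidr_a)
    net_b = ipaddress.ip_network(cidr_b)
    return not net_a.overlaps(net_b)


def can_route(peerings: Set[FrozenSet[str]], src: str, dst: str) -> bool:
    """Traffic flows only over a direct peering; there is no hop through a third VPC."""
    return frozenset((src, dst)) in peerings


# Star configuration: vpc-a is peered with vpc-b and vpc-c.
peerings = {frozenset(("vpc-a", "vpc-b")), frozenset(("vpc-a", "vpc-c"))}
```

Here `vpc-b` and `vpc-c` cannot reach each other through `vpc-a`; they would need their own peering connection.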


- PrivateLink

- Opening up your services in a VPC to another VPC can be done in two ways:

- Open VPC up to internet

- Use VPC Peering - whole network is accessible to peer

- Best way to expose a service VPC to tens, hundreds, thousands of customer VPC is through PrivateLink

- Does Not require peering, no route tables, no NAT gateways, no internet gateways, etc

- DOES require a Network Load Balancer on the service VPC and an ENI on the customer VPC


- CloudHub

- Useful if you have multiple sites, each with its own VPN connection: use CloudHub to connect those sites together

- Overview:

- Hub and spoke model

- Low cost and easy to manage

- Operates over public internet, but all traffic between customer gateway and CloudHub is encrypted

- Essentially aggregating VPN connections to single entry point

- Direct Connect (DX)

- A cloud service solution that makes it easy to establish a dedicated network connection from your premises to AWS

- Private connectivity

- Can reduce network costs, increase bandwidth throughput, and provide a more consistent network experience than internet-based connections

- Instead of VPN

- Two types of Direct Connect Connections:

- Dedicated Connection

- Physical ethernet connection associated with a single customer

- Hosted Connection

- Physical ethernet connection that an AWS Direct Connect Partner (Verizon, etc) provisions on behalf of a customer


- Transit Gateway

- Connects VPCs and on-prem networks through a central hub

- Simplifies network by ending complex peering relationships

- Acts as a cloud router - each connection is only made once

- Connect VPCs to Transit Gateway

- Everything connected to TG will be able to talk directly

- Facts

- Allows you to have transitive peering between thousands of VPCs and on-prem datacenters

- Works on regional basis, but can have it across multiple regions

- Can use it across multiple AWS accounts using RAM (Resource Access Manager)

- Use route table to limit how VPCs talk to one another

- Supports IP Multicast which is not supported by any other AWS Service

- Wavelength

- Embeds AWS compute and storage service within 5G networks, providing mobile edge computing infrastructure for developing, deploying, and scaling ultra-low-latency applications

- Route53

- Overview

- Domain Registrars: authorities that can assign domain names directly under one or more top-level domains

- Common DNS Record Types

- SOA: Start Of Authority Record

- Stores info about:

- Name of server that supplied the data for the zone

- Administrator of the zone

- Current version of the data file

- The default TTL, in seconds, for resource records

- How it works:

- Starts with NS (Name Server) records


- NS records are used by top-level domain servers to direct traffic to the content DNS server that contains the authoritative DNS records

- So browser goes to top level domain first (.com) and will look up ‘ACG’,

- TLD will give the browser an NS record where the SOA will be stored

- Browser will browse over to the NS records and get SOA

- Start of Authority contains all of our DNS records

- A Record (or address record)

- The fundamental type of DNS record

- Used by a computer to translate the name of the domain to an IP address

- Most common type of DNS record

- TTL = time to live

- Length that a DNS record is cached on either the resolving server or the user’s own local PC

- The lower the TTL, the faster changes to DNS records propagate through the internet

- CNAME

- Canonical name can be used to resolve one domain name to another

- Ex: m.acg.com and mobile.acg.com resolve to the same address


- Alias Records

- Used to map resource sets in your hosted zone to load balancers, CloudFront distros, or S3 buckets that are configured as websites

- Works like a CNAME record in that it can map one dns name to another, but

- CNAMES cannot be used for naked domain names/zone apex record

- Alias Records CAN be used to map naked domain names/zone apex record

- Route53 Overview

- Amazon’s DNS service, that allows you to register domain names, create hosted zones, and manage and create DNS records

- 7 Routing Policies available with Route53:

- Simple Routing Policy

- Can only have one record with multiple IP addresses

- If you specify multiple values in a record, route53 returns all values to the user in a random order

- Weighted Routing Policy

- Allows you to split your traffic based on assigned weights

- Health Checks


- Can set health checks on individual record sets/servers

- So if a record set/server fails a health check, it will be removed from route53 until it passes the check

- While it is down, no traffic will be routed to it, but will resume when it passes

- Create a health check for each weighted route that we are going to create to monitor the endpoint, monitor by IP address
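How weights and health checks combine can be illustrated with a small calculation. This is a sketch under simplifying assumptions, not Route 53's implementation: `WeightedRecord` and `traffic_share` are hypothetical names, and the case where every record is unhealthy is simply returned as an empty mapping rather than modelled.

```python
from typing import Dict, List, NamedTuple


class WeightedRecord(NamedTuple):
    ip: str
    weight: int
    healthy: bool = True  # result of the record's health check


def traffic_share(records: List[WeightedRecord]) -> Dict[str, float]:
    """Fraction of DNS answers each healthy record receives (weight / sum of weights)."""
    healthy = [r for r in records if r.healthy and r.weight > 0]
    total = sum(r.weight for r in healthy)
    if total == 0:
        return {}  # not modelled in this sketch
    return {r.ip: r.weight / total for r in healthy}


records = [
    WeightedRecord("192.0.2.10", 80),
    WeightedRecord("192.0.2.20", 20),
]
```

With both records healthy the split is 80/20; if the second record fails its health check, all traffic goes to the first until the check passes again.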

- Failover Routing Policy

- When you want to create an active/passive setup

- Route53 will monitor the health of your primary site using health checks and auto-route traffic if primary site fails the check

- Geolocation Routing

- Lets you choose where your traffic will be sent based on the geographical location of your users

- Based on the location from which DNS queries originate; the end location of your user

- Geoproximity Routing Policy

- Can use Route 53 Traffic Flow to build a routing system that uses a combination of:

- Geographic location

- Latency

- Availability, to route traffic from your users to your cloud or on-prem endpoints

- Can build from scratch or use templates and customize

- Latency Routing Policy

- Allows you to route your traffic based on the lowest network latency for your end user

- Create a latency resource record set for the EC2 (or ELB) resource in each region that hosts your website

- When route53 receives a query for your site, it selects the latency resource record set for the region that gives you the lowest latency

- Multivalue Answer Routing Policy

- Lets you configure route53 to return multiple values, such as IP addresses for your web server, in response to DNS queries

- Basically similar to simple routing, however, it allows you to put health checks on each record set


- Elastic Load Balancers (ELBs)

- Auto distributes incoming traffic across multiple targets

- Can also be done across AZs

- 3 types of ELBs

- Application Load Balancer

- Best suited for balancing of HTTP and HTTPs Traffic

- Operates at layer 7

- Application-aware load balancer

- Intelligent load balancer

- Network Load Balancer

- Operates at the connection level (Layer 4)

- Capable of handling millions of requests/sec, low latencies

- A performance load balancer

- Classic Load Balancer

- Legacy load balancer

- Can load balance HTTP/HTTPS applications and use Layer 7-specific features such as X-Forwarded-For headers and sticky sessions

- For Test/Dev


- ELBs can be configured with Health Checks

- They periodically send requests to the load balancer’s registered instances to test their states [InService vs OutOfService returns]

- Application Load Balancers

- Layer 7, App-Aware Load Balancing

- After the load balancer receives a request, it evaluates the listener rules in priority order to determine which rule to apply and then selects a target from the target group for the rule action

- Listeners

- A listener checks for connection requests from clients using the protocol and port you configure

- You define the rules that determine how the load balancer routes requests to its registered targets

- Each rule consists of priority, one or more actions, and one or more conditions

- Rules

- When conditions of rule are met, actions are performed

- Must define a default rule for each listener

- Target Group

- Each target group routes requests to one or more registered targets using the protocol and port you specify

- Path-Based Routing

- Enable path patterns to make load balancing decisions based on path

- /image → certain EC2 instance

- Limitations of App Load Balancers:

- Can ONLY support HTTP/HTTPS listeners

- Can enable sticky sessions with app load balancers, but traffic will be sent at the target group level, not specific EC2 instance
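The listener-rule evaluation and path-based routing described above can be approximated as follows. This is an illustrative sketch only: `ListenerRule` and `pick_target_group` are hypothetical names, and `fnmatchcase` is used as a stand-in for ALB path patterns (it accepts a slightly larger pattern syntax than a real load balancer).

```python
from fnmatch import fnmatchcase
from typing import List, NamedTuple


class ListenerRule(NamedTuple):
    priority: int      # lower value is evaluated first
    path_pattern: str  # condition, e.g. "/image/*"
    target_group: str  # action: forward to this target group


def pick_target_group(rules: List[ListenerRule], path: str, default_target_group: str) -> str:
    """Evaluate rules in priority order; fall back to the listener's default rule."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if fnmatchcase(path, rule.path_pattern):
            return rule.target_group
    return default_target_group


rules = [
    ListenerRule(10, "/image/*", "image-servers"),
    ListenerRule(20, "/api/*", "api-servers"),
]
```

A request for `/image/cat.png` is forwarded to the `image-servers` group, while a path that matches no rule falls through to the default target group.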


- Network Load Balancer

- Layer 4, Connection layer

- Can handle millions of requests/sec

- When network load balancer has only unhealthy registered targets, it routes requests to ALL the registered targets - known as fail-open mode

- How it works

- Connection request received

- Load balancer selects a target from the target group for the default rule

- It attempts to open a TCP connection to the selected target on the port specified in the listener configuration

- Listeners

- A listener checks for connection requests from clients using the protocol and port you configure

- The listener on a network load balancer then forwards the request to the target group


- There are NO rules, unlike Application Load Balancers - cannot do intelligent routing at Layer 4

- Target Groups

- Each target group routes requests to one or more registered targets

- Supported protocols: TCP, TLS, UDP, TCP_UDP

- Encryption

- You can use a TLS listener to offload the work of encryption and decryption to your load balancer


- Use Cases:

- Best for load balancing TCP traffic when extreme performance is required

- Or if you need to use protocols that aren't supported by app load balancer

- Classic Load Balancer

- Legacy

- Can load balance HTTP/HTTPS apps and use Layer 7-specific features

- Can also use strict layer 4 load balancing for apps that rely purely on TCP protocol

- X-Forwarded-For Header

- When traffic is sent from a load balancer, the server access logs contain the IP address of the proxy or load balancer only

- To see the original IP address of the client the x-forwarded-for request header is used
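On the application side, reading the header might look like the sketch below. `client_ip` is a hypothetical helper; it assumes the leftmost entry of the comma-separated list is the original client, which only holds when the header is set by a load balancer you trust, since clients can send their own value.

```python
from typing import Mapping


def client_ip(headers: Mapping[str, str], peer_ip: str) -> str:
    """Leftmost X-Forwarded-For entry, or the direct peer address if the header is absent."""
    lowered = {name.lower(): value for name, value in headers.items()}  # header names are case-insensitive
    forwarded = lowered.get("x-forwarded-for", "")
    first = forwarded.split(",")[0].strip()
    return first or peer_ip


# The load balancer's own address (10.0.0.5) is what the server sees as the peer.
request_headers = {"X-Forwarded-For": "203.0.113.7, 10.0.1.25"}
```

Without the header, the server can only log the load balancer's address, which is the situation the notes above describe.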

- Gateway Timeouts with Classic load balancer

- If your application stops responding, the classic load balancer responds with a 504 error

- This means that the application is having issues

- Means the gateway has timed out


- Sticky Sessions

- Typically the classic load balancer routes each request independently to the registered EC2 instance with smallest load

- But with sticky sessions enabled, user will be sent to the same EC2 instance

- Problem could occur if we remove one of our EC2 instances while the user still has a sticky session going

- Load balancer will still try to route our user to that EC2 instance and they will get an error

- To fix this, we have to disable sticky sessions

- Deregistration Delay

- Aka Connection Draining with Classic load balancers

- Allows load balancers to keep existing connections open if the EC2 instances are deregistered or become unhealthy

- Can disable this if you want your load balancer to immediately close connections

- CloudWatch

- Monitoring and observability platform to give us insight into our AWS architecture

- Features

- System metrics

- The more managed a service is, the more you get out of the box

- Application Metrics

- By installing CloudWatch agent, you can get info from inside your EC2 instances

- Alarms

- No default alarms

- Can create an alarm to stop, terminate, reboot, or recover EC2 instances


- 2 kinds of metrics:

- Default

- CPU util, network throughput

- Custom

- Will need to be provided with CloudWatch agent installed on the host and reported back to CloudWatch because AWS cannot see past the hypervisor level for EC2 instances

- Ex: EC2 memory util, EBS storage capacity


- Standard vs Detailed monitoring

- Standard/Basic monitoring for EC2 provides metrics for your instances every 5 minutes

- Detailed monitoring provides metrics every 1 minute

- A period is the length of time associated with a specific CloudWatch stat - default period is 60 seconds

- CloudWatch Logs

- Tool that allows you to monitor, store, and access log files from a variety of different sources

- Gives you the ability to query your logs to look for potential issues or relevant data

- Terms:

- Log Event: data point, contains timestamp and data

- Log Stream: collection of log events from a single source

- Log Group: collection of log streams

- Ex may group all Apache web server host logs

- Features:

- Filter Patterns

- CloudWatch Log Insights

- Allows you to query all your logs using SQL-like interactive solution

- Alarms

- What services act as a source for CloudWatch logs?

- EC2, Lambda, CloudTrail, RDS

- CloudWatch is our go-to log tool, unless the exam asks for a real-time solution (then it will be Kinesis)

- Amazon Managed Grafana

- Fully managed service that allows us to securely visualize our data for instantly querying, correlating, and visualizing your operational metrics, logs, and traces from different sources

- Overview

- Grafana made easy

- Logical separation with workspaces

- Workspaces are logical Grafana servers that allow for separation of data visualizations and querying

- Data Sources for Grafana: CloudWatch, Managed Service for Prometheus, OpenSearch Service, Timestream

- Use Cases:

- Container metrics visualizations

- Connect to data sources like Prometheus for visualizing EKS, ECS, or own Kube cluster metrics

- IoT


- Amazon Managed Service for Prometheus

- Serverless, Prometheus-compatible service used for securely monitoring container metrics at scale

- Overview

- Still use open-source prometheus, but gives you AWS managed scaling and HA

- Auto Scaling

- HA- replicates data across three AZs in same region

- EKS and self-managed Kubernetes clusters

- PromQL: the open source query language for exploring and extracting data

- Data Retention:

- Data is stored in workspaces for 150 days, then deleted

- VPC Flow Logs

- Can configure to send to S3 bucket

- Horizontal and Vertical Scaling

- Launch Templates

- Specifies all of the needed settings that go into building out an EC2 instance

- More than just auto-scaling

- More granularity

- AWS recommends Launch templates over Configurations

- Configurations are only for auto-scaling, are immutable, limited configuration options, don't use them

- Create template for Auto Scaling Group


- Auto Scaling

- Auto Scaling Groups

- Contains a collection of EC2 instances that are treated as a collective group for the purposes of scaling and management

- What goes into auto scaling group?

- Define your template

- Pick from available launch templates or launch configurations

- Pick your networking and purchasing

- Pick networking space and purchasing options

- ELB configuration

- ELB sits in front of auto scaling group

- EC2 instances are registered behind a load balancer

- Auto scaling can be set to respect the load balancer health checks

- Set Scaling policies

- Min/Max/Desired capacity

- Notifications

- SNS can act as notification tool, alert when a scaling event occurs


- Step Scaling Policies

- Increase or decrease the current capacity of a scalable target based on scaling adjustments, known as step adjustments

- Adjustments vary based on the size of the alarm breach

- All alarms that are breached are evaluated by application auto scaling
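To make "adjustments vary based on the size of the alarm breach" concrete, here is a minimal sketch of picking a step adjustment. The names `StepAdjustment` and `capacity_change`, the 60% CPU threshold, and the step sizes are all assumptions chosen for illustration; bounds are expressed relative to the alarm threshold, lower bound inclusive and upper bound exclusive.

```python
from typing import List, NamedTuple, Optional


class StepAdjustment(NamedTuple):
    lower: float            # breach size lower bound (inclusive), relative to the threshold
    upper: Optional[float]  # breach size upper bound (exclusive); None means unbounded
    change: int             # instances to add (negative would remove)


def capacity_change(metric: float, threshold: float, steps: List[StepAdjustment]) -> int:
    """Pick the adjustment whose interval contains the size of the alarm breach."""
    breach = metric - threshold
    for step in steps:
        if breach >= step.lower and (step.upper is None or breach < step.upper):
            return step.change
    return 0  # metric is below the threshold: no scaling action


# Hypothetical scale-out policy for an alarm at 60% average CPU.
steps = [
    StepAdjustment(0, 10, 1),     # 60-70% CPU: add 1 instance
    StepAdjustment(10, 20, 2),    # 70-80% CPU: add 2 instances
    StepAdjustment(20, None, 4),  # 80%+ CPU: add 4 instances
]
```

A small breach triggers a small adjustment and a large breach a bigger one, which is the main difference from a simple scaling policy with a single fixed adjustment.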

- Instance Warm-Up and Cooldown

- Warm-up period - time for EC2s to come up before being placed behind LB

- Cooldown - pauses auto scaling for a set amount of time (default is 5 minutes)

- Warmup and cooldown help to avoid thrashing

- Scaling types

- Reactive Scaling

- Once the load is there, you measure it and then determine if you need to create more or less resources

- Respond to data points in real-time, react

- Scheduled Scaling

- Predictable workload, create a scaling event to handle

- Predictive Scaling

- AWS uses ML algorithms to determine

- They are reevaluated every 24 hours to create a forecast for the next 48


- Steady Scaling

- Lets a legacy codebase or resource that can't be scaled automatically recover from failure

- Set Min/Max/Desired = 1

- CloudWatch is your number one tool for alerting auto scaling that you need more or less of something

- Scaling Relational DBs

- Most scaling options

- 4 ways to scale Relational DBs/4 types of scaling we can use to adjust our RD performance

- Vertical Scaling

- Resizing the db from one size to another, can create greater performance, increase power

- Scaling Storage

- Storage can be resized up, not down

- Except aurora which auto scales

- Read Replicas

- Way to scale “horizontally” - create read only copies of our data

- Aurora Serverless

- Can offload scaling to AWS - excels with unpredictable workloads


- Scaling Non Relational DBs

- DynamoDB

- AWS managed - simplified

- Provisioned Model

- Use case: predictable workload

- Need to review past usage to predict and set limits

- Most cost effective

- On-Demand

- Use case: sporadic workload

- Pay more

- Can switch from on-demand to provisioned only once per 24 hours per table

- Non-Relational DB scaling

- Access patterns

- Design matters

- Avoiding hot keys will lead to better performance


- Simple Queue Service (SQS)

- Fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless apps

- Can sit between frontend and backend and kind of replace the Load Balancer

- Web front end dumps messages into the queue and then backend resources can poll that queue looking for that data whenever it is ready

- Does not require that active connection that the load balancer requires

- Poll-Based Messaging

- We have a producer of messages, consumer comes and gets message when ready

- Messaging queue that allows asynchronous processing of work

- SQS Settings

- Delivery Delay - default 0, up to 15 minutes

- Message Size - up to 256 KB text in any format

- Encryption - encrypted in transit by default; encryption at rest is now also on by default with SSE-SQS

- SSE-SQS = Server-Side Encryption using SQS-owned encryption

- Encryption at rest using the default SSE-SQS is supported at no charge for both standard and FIFO using HTTPS endpoints


- Message Retention

- Default retention is 4 days, can be set from 1 minute - 14 days, then purged

- Long vs Short Polling

- Long polling is not the default, but should be

- Short Polling

- Connect, checks if work, immediately disconnects if no work

- Burns CPU, additional API calls cost money

- Long Polling

- Connect, check if work, waits a bit

- Mostly will be the right answer

- Queue Depth

- This value can be a trigger for auto scaling

- Visibility Timeout

- Used to ensure proper handling of the message by backend EC2 instances

- Backend polls for the message, sees it, downloads that message from SQS to do work - after backend downloads message, SQS puts a lock on that message called the visibility timeout, where the message remains in the queue but no one else can see it

- So if other instances are polling that queue, they will not see the locked message

- Default visibility timeout is 30 seconds, but can be changed

- If the EC2 instance that downloaded the message fails to process it and never tells SQS to delete it, the message reappears in the queue once the visibility timeout expires

- Dead-Letter Queues

- Without a DLQ, a message that cannot be processed is pulled by a backend EC2 instance, fails, and reappears once the 30 sec visibility timeout expires. Another EC2 instance picks it up, and the cycle repeats until the message retention period ends and the message is deleted

- By implementing DLQ: we create another SQS Queue that we can temporarily sideline messages into

- How it works:

- Set up a new queue and select it as the DLQ when setting up the primary SQS queue

- Set a number for retries in the primary queue

- Once the message hits that limit, it gets moved to the DLQ, where it stays until the message retention period ends, then it is deleted

- Can create SQS DLQ for SNS topics
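The interaction between the visibility timeout and a dead-letter queue can be walked through with a toy in-memory model. This is not the SQS API: `SimQueue` and its methods are hypothetical, time is passed in explicitly as `now` (in seconds), and a retry limit of 2 is chosen only to keep the example short.

```python
from typing import Dict, List, Optional


class SimQueue:
    """Toy model of a queue with a visibility timeout and a retry limit."""

    def __init__(self, visibility_timeout: int = 30, max_receive_count: int = 3):
        self.visibility_timeout = visibility_timeout
        self.max_receive_count = max_receive_count
        self.messages: Dict[str, dict] = {}  # id -> body, visible_at, receives
        self.dead_letters: List[str] = []    # bodies sidelined into the DLQ

    def send(self, msg_id: str, body: str) -> None:
        self.messages[msg_id] = {"body": body, "visible_at": 0, "receives": 0}

    def receive(self, now: int) -> Optional[str]:
        """Return the id of one visible message and lock it for the timeout."""
        for msg_id, msg in list(self.messages.items()):
            if msg["visible_at"] > now:
                continue  # still locked by another consumer
            if msg["receives"] >= self.max_receive_count:
                # Retry limit reached: move to the DLQ instead of redelivering.
                self.dead_letters.append(msg["body"])
                del self.messages[msg_id]
                continue
            msg["receives"] += 1
            msg["visible_at"] = now + self.visibility_timeout
            return msg_id
        return None

    def delete(self, msg_id: str) -> None:
        """The consumer finished the work, so the message is removed for good."""
        self.messages.pop(msg_id, None)
```

A consumer that calls `delete` before the timeout expires ends the cycle; one that keeps failing lets the message reappear until the retry limit moves it to `dead_letters`.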

- SQS FIFO

- Standard SQS offers best effort ordering and tries not to duplicate, but may - nearly unlimited transactions/sec

- SQS FIFO guarantees the order and that no duplication will occur

- Limited to 300 messages/sec

- How it works:

- Message group ID field is a tag that specifies that a message belongs to a specific message group

- Message Deduplication ID is the token used to ensure no duplication within the deduplication interval: a unique value that ensures that your messages are unique

- More expensive than standard SQS
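The roles of the message group ID and the deduplication ID can be sketched with another toy model. `FifoSim` is hypothetical and keeps everything in memory; the 5-minute constant reflects the SQS FIFO deduplication interval, and time is passed in explicitly in seconds.

```python
from typing import Dict, List

DEDUP_INTERVAL_SECONDS = 5 * 60  # FIFO deduplication interval


class FifoSim:
    """Toy model: ordering per message group, deduplication per dedup ID."""

    def __init__(self) -> None:
        self._seen: Dict[str, float] = {}       # dedup id -> time it was first accepted
        self.groups: Dict[str, List[str]] = {}  # group id -> bodies in arrival order

    def send(self, body: str, group_id: str, dedup_id: str, now: float) -> bool:
        """Return True if the message is enqueued, False if dropped as a duplicate."""
        first_seen = self._seen.get(dedup_id)
        if first_seen is not None and now - first_seen < DEDUP_INTERVAL_SECONDS:
            return False  # same dedup ID inside the interval: not delivered again
        self._seen[dedup_id] = now
        self.groups.setdefault(group_id, []).append(body)
        return True
```

Messages within one group keep their order, while a retry that reuses the same deduplication ID within the interval does not produce a second copy.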

- Simple Notification Service (SNS)

- Used to push out notifications - proactively deliver the notification to an end-point rather than leaving it in a queue

- Fully managed messaging service for both application-to-application (A2A) and application-to-person (A2P) communication

- Texts and emails to users

- Push-Based Messaging

- Consumer does not have control to receive when ready, the sender sends it all the way to the consumer

- Will proactively deliver messages to the endpoints that are Subscribed to it

- SNS topics are subscribed to

- SNS Settings

- Subscribers

- what/who is going to receive the data from the topic

- Ex: Kinesis firehose, SQS, Lambda, email, HTTP(S), etc


- Message Size

- Max size of 256 KB of text in any format

- SNS does not retry, even if they fail to deliver

- Can store in an SQS DLQ to handle

- SNS FIFO only supports SQS as a subscriber

- Messages are encrypted in transit by default, and you can add encryption at rest

- Access Policies

- Can control who/what can publish to those SNS topics

- A resource policy can be added to a topic, similar to S3

- Have to make sure AP is set up properly with SNS to SQS so that SNS can have access to SQS Queue


- CloudWatch uses SNS to deliver alarms

- API Gateway

- Fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale

- “Front-door” to our apps so we can control what users talk to our resources

- Key features:

- Security

- Add security- one of the main reasons for using API Gateway in front of our applications

- Allows you to easily protect your endpoints by attaching a WAF; Can front API Gateway with a WAF - security at the edge

- Stop Abuse

- Set up rate limiting, DDoS protection

- Static stuff to S3, basically everything else to API Gateway


- Preferred method to get API calls into your application and AWS environment

- Avoid hardcoding our access keys/secret keys with API Gateway

- Do not have to generate an IAM user to make calls to the backend, just send API call to API Gateway in front

- API Gateway supports versioning
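The rate limiting mentioned under "Stop Abuse" follows the token bucket idea: a steady refill rate plus a burst allowance. The sketch below is a generic token bucket, not API Gateway's implementation; `TokenBucket`, its parameters, and the explicit `now` argument (seconds) are assumptions for illustration.

```python
class TokenBucket:
    """Generic token bucket: `rate` tokens refill per second, up to `burst` tokens."""

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate            # steady-state requests per second
        self.burst = burst          # maximum number of requests allowed at once
        self.tokens = float(burst)  # the bucket starts full
        self.last = 0.0             # time of the previous call

    def allow(self, now: float) -> bool:
        """Spend one token if available; otherwise the request is throttled."""
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would answer with 429 Too Many Requests
```

With `rate=1` and `burst=2`, two requests at the same instant pass, a third is rejected, and one more is allowed after a second has elapsed.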

- AWS Batch

- AWS Managed service that allows us to run batch computing workloads within AWS- these workloads run on either EC2 or Fargate/ECS

- Capable of provisioning accurately sized compute resources based on number of jobs submitted and optimizes the distribution of workloads

- Removes any heavy lifting for configuration and management of infrastructure required for computing

- Components:

- Jobs = units of work that are submitted to Batch (ie: shell scripts, executables, docker images)

- Job Definitions = specify how your jobs are to be run, essentially the blueprint for the resources in the job

- Job Queues = jobs get submitted to specific queues and reside there until scheduled to run in a compute environment

- Compute Environment = set of managed or unmanaged compute resources used to run your jobs (EC2 or ECS/Fargate)

- Fargate or EC2 Compute Environments

- Fargate is the recommended way of launching most batch jobs

- Scales and matches your needs with less likelihood of over provisioning

- EC2 is sometimes the best choice, though:

- When you need a custom AMI (can only be run via EC2)

- When you have high vCPU requirements

- When you have high GiB requirements

- Need GPU or Graviton CPU requirement

- When you need to use the linuxParameters parameter field

- When you have a large number of jobs, best to run on EC2 because jobs are dispatched at a higher rate than Fargate


- Batch vs Lambda

- Lambda has a 15 minute execution time limit, batch does not

- Lambda has limited disk space

- Lambda has limited runtimes, batch uses docker so any runtime can be used


- Amazon MQ

- Managed message broker service allowing easier migration of existing applications to the AWS Cloud

- Makes it easy for users to migrate to a message broker in the cloud from an existing application

- Can use a variety of programming languages, OS’s, and messaging protocols

- MQ Engine types:

- Currently supports both Apache ActiveMQ or RabbitMQ engine types

- SNS with SQS vs AmazonMQ

- Both have topics and queues

- Both allow for one-to-one or one-to-many messaging designs

- MQ is easy application migration: so if you are migrating an existing application, likely want MQ

- If you are starting with new Application - easier and better to use SNS with SQS

- AmazonMQ requires that you have private networking like VPC, Direct Connect, or VPN while SNS and SQS are publicly accessible by default

- MQ has NO default AWS integrations and does not integrate as easily with other services


- Configuring Brokers

- Single_Instance Broker

- One broker lives within one AZ

- RabbitMQ has a network load balancer in front in a single instance broker environment

- MQ Brokers

- Offers HA architectures to minimize downtime during maintenance

- Architecture depends on broker engine type

- AmazonMQ for Apache ActiveMQ

- With active/standby deployments, one instance will remain available at all times

- Configure network of brokers with separate maintenance windows

- AmazonMQ for RabbitMQ

- Cluster deployment are logical groupings of three broker nodes across multiple AZs sitting behind a Network LB

- MQ is good for specific messaging protocols: JMS or messaging protocols like AMQP 0-9-1, AMQP 1.0, MQTT, OpenWire, and STOMP


- AWS Step Functions

- A serverless orchestration service combining different AWS services for business applications

- Provides a graphical console for easier application workflow views and flows

- Components

- State Machine: a particular workflow with different event-driven steps

- Tasks: specific states within a workflow (state machine) representing a single unit of work

- States: every single step within a workflow = a state

- Two different types of workflows with Step Functions:

- Standard

- Exactly-once execution

- Can run for up to 1 year

- Useful for long-running workflows that need to have auditable history

- Rates up to 2000 executions/sec

- Pricing based per state transition

- Express

- Have an ‘at-least-once’ execution → means possible duplication you have to handle

- Only run for up to 5 minutes

- Useful for high-event-rate workloads

- Use Case: IoT data streaming

- Pricing based on number of executions, durations, and memory consumed


- States and State Machines

- Individual states are flexible

- Leverage states to either make decisions based on input, perform certain actions, or pass input

- Amazon States Language (ASL)

- States and workflows are defined in ASL

- States are elements within your state machines

- States are referred to by name; names are unique within a workflow


- Integrates with Lambda, Batch, Dynamo, SNS, Fargate, API Gateway, etc

- Different States that exist:

- Pass - no work

- Task - single unit of work performed

- Choice - adds branching logic to state machines

- Wait - time delay

- Succeed - stops executions successfully

- Fail - stops executions and mark as failures

- Parallel - runs parallel branches of executions within state machines

- Map - runs a set of steps based on elements of an input array
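A small state machine using a few of the state types listed above might look like this. The workflow itself (state names, the `$.total` input field, the 60-second wait) is hypothetical and built as a Python dict purely for illustration; `undefined_targets` is a helper written for this sketch, not part of any AWS SDK.

```python
import json

# Hypothetical workflow: orders over 100 wait for review, everything else is approved.
state_machine = {
    "Comment": "Hypothetical order workflow used only for illustration",
    "StartAt": "CheckOrderTotal",
    "States": {
        "CheckOrderTotal": {
            "Type": "Choice",  # branching logic
            "Choices": [
                {"Variable": "$.total", "NumericGreaterThan": 100, "Next": "WaitForReview"}
            ],
            "Default": "Approve",
        },
        "WaitForReview": {"Type": "Wait", "Seconds": 60, "Next": "Approve"},  # time delay
        "Approve": {"Type": "Pass", "Next": "Done"},                          # passes input through
        "Done": {"Type": "Succeed"},                                          # stops successfully
    },
}


def undefined_targets(definition: dict) -> set:
    """State names referenced by StartAt/Next/Default that are not defined."""
    states = definition["States"]
    referenced = {definition["StartAt"]}
    for state in states.values():
        referenced.update(state[key] for key in ("Next", "Default") if key in state)
        referenced.update(choice["Next"] for choice in state.get("Choices", []))
    return referenced - set(states)


asl_json = json.dumps(state_machine, indent=2)  # the JSON text form of the definition
```

Because states are referred to by name, a typo in a `Next` field is a common mistake; the helper returns any such dangling names.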

- Amazon AppFlow

- Fully managed service that allows us to securely exchange data between a SaaS App and AWS

- Ex: Salesforce migrating data to S3

- Entire purpose is to ingest data

- Pulls data records from third-party SaaS vendors and stores them in S3

- Bi-directional: allows for bi-directional data transfers with some combinations of source and destination

- Concepts:

- Flow: transfer data between sources and destinations

- Data Mapping: determines how your source data is stored within your destination

- Filters: criteria set to control which data is transferred

- Trigger: determines how the flow is started

- Multiple options/supported types:

- Run on demand

- Run on event

- Run on schedule


- Redshift Databases

- Fully managed, petabyte-scale data warehouse service in the cloud

- Very large relational db traditionally used in big data

- Because it is relational, you can use standard SQL and BI tools to interact with it

- Best use is for BI applications

- Can store massive amounts of data - up to 16 PB of data

- Means you do not have to split up your large datasets

- Not a replacement for a traditional RDS - it would fall apart as the backend of your web app, for example

- Elastic Map Reduce (EMR)

- ETL tool

- Managed big data platform that allows you to process vast amounts of data using open-source tools, such as Spark, Hive, HBase, Flink, Hudi, and Presto

- Quickly use open source tools and get them running in our environment

- For this exam, EMR will be run on EC2 instances and you pick the open-source tool for AWS to manage on them

- Open-source cluster, managed fleet of EC2 instances running open-source tools

- Amazon Kinesis

- Allows you to ingest, process, and analyze real-time streaming data

- 2 forms of Kinesis:

- Data Streams

- Real-time streaming for ingesting data

- You are responsible for creating the consumer and scaling the stream

- Process for Kinesis Data Streams:

- Producers creating data

- Connect producers to Data Stream

- Decide how many shards you are going to create

- Shards can only handle a certain amount of data

- Consumer takes data in, processes it, and puts it into endpoints

- You have to create the consumer

- Endpoint could be S3, Dynamo, Redshift, EMR, …

- You have to use the Kinesis SDK to build the consumer application

- Handle scaling by adjusting the number of shards
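
Since each shard has fixed capacity, sizing a stream is simple arithmetic. A rough sketch, assuming the commonly documented per-shard limits for provisioned streams (1 MB/s or 1,000 records/s for writes, 2 MB/s for reads); check the current quotas before relying on these numbers, and note that the function name and parameters are just for illustration:

```python
import math

# Assumed per-shard limits; verify against current Kinesis quotas.
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def shards_needed(write_mb_per_sec, records_per_sec, read_mb_per_sec):
    """Smallest shard count that satisfies the write, record-rate, and read limits."""
    return max(
        1,
        math.ceil(write_mb_per_sec / WRITE_MB_PER_SHARD),
        math.ceil(records_per_sec / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mb_per_sec / READ_MB_PER_SHARD),
    )


# Bandwidth-bound workload: 4.5 MB/s in, 3,000 records/s, 9 MB/s out
bandwidth_bound = shards_needed(4.5, 3000, 9.0)

# Record-rate-bound workload: many tiny records
record_bound = shards_needed(0.2, 2500, 0.4)
```

Whichever limit is hit first drives the shard count, which is why a stream of many small records can need more shards than its byte volume suggests.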

- Data Firehose

- Data transfer tool to get info into S3, Redshift, ElasticSearch, or Splunk

- Near-real-time

- Plug and play with AWS architecture

- Process for Kinesis Data Firehose:

- Limited set of supported endpoints: ElasticSearch Service, S3, and Redshift; some 3rd party endpoints are supported as well

- Place Data Firehose in between input and endpoint

- Handles the scaling and the building out of the consumer

- Kinesis Data Analytics

- Paired with Data Stream/Firehose, it does the analysis using standard SQL

- Makes it easy to tie Data Analytics into your pipeline

- Data comes in with Streams/Firehose and Data Analytics can transform/sanitize/format data in real-time as it gets pushed through

- Serverless, fully managed, auto scaling

- Kinesis vs SQS

- SQS does NOT provide real-time message delivery

- Kinesis DOES provide real-time message delivery

- Amazon Athena

- An interactive query service that makes it easy to analyze data in S3 using SQL

- Allows you to directly query data in your S3 buckets without loading it into a database

- “Serverless SQL”

- Can use Athena to query logs stored in S3

- AWS Glue

- A serverless data integration service that makes it easy to discover, prepare, and combine data

- Allows you to perform ETL workloads without managing underlying servers

- Effectively replaces EMR - and with Glue, you don't have to spin up EC2 instances or use 3rd party tools to ETL

- Using Athena and Glue together:

- AWS S3 data is unstructured, unformatted – deploy Glue Crawlers to build a catalog/structure for that data

- Glue produces Data Catalog

- After glue, we have some options:

- Can use Amazon Redshift Spectrum - allows us to use Redshift without having to load data into Redshift db

- Athena - use to query the data catalog, and can even use Quicksight to visualize data

- Amazon QuickSight

- Amazon's version of Tableau

- Fully managed BI data visualization service, easily create dashboards

- AWS Data Pipeline

- A managed ETL service for automating management and transformation of your data, automatic retries for data-driven workflow

- Data driven web-service that allows you to define data-driven workflows

- Steps are dependent on previous tasks completing successfully

- Define parameters for data transformations - enforces your chosen logic

- Auto retries failed attempts

- Configure notifications via SNS

- Integrates easily with Dynamo, Redshift, RDS, S3 for data storage, and integrates with EC2 and EMR for compute needs

- Components:

- Pipeline Definition = specify the logic of your data management

- Managed Compute = service will create EC2 instances to perform your activities or leverage existing EC2

- Task Runners = (EC2) poll for different tasks and perform them when found

- Data Nodes = define the locations and types of data that will be input and output

- Activities = pipeline components that define the work to perform

- Use Cases:

- Processing data in EMR using Hadoop streaming

- Importing or exporting DynamoDB data

- Copying CSV files or data between S3 buckets

- Exporting RDS data to S3

- Copying data to Redshift
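
The two behaviors noted above (each step depends on the previous one succeeding, and failed attempts are retried automatically) can be sketched in a few lines. This is a plain-Python illustration of the idea, not the Data Pipeline API; the activity names and the retry count of 3 are assumptions:

```python
from typing import Callable, List, Tuple


def run_pipeline(
    activities: List[Tuple[str, Callable[[], None]]], max_attempts: int = 3
) -> List[str]:
    """Run activities in order, retrying each; stop at the first one that never succeeds."""
    completed = []
    for name, activity in activities:
        for attempt in range(1, max_attempts + 1):
            try:
                activity()
                completed.append(name)
                break  # success, move on to the next activity
            except Exception:
                if attempt == max_attempts:
                    # Later steps depend on this one, so halt the pipeline here.
                    return completed
    return completed


attempts = {"export": 0}


def export_rds():
    """Hypothetical activity that fails once with a transient error, then succeeds."""
    attempts["export"] += 1
    if attempts["export"] < 2:
        raise RuntimeError("transient failure")


def copy_to_redshift():
    """Hypothetical activity that always succeeds."""


def always_fails():
    raise RuntimeError("permanent failure")


ok = run_pipeline([("export", export_rds), ("copy", copy_to_redshift)])
halted = run_pipeline([("bad", always_fails), ("copy", copy_to_redshift)])
```

In the first run the export succeeds on its second attempt and the copy then runs; in the second run the failing step exhausts its retries, so the dependent copy never starts.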

- Amazon Managed Streaming for Apache Kafka (Amazon MSK)

- Fully managed service for running data streaming apps that leverage Apache Kafka

- Provides control-plane operations; creates, updates, and deletes clusters as required

- Can leverage the Kafka data-plane operations for producing and consuming streaming data

- Good for existing operations; allows support for existing apps, tools, and plugins

- Components:

- Broker Nodes

- Specify the number of broker nodes per AZ you want at time of cluster creation

- Zookeeper Nodes

- Created for you

- Producers, Consumers, and Topics

- Kafka data-plane operations allow creation of topics and ability to produce/consume data

- Flexible Cluster Operations

- Perform cluster operations with the console, AWS CLI, or APIs within any SDK

- Resiliency in Amazon MSK:

- Auto Recovery

- Detected broker failures result in mitigation or replacement of unhealthy nodes

- Tries to reuse the storage from the older broker during failures to reduce the data needing replication

- Impact time is limited to however long it takes MSK to complete detection and recovery

- After successful recovery, producer and consumer apps continue to communicate with the same IP as before

- Features:

- MSK Serverless

- Cluster type within Amazon MSK offering serverless cluster management - auto provisioning and scaling

- Fully compatible with Apache Kafka - use the same client apps for producing and consuming data

- MSK Connect

- Allows developers to easily stream data to and from Apache Kafka clusters

- Security

- Integrates with AWS KMS for SSE requirements

- Will always encrypt data at rest by default

- TLS 1.2 by default in transit between brokers in clusters

- Logging

- Broker logs can be delivered to services like CloudWatch, S3, Data Firehose

- By default, metrics are gathered and sent to CloudWatch

- MSK API calls are logged to CloudTrail

- Amazon OpenSearch Service

- Managed service allowing you to run search and analytics engines for various use cases

- It is the successor to Amazon ElasticSearch Service

- Features:

- Allows you to perform quick analysis - quickly ingest, search, and analyze data in your clusters - commonly a part of an ETL process

- Easily scale cluster infrastructure running the OpenSearch services

- Security: leverage IAM for access control, VPC security groups, encryption at rest and in transit, and field-level security

- Multi-AZ capable service with Master nodes and automated snapshots

- Allows for SQL support for BI apps

- Integrates with CloudWatch, CloudTrail, S3, Kinesis - can set log streams to OpenSearch Service

- Logging solution involving creating visualization of log file analytics or BI tools/imports

- Serverless Overview

- Benefits

- Ease of use: we bring code, AWS handles everything else

- Event-Based: can be brought online in response to an event then go back offline

- True “pay for what you use” architecture: pay for provisioned resources and the length of runtime

- Example serverless services: Lambda, Fargate

- Lambda

- Serverless compute service that lets you run code without provisioning or managing the underlying server

- How to build a lambda function:

- Runtime selection: pick from an available run-time or bring your own. This is the environment your code will run in

- Set permissions: if your lambda function needs to make an API call in AWS, you need to attach a role