The Field of Clinical Psychology - Chapter 2

Principles of Research:

What elements are most important to make sure that psychological research leads to new knowledge?

Evidence-Based Practice

Quantitative and Qualitative Methods

The Scientific Part of a Theory

Parsimony

Integrating quantitative and qualitative methods provides a more comprehensive understanding of psychological phenomena. Combining them lets researchers explore complex behaviors and experiences from multiple perspectives, strengthens the validity and applicability of their findings, and offsets the limitations of relying on a single methodological approach.

Falsifiability:

The principle that a theory must be testable and open to being disproven in order to count as scientific. A falsifiable theory makes predictions that could turn out to be wrong, which keeps psychological theories subject to rigorous scrutiny.

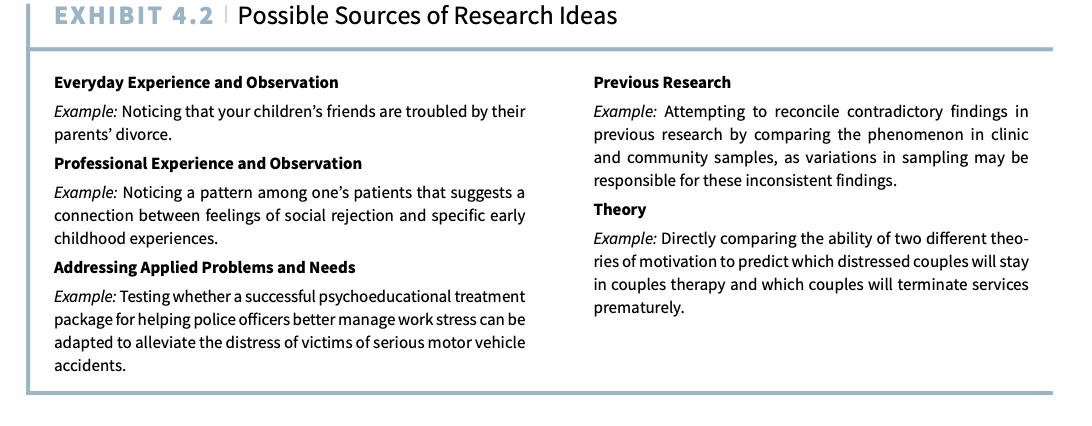

Planning Research

Inductive Reasoning

Definition: Inductive reasoning involves deriving general principles from specific observations.

Example in Research: A researcher notices that patients with high anxiety tend to struggle more in social interactions. From repeated observations, they formulate a general hypothesis that increased anxiety leads to more social errors.

Key Point: Inductive reasoning is not explicitly guided by a formal theory but is influenced by the researcher’s informal beliefs and prior experiences.

Excerpt from the text:

"In other instances, the researcher follows an inductive process—for example, deriving an idea from repeated observations of everyday events. Even though the inductive process is not explicitly guided by theory, it is influenced by the researcher’s informal theories, including his or her theoretical orientation and general world view."

Deductive Reasoning

Definition: Deductive reasoning starts with a general theory or principle and tests specific predictions based on it.

Example in Research: A psychologist working from Cognitive Load Theory might predict that increased anxiety impairs working memory, leading to more social interaction errors. They design a study to test this prediction.

Key Point: Deductive reasoning moves from broad theories to specific testable hypotheses.

Excerpt from the text:

"In some instances, the researcher uses a formal theory to generate a research idea. This is known as following a deductive process."

Key Difference

| Reasoning Type | Process | Example in Research |
|---|---|---|
| Inductive | Observation → Pattern → Hypothesis → Theory | Noticing that anxious individuals make more social errors and developing a hypothesis based on repeated observations. |
| Deductive | Theory → Hypothesis → Experiment → Confirmation or Rejection | Using a psychological theory to predict a relationship (e.g., anxiety affects social skills) and testing it in an experiment. |

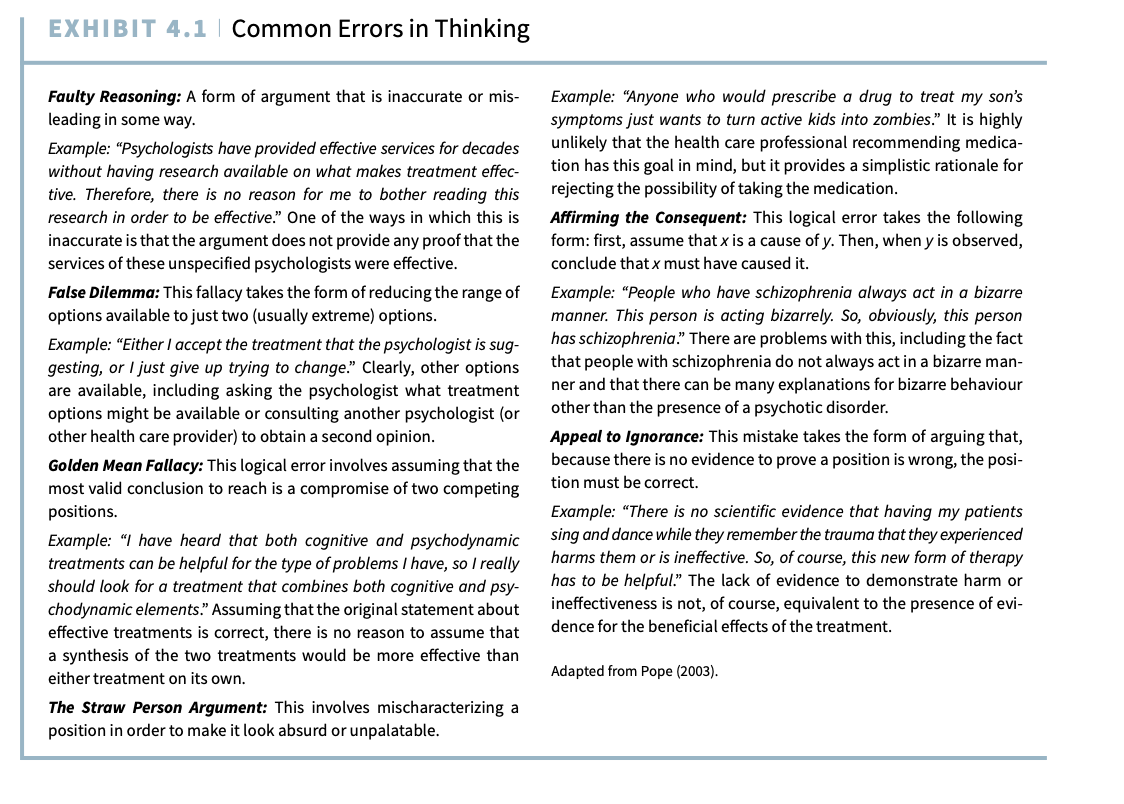

Thinking Errors

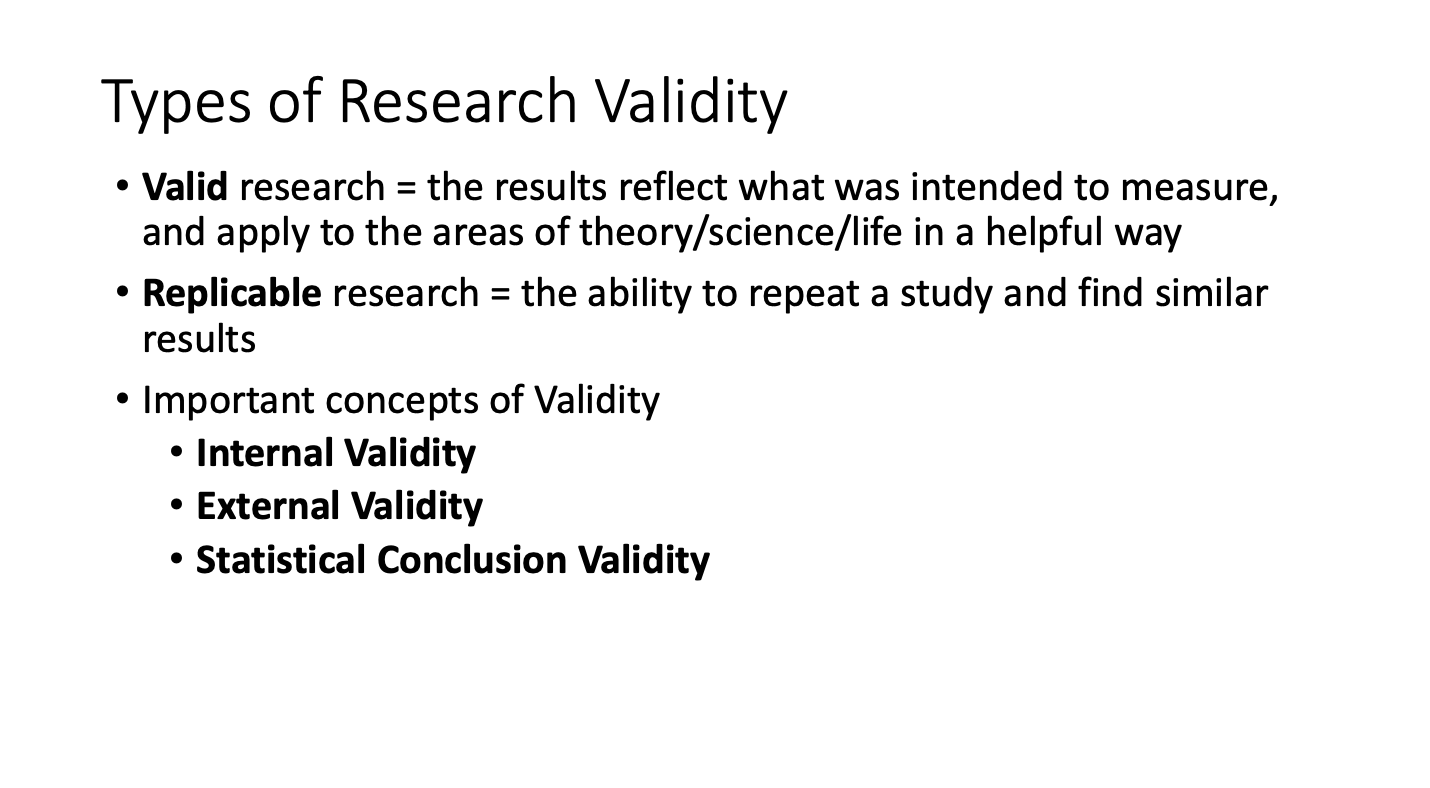

Types of Research Validity

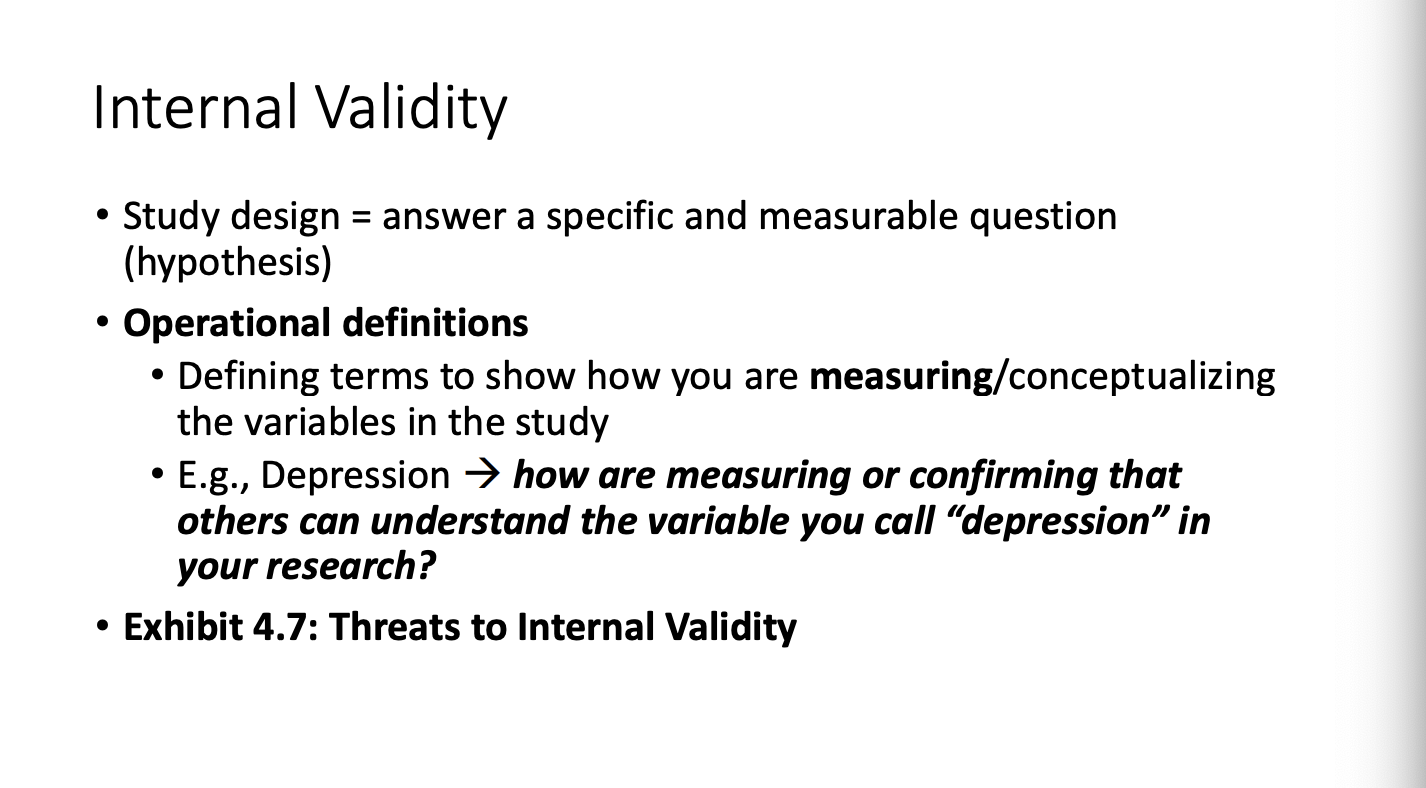

Internal Validity

Definition: The extent to which a study establishes a cause-and-effect relationship between variables.

Key Question: Does the independent variable (IV) really cause changes in the dependent variable (DV), or could other factors explain the results?

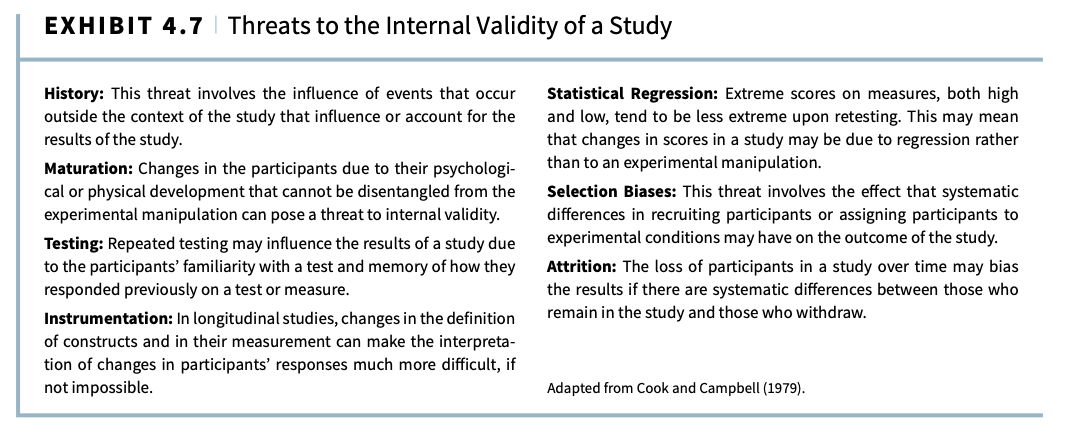

Threats to Internal Validity:

Confounding variables (other factors influencing the DV)

Selection bias (non-random assignment of participants)

History effects (outside events affecting results)

Maturation effects (natural changes in participants over time)

Example: A study finds that a new therapy reduces anxiety. If the study lacks a control group, we cannot be sure whether the therapy caused the improvement or whether the improvement reflected placebo effects or participants naturally feeling better over time.
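To see why the missing control group matters, here is a minimal Python sketch of the comparison a controlled study makes possible. All scores are hypothetical, invented for illustration, and the sketch assumes participants were randomly assigned so the two groups are comparable. The treatment effect is estimated as the therapy group's average pre-to-post change minus the control group's change, which subtracts out improvement both groups share (for example, natural recovery over time).

```python
from statistics import mean


def mean_change(pre, post):
    """Average pre-to-post change in anxiety scores (negative = improvement)."""
    return mean(after - before for before, after in zip(pre, post))


# Hypothetical anxiety scores (higher = more anxious); not real data.
therapy_pre = [30, 28, 35, 32]
therapy_post = [20, 19, 24, 21]
control_pre = [31, 29, 34, 33]
control_post = [27, 25, 30, 29]

therapy_change = mean_change(therapy_pre, therapy_post)
control_change = mean_change(control_pre, control_post)

# Without a control group, the entire therapy-group change looks like a treatment effect.
naive_effect = therapy_change
# With a control group, change shared by both groups (e.g., maturation) is subtracted out.
adjusted_effect = therapy_change - control_change

print(f"Naive estimate (no control group): {naive_effect:.2f}")
print(f"Estimate relative to control group: {adjusted_effect:.2f}")
```

In this made-up example, part of the therapy group's improvement also appears in the control group, so the estimate relative to control is smaller than the naive one. Real studies typically use more formal analyses, but the logic of comparing against a control group is the same.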

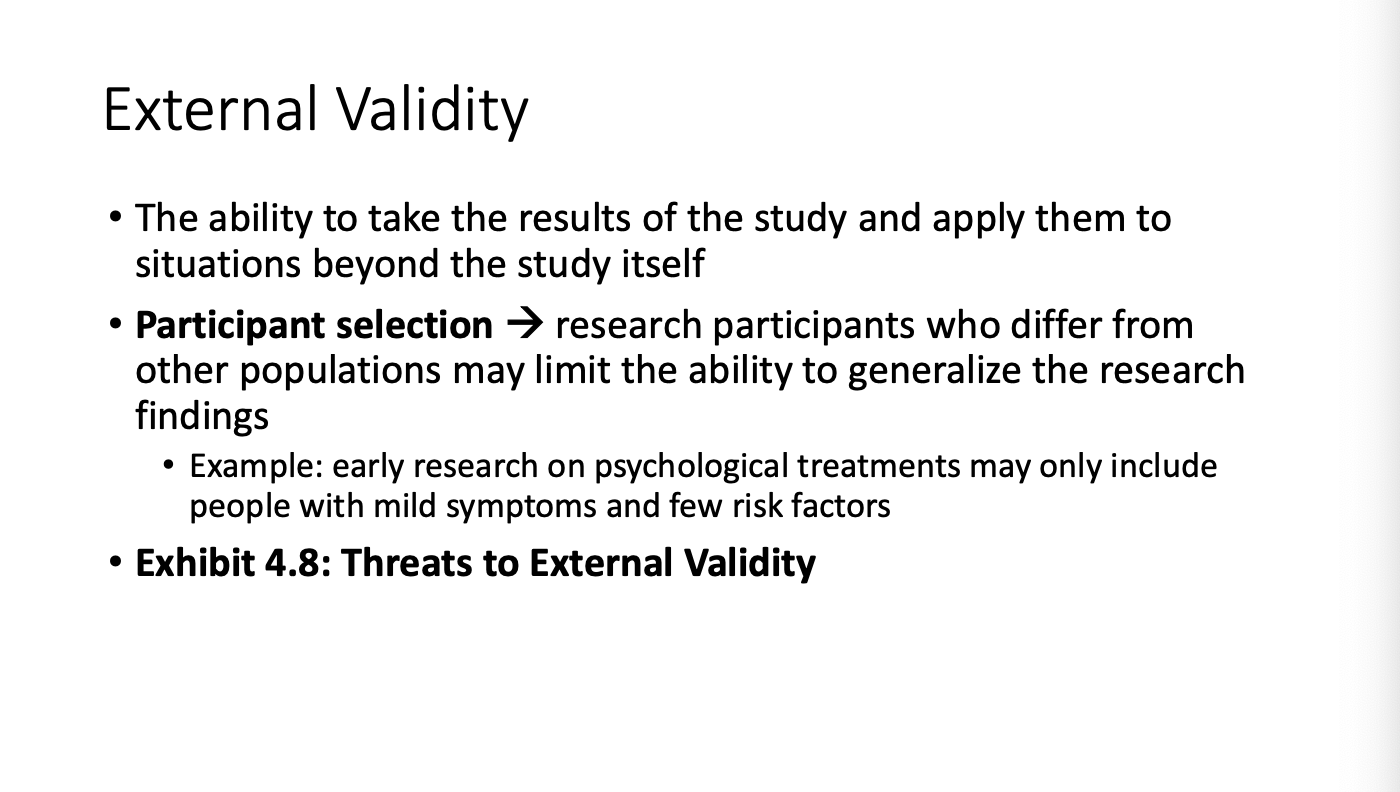

External Validity

Definition: The extent to which the study’s results can be generalized to other people, settings, and situations.

Key Question: Can the findings apply to real-world scenarios beyond the study’s sample?

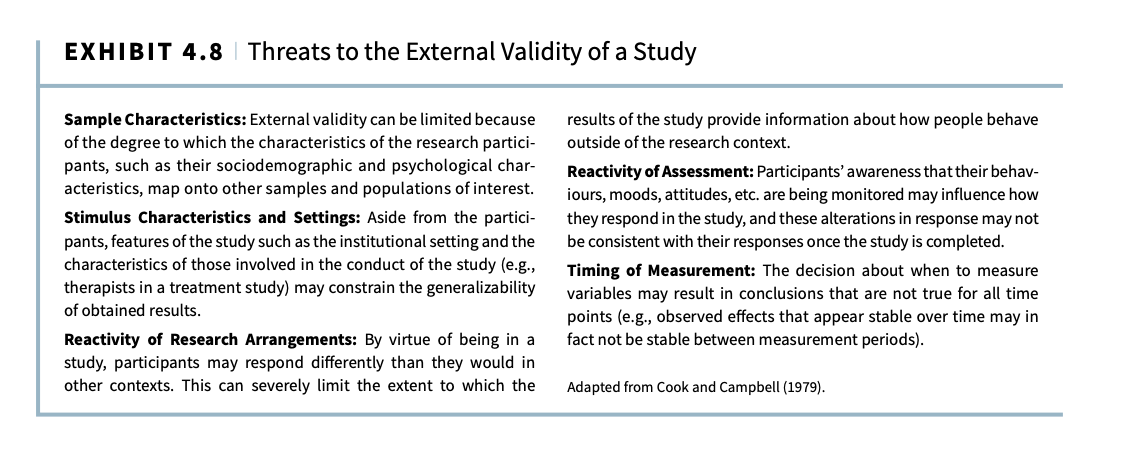

Threats to External Validity:

Sampling bias (participants are not representative of the population)

Artificial setting (e.g., a lab study may not reflect real-life behaviors)

Time period effects (results obtained at one point in time may not hold at other times)

Example: A study on anxiety treatment conducted only on university students may not apply to older adults or different cultures, limiting external validity.

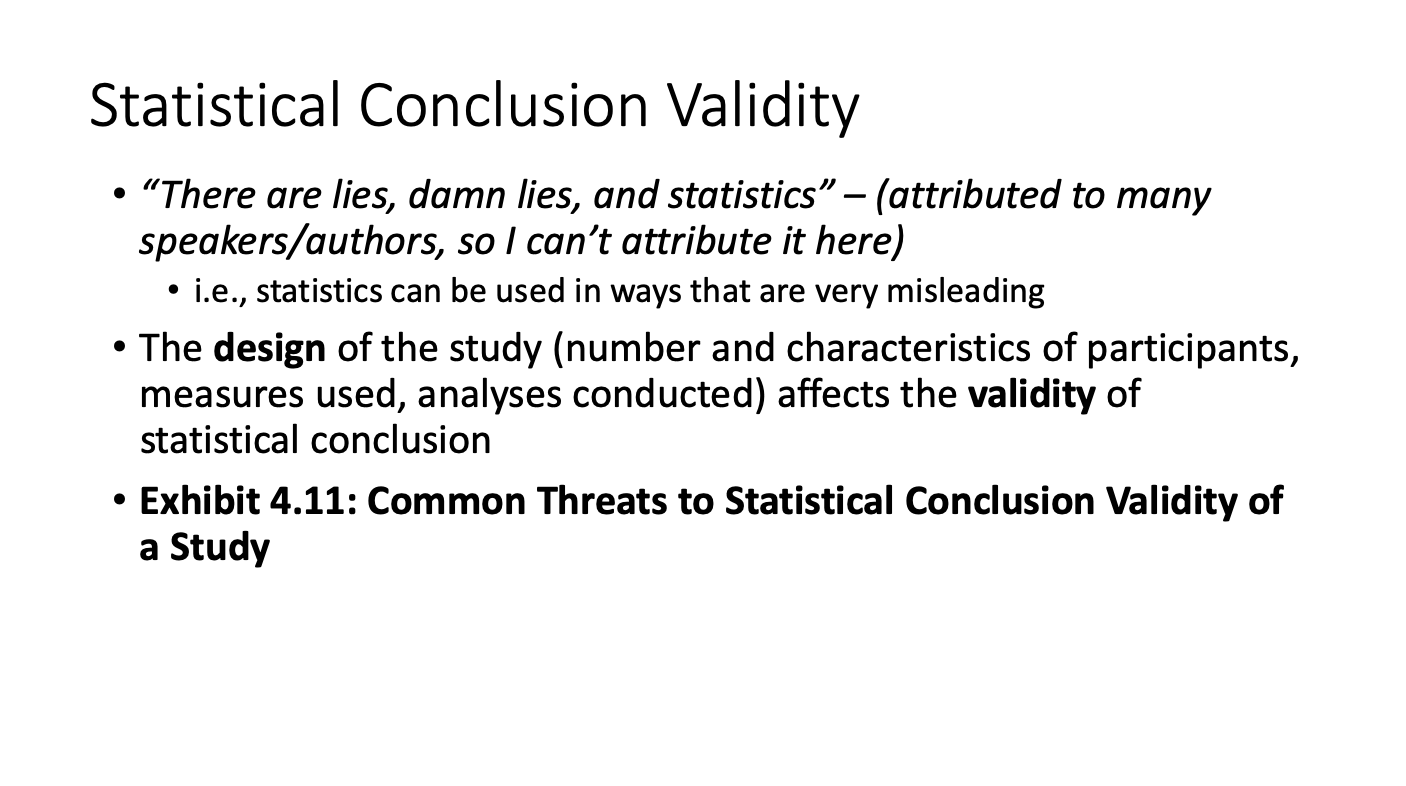

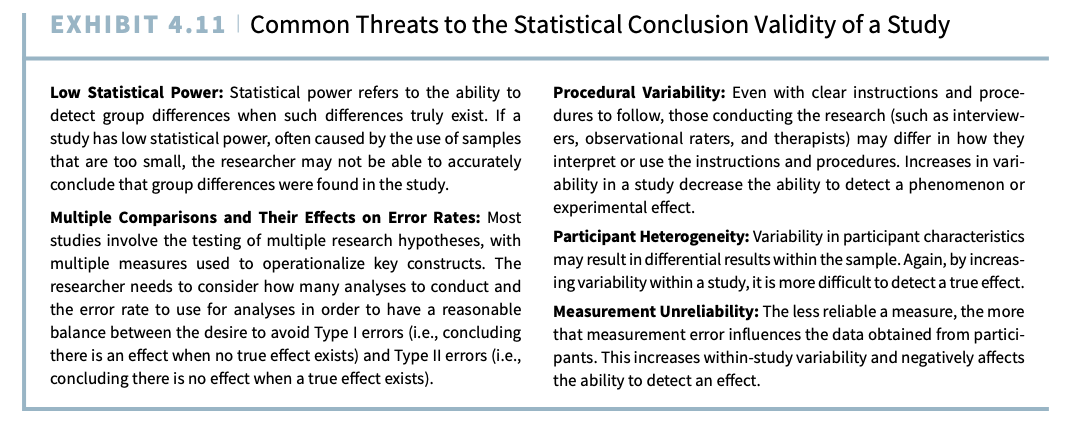

Statistical Conclusion Validity

Definition: The extent to which appropriate statistical analyses support correct conclusions about whether a real relationship exists between variables.

Key Question: Are the results statistically accurate and not due to random chance or measurement error?

Threats to Statistical Conclusion Validity:

Low statistical power (small sample size may lead to missing real effects)

Incorrect statistical tests (e.g., running an independent-samples test on repeated-measures data from the same participants)

Violation of assumptions (e.g., assuming data are normally distributed when they are not)

Example: A study tests whether therapy improves anxiety symptoms. If the sample size is too small, the statistical test may fail to detect a real effect, and the researchers may wrongly conclude that the therapy does not work.
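The small-sample problem can be put in numbers. Below is a minimal Python sketch that assumes a two-group comparison with equal group sizes and uses the standard normal approximation to statistical power (not an exact t-test calculation). The effect size of d = 0.5 in the loop is an illustrative assumption, not a value from the chapter.

```python
from statistics import NormalDist


def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison (normal approximation).

    d: standardized mean difference (Cohen's d) assumed to exist in the population.
    n_per_group: number of participants in each of two equal-sized groups.
    The tiny chance of rejecting in the wrong direction is ignored.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    expected_z = d * (n_per_group / 2) ** 0.5   # expected test statistic if the effect is real
    return std_normal.cdf(expected_z - z_crit)


for n in (10, 30, 64, 100):
    print(f"n = {n:3d} per group -> approximate power {approx_power(0.5, n):.2f}")
```

Under these assumptions, a study with 10 participants per group has only about a 1-in-5 chance of detecting a real medium-sized effect, while roughly 64 per group brings power to about 0.8. An underpowered study can therefore easily "miss" a therapy effect that actually exists.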

Key Differences

| Validity Type | Focus | Key Question |
|---|---|---|
| Internal Validity | Ensuring that the IV truly causes changes in the DV | Does the study eliminate alternative explanations for the results? |
| External Validity | Generalizing results to other populations and settings | Can the study’s findings apply beyond the research setting? |
| Statistical Conclusion Validity | Accuracy of statistical analyses | Are the results statistically sound and reliable? |

Statistical and Clinical Differences

Research Designs

Case Studies

Dr. Paul Meehl

Paul Meehl’s 1954 review revolutionized clinical psychology by challenging the accuracy of traditional clinical assessment and advocating a more statistical approach. His work highlighted key weaknesses in clinically based assessment and demonstrated the advantages of statistical models for making psychological diagnoses and predictions.

Meehl’s Key Arguments

Clinical vs. Statistical Assessment

Clinical assessment relied on interviews, subjective judgment, and sometimes standardized tests to describe and predict behavior.

Statistical assessment used objective data (e.g., demographics, test scores) in mathematical models to generate predictions.

Meehl’s analysis found that statistical methods matched or outperformed clinical judgment in nearly every study he reviewed on diagnosing and predicting behavior.
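To make "mathematical models" concrete, here is a minimal Python sketch of an actuarial rule. The predictors, weights, intercept, and outcome (relapse) are all hypothetical. The logistic form is one common way to combine predictors into a probability and is not a model taken from Meehl’s review. What matters is that the rule applies the same weights to every case, so its predictions are reproducible and can be checked against actual outcomes.

```python
import math

# Hypothetical weights for illustration only; a real actuarial model would
# estimate them from outcome data and then validate them on new cases.
WEIGHTS = {"prior_episodes": 0.6, "symptom_score": 0.04, "age_under_25": 0.5}
INTERCEPT = -3.0


def actuarial_risk(case):
    """Combine the same inputs in the same way for every case (logistic model)."""
    linear = INTERCEPT + sum(weight * case[name] for name, weight in WEIGHTS.items())
    return 1 / (1 + math.exp(-linear))


# A hypothetical client record.
client = {"prior_episodes": 2, "symptom_score": 30, "age_under_25": 1}
print(f"Predicted probability of relapse: {actuarial_risk(client):.2f}")
```

Because the formula never changes from one client to the next, its accuracy can be measured systematically, which is the kind of validation Meehl argued clinical intuition lacked.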

The “Illusion” of Clinical Judgment

Clinicians often believed that their intuition and expertise led to better decisions, but Meehl demonstrated that human judgment is prone to cognitive biases.

He argued that clinical intuition was unreliable because it lacked systematic validation.

Insurance Model Comparison

Meehl likened statistical assessment to how insurance companies calculate risk:

They don’t rely on intuition; they use data and formulas to estimate probabilities.

Psychology, he argued, should follow the same principle by using standardized, empirical methods.

Role of Clinical Experience

Meehl did not dismiss clinical expertise entirely.

He emphasized that clinicians should use their experience to generate hypotheses about clients.

However, once a hypothesis is formed, it should be tested using scientific methods, including psychological assessments with proven reliability and validity.

Meehl’s Lasting Impact on Psychology

His work contributed to the rise of evidence-based assessment and the use of structured diagnostic tools.

He paved the way for actuarial (data-driven) decision-making in clinical psychology.

His findings still influence debates on the role of intuition vs. data in psychology, medicine, and other fields.

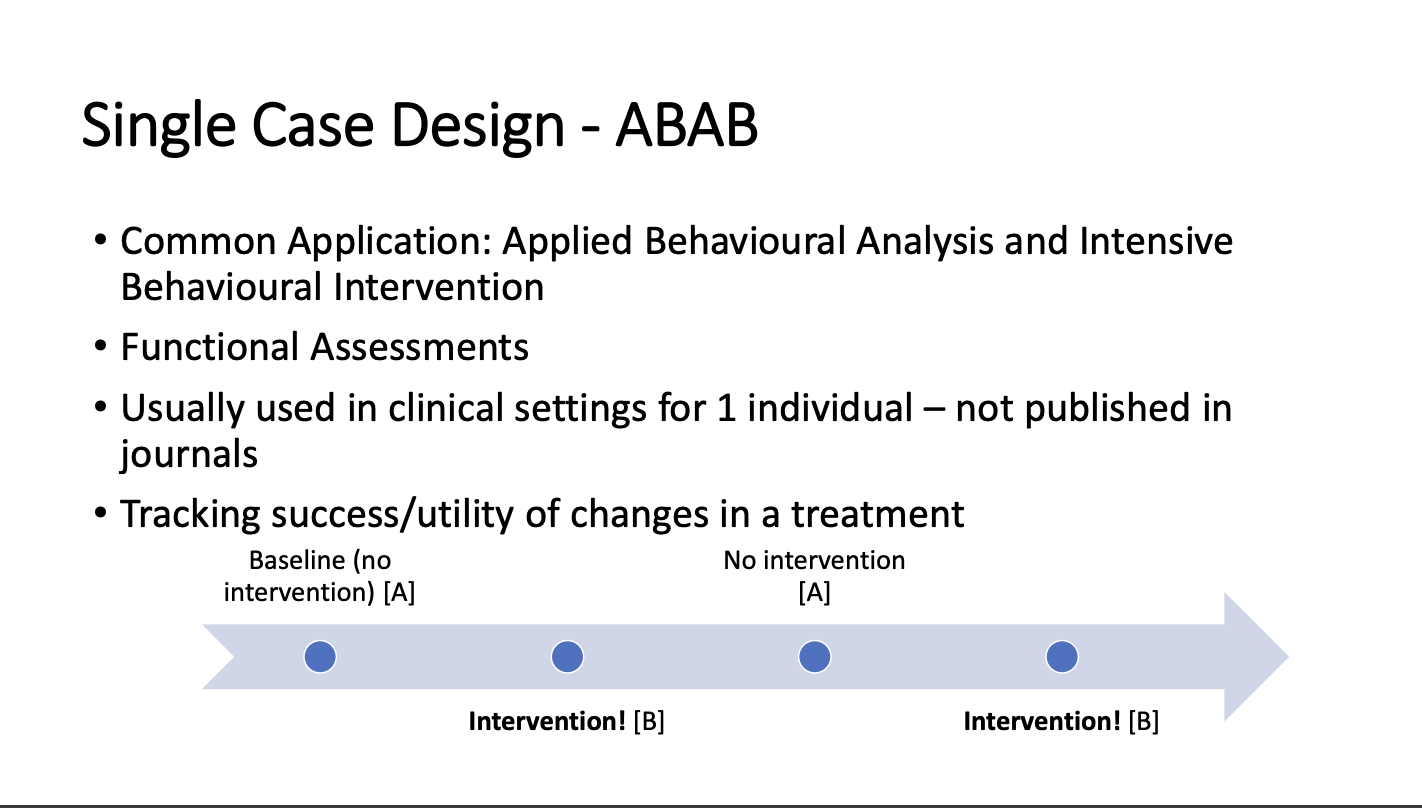

Single Case Design - ABAB

Correlational Design

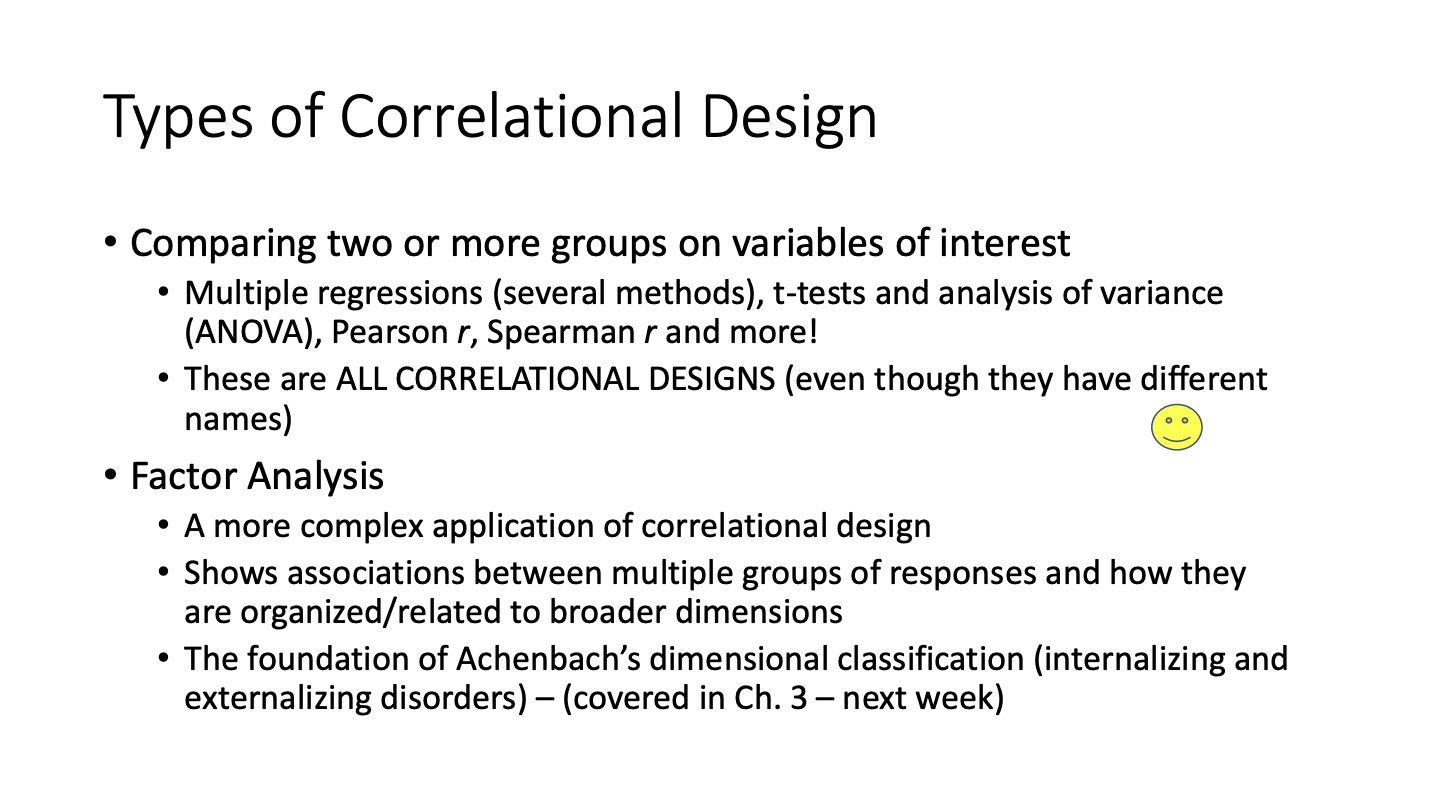

Types of Correlational Design

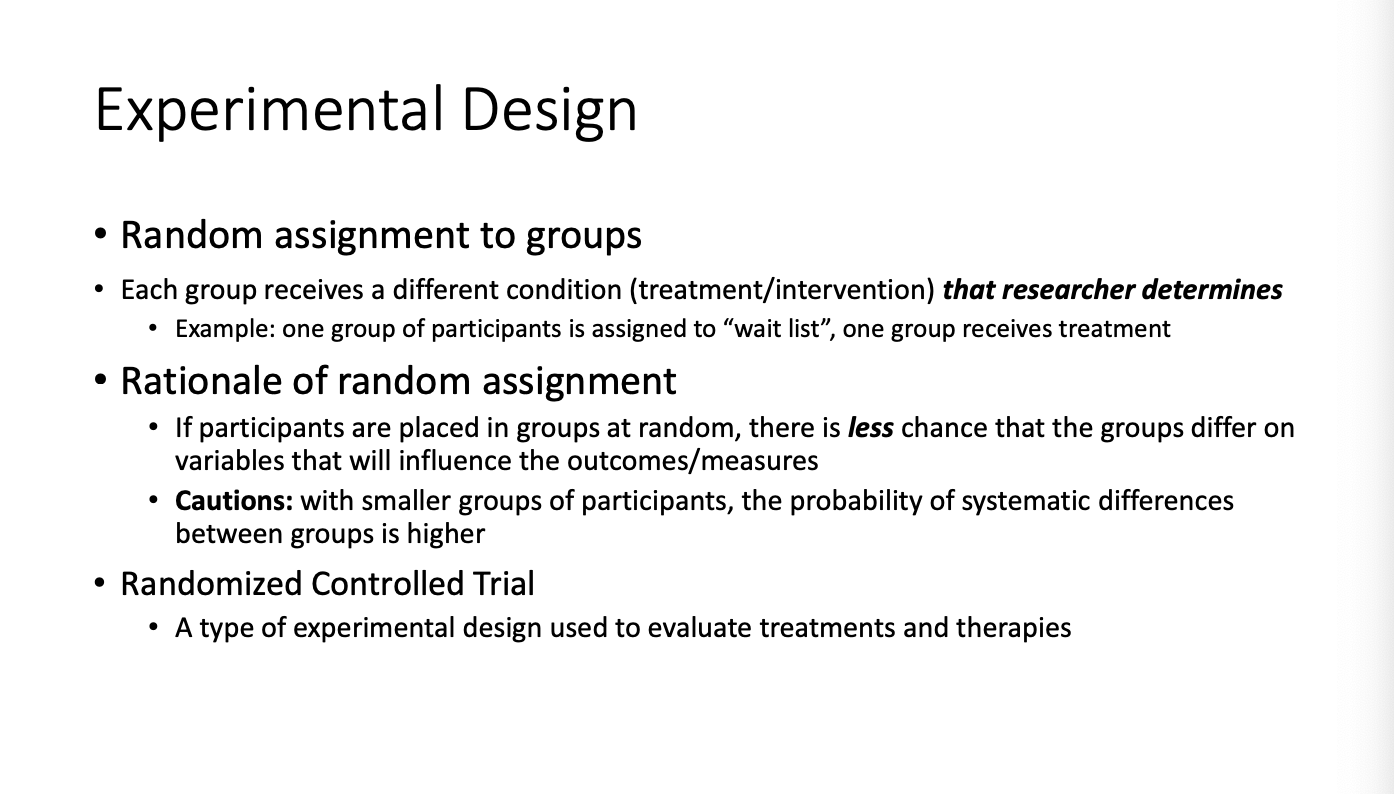

Experimental Design

Bringing Research Together

Ethics: Research and Clinical Practice

Ethical Principles

Research Ethics Board Review

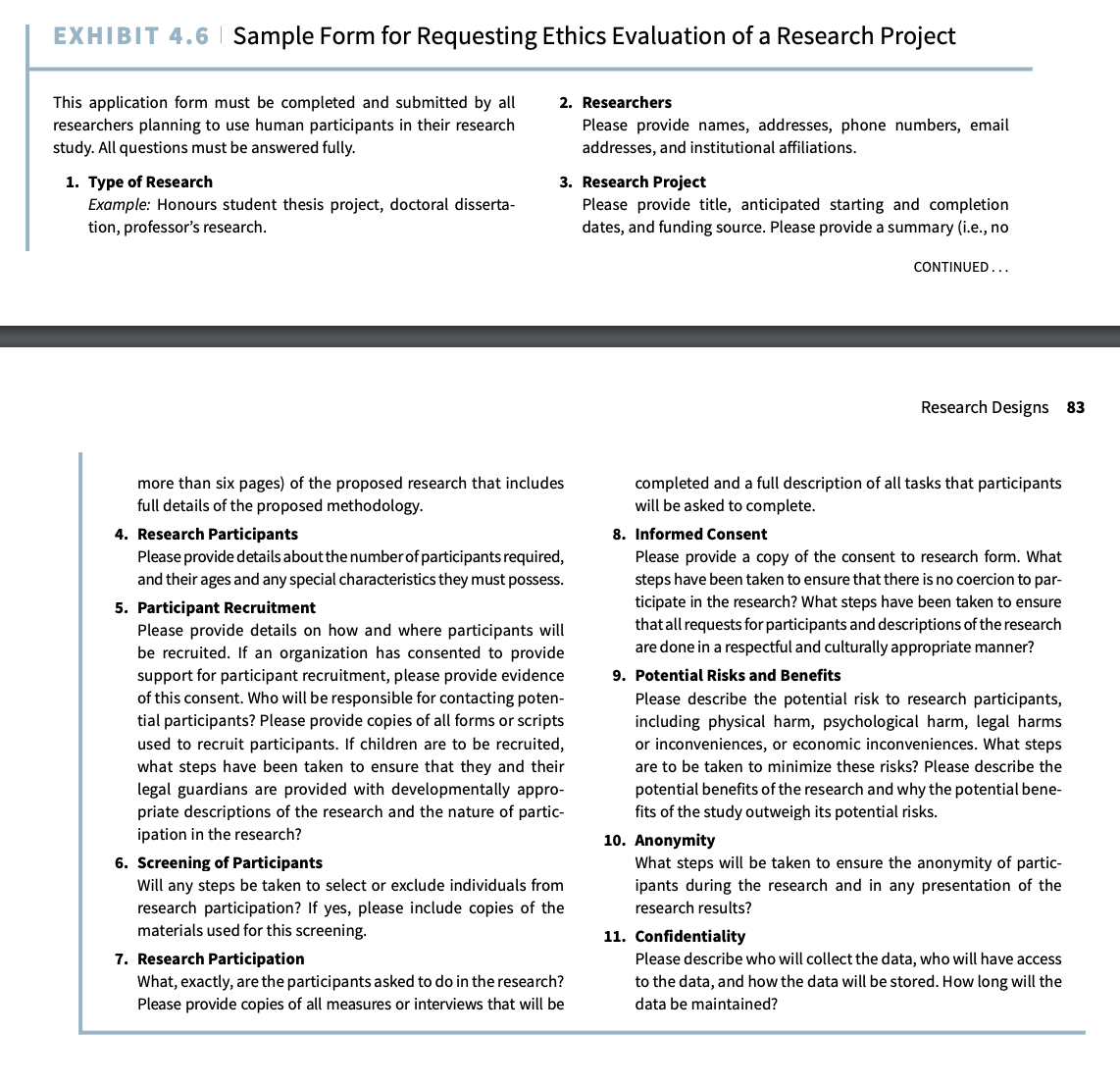

Areas of research ethics review

Other Elements of Research Ethics

Research vs. Clinical Ethics

Guarding against misleading results

Terms

clinical consultation:

the provision of information, advice, and recommendations about how best to assess, understand, or treat a client.

organizational consultation:

services to an organization focused on developing a prevention or intervention program, evaluating how well an organization is doing in providing a health care or related service, or providing an opinion on policies on health care services set by an organization.

practicum:

the initial supervised training in the provision of psychological services that is a requirement of the doctoral degree; usually part-time.

informed consent:

an ethical principle to ensure that the person who is offered services or who participates in research understands what is being done and agrees to participate.

scientist-practitioner model:

a training model that emphasizes competencies in both research and provision of psychological services.

clinical scientist model:

a training model that strongly promotes the development of research skills.

practitioner scholar model:

a training model that emphasizes clinical skills and competencies, with the clinician trained as a consumer of research.

accreditation:

a process designed to ensure that training programs maintain standards that meet the profession’s expectations for the education of clinical psychologists.

licensure:

regulation to ensure minimal requirements for academic and clinical training are met and that practitioners provide ethical and competent services; regulation of the profession helps to ensure the public is protected when receiving services.

Viewpoint Boxes
