IB Digital Society - Paper 1 - Section 3: Content (3.7: Robotics and Autonomous Technologies)

3.7: Robotics and Autonomous Technologies

Key ideas: Robots and autonomous technologies:

Demonstrate a capacity to sense, think and/or act with some degree of independence.

Have evolved over time. They are increasingly ubiquitous, pervasive and woven into everyday lives of peoples and communities.

Introduce significant opportunities and dilemmas in digital society.

Robot: a programmable machine that can complete a set task with little to no human intervention.

3.7A: Types of Robots and Autonomous Technologies

The introduction of robots transformed manufacturing by increasing efficiency, safety and accuracy, although at the cost of threatening jobs.

Robots have evolved over time in two categories: robots used in manufacturing and robots designed for human interaction.

Industrial robots

Demand for robots in the manufacturing sector has existed since the 1960s.

Robots would normally perform tasks such as drilling, painting, welding, assembly and material handling.

Businesses incorporate industrial robots to achieve greater efficiency and accuracy in their production lines.

Industrial robots differ from service robots – they replace human workers on the production line, while service robots assist humans by performing tasks.

Real-World Example: Automation in car production

2014 - Great Wall Motors: A Chinese automotive manufacturer increased its efficiency by having robots collaborate in different parts of production.

For instance, one robot would position a panel and a second one would do the welding.

Foxconn, a Taiwanese electronics company, announced its intention to increase automation by 30%, resulting in the loss of 60,000 jobs.

Service robots

Service Robots: robots designed to assist humans in completing less desirable or dangerous tasks.

These robots can be for either domestic or professional use, such as:

Personal Service Robots: used at home. Robots can be programmed to autonomously complete a task.

Demand for them is growing, as they are more affordable and because they can assist elderly people.

Example: Robot Vacuum - it is programmed to clean the house, and some models even have a self-emptying bin, which makes them more autonomous.

Virtual Personal Assistants: voice-controlled helpers which are typically found in smart speakers or mobile phones.

Once connected, a user can command different activities like providing weather updates or setting a timer.

Examples: Google Home, Amazon Echo, Apple HomePod

Real-World Example: Virtual Assistants - Hampshire, UK (2018)

The Hampshire County Council trialed the use of virtual assistants to help carers for elderly people and people with disabilities.

The use of virtual assistants was beneficial to users, especially those with limited mobility, and enabled carers to focus on other tasks.

Professional Service Robot: semi-autonomous or fully autonomous robots which assist humans in commercial settings.

Most of these robots have some mobility and can interact with people.

They are used in a range of industries, such as retail and hospitality, but can also perform harsh work in challenging environments like space, defense and agriculture.

A benefit of using service robots is that they can handle dangerous and repetitive tasks efficiently with little downtime.

Examples: Robots which make deliveries, assist in surgery.

Real-World Example: Flippy 2 (2017)

CaliBurger, an American fast-food chain, introduced Flippy, a ‘grill chef’ robot, to counter staffing problems and assist in the kitchen.

Flippy 2, the second generation of the robot, expanded its tasks and features to incorporate an integrated kitchen ticket system. Additionally, it used sensors and machine learning to teach itself how to fry food.

Social robots

Robots developed to interact and communicate with humans in a friendly manner.

These types of robots are in increasing demand in the workplace, to assist in customer service, and at home, to be companions for elderly people.

The main drawbacks are the robot’s lack of empathy or emotion, its unexpected responses to unknown situations and the potential threat of it becoming fully autonomous.

Social robots differ from service robots, which are mainly used to perform undesired (either dangerous or unpleasant) tasks.

Real-World Example: Jibo

A social robot designed for the home environment, to provide friendship and companionship through facial and voice recognition.

Real-World Example: Aerobot

A robot assistant at Istanbul Sabiha Gökçen International Airport which reminds passengers of health policies, answers inquiries and guides passengers to boarding gates.

Passengers can receive a more personalized experience, since the robot can communicate in 20 languages and uses AI to identify approaching passengers to tailor its conversations.

Internet of Things (IoT)

Businesses previously used earlier forms of IoT, such as radio-frequency identification (RFID) tags, to track delivery orders.

IoT uses have expanded, with the top five industrial uses being:

Predictive maintenance: use of wireless IoT to alert employees when a machine needs maintenance.

Location tracking: use of wireless technology such as GPS or RFID to track stock, components and/or equipment.

Since data is obtained in real-time, it allows employees to find items faster.

Workplace analytics: use of data analytics software to provide information to optimize operations and increase efficiency.

Remote quality monitoring: use of IoT devices to monitor quality of resources and products.

For instance, an organization can examine water or air quality and offer a faster response to potential pollution.

Energy optimization: use of IoT devices to measure energy consumption.

This technology is normally seen in manufacturing. It can warn of excessive consumption so that employees can adjust equipment to use less electricity.
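The energy optimization idea above can be illustrated with a minimal sketch. It assumes a device that reports hourly readings in kilowatt-hours and a fixed limit chosen by the business; the function name, the readings and the limit are all hypothetical and only show the principle of flagging excessive consumption.

```python
def excessive_consumption(readings_kwh, limit_kwh):
    """Return the positions (hours) of readings that exceed the allowed limit."""
    return [hour for hour, value in enumerate(readings_kwh) if value > limit_kwh]


# Hypothetical hourly readings from one machine, in kilowatt-hours.
hourly_readings = [4.2, 4.5, 7.9, 4.1, 8.3]

# Hypothetical limit: anything above 6.0 kWh in one hour triggers a warning.
for hour in excessive_consumption(hourly_readings, limit_kwh=6.0):
    print(f"Hour {hour}: {hourly_readings[hour]} kWh exceeds the limit")
```

A real system would more likely compare readings against a learned baseline than a fixed number, but the warning step works in the same way.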

Autonomous vehicles (AV)

Autonomous vehicle: a vehicle that has the ability to operate without human intervention.

There are different levels of autonomy.

Levels 0 - 3 are designed to assist a driver and show low levels of autonomy. Levels 4 to 5, on the other hand, do not require a driver.

Levels of autonomy:

Level 0: A traditional car which may have features such as cruise control for long-distance driving or warning signals when reversing. It has zero automation.

Level 1 - Driver Assistance: Has features such as adaptive cruise control, to keep a safe distance between cars, and lane-keeping assistance, to prevent the vehicle from veering out of its lane. The majority of newer cars have these features.

Level 2 - Partial Automation: Enhanced assistance to drivers by having features such as controlling steering and speed. It is similar to autopilot but requires drivers to be aware of when they need to take control.

Real-World Example: Mercedes-Benz PARKTRONIC system

Mercedes-Benz launched a self-parking car using its PARKTRONIC system, which uses a range of sensors to determine whether the car can fit into a spot and then steers the car automatically.

Drivers still need to control brakes and gears.

Level 3 - Conditional Automation: vehicles that can drive themselves under certain conditions but still need drivers to be behind the wheel.

Example: a vehicle driving automatically in a traffic jam.

Level 4 - High Automation: vehicles with no human driver, steering wheels or pedals and that are available for public use.

Such vehicles would only operate under certain conditions; for instance, they may only work in certain weather conditions.

Trialed services include driverless taxis and public transport, cases in which a vehicle can be programmed to travel between two points.

Real-World Example: Driverless Taxis - Singapore (2016)

Singapore trialed driverless taxis in 2016 in 12 locations, and the service was planned to be widely available in 2018. Users needed to call the taxi through a smartphone app.

However, the service was limited to 10 people and was only available during non-peak hours.

Level 5 - Full Automation: has no restriction and would be fully responsive to real-time conditions such as weather and aware of other vehicles. Additionally, the vehicle could travel to any geographical location, without the need to program it.

This level has not been achieved but would be the highest level of automation.
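The six levels above can be summarized in a short sketch. The class and function names are illustrative assumptions, not part of any official standard; the code only encodes the rule stated in these notes that levels 0 to 3 assist a driver while levels 4 and 5 do not require one.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Levels of vehicle autonomy as listed in these notes."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def requires_driver(level):
    """Levels 0-3 assist a human driver; levels 4-5 do not need one."""
    return level <= AutonomyLevel.CONDITIONAL_AUTOMATION


for level in AutonomyLevel:
    status = "driver required" if requires_driver(level) else "no driver required"
    print(f"Level {level.value} ({level.name}): {status}")
```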

Despite the rapid technological advances, there are obstacles which prevent full and high automation from happening:

Sensors: which work by ‘seeing’ their environment, thus can be affected by bad weather, heavy traffic and road signs with graffiti.

Machine Learning: which is used to detect and classify objects, informing the vehicle how to act. However, there is a lack of consensus on industry standards for training and testing artificial intelligence.

Deep Learning: the vehicle continuously learns on the road, therefore the industry needs to draw up standards that will make the vehicle safe for others.

Regulations and Standards: agreements between government and manufacturers which can allow autonomous vehicles to operate. International standards need to be considered for vehicles to operate across borders.

Social Acceptability: the general public needs to trust these vehicles, especially after several high-profile accidents.

Drones

Drone: also known as unmanned aerial vehicle (UAV) – a remote controlled or autonomous flying robot.

Initially, these were used by the military for three main purposes: anti-aircraft target practice, gathering data from sensors and carrying out attacks as weapons.

Nowadays, drones are for commercial and private use and have two main functions: flight and navigation.

Examples: commercial drones - used for delivery and search and rescue operations; private drones - used for capturing video footage.

Real-World Example: Commercial Drones

Drones are used to make deliveries, especially to remote locations.

For example, Zipline, a company operating in Ghana, used drones to deliver medical supplies to 2,000 medical centers.

3.7B: Characteristics of Robots and Autonomous Technologies

Robots have become more intelligent and can move with fluidity.

This is due to developments in artificial intelligence, which enable robots to perceive and interpret data.

Sensory Inputs for Spatial, Environmental and Operational Awareness

Modern technologies enable robots to perceive their surroundings by emulating the five human senses:

Vision: robots use digital cameras to capture images but need two cameras (or a stereo vision camera) to create depth perception, enabling image recognition.

In addition, other technologies are needed to make robots aware of their surroundings.

Light Sensors: used to detect light levels, informing robots whether it is day or night.

Simple robots use infrared or ultrasound sensors, which emit beams of infrared light or sound signals. These calculate the distance to an object by measuring the time it takes for the light or sound to bounce off it and return.

More sophisticated robots (e.g. autonomous vehicles) use other sensors such as:

Lidar: light detection and ranging - measures shape, contour and height of objects

Sonar: sound navigation and ranging - measures the depth of water.

Radar: radio detection and ranging - detects moving objects and draws out the environment.

Although these sensors perform specialized tasks, they work on the same principle as an infrared sensor: they emit a signal (light, sound or radio waves) and measure its reflection off objects.
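The distance calculation behind these sensors can be sketched as a worked example. The signal travels to the object and back, so the distance is half of speed multiplied by time. The function name and the sample timing are assumptions for illustration; the two speed constants are standard physical values.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # infrared, lidar and radar signals
SPEED_OF_SOUND_AIR_M_S = 343.0      # ultrasound in air at about 20 degrees Celsius


def distance_from_echo(round_trip_seconds, signal_speed_m_s):
    """Distance to an object: the signal goes there and back, so halve the path."""
    return signal_speed_m_s * round_trip_seconds / 2


# Hypothetical ultrasound echo that returns after 10 milliseconds.
metres = distance_from_echo(0.01, SPEED_OF_SOUND_AIR_M_S)
print(f"Object is about {metres:.2f} m away")
```

Because light is so much faster than sound, a light-based sensor measuring the same distance has to time a far shorter interval, which is one reason simple robots often rely on ultrasound.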

Hearing: use of a microphone to collect sound which is converted into electrical signals. When coupled with voice recognition, robots can ‘understand’ what is being spoken.

Smell and taste: robots use a chemical sensor, which collects data later transformed into an electrical signal. Robots use pattern recognition to identify the origin of smell or taste.

Touch: robots feature end effectors, which are grippers with pressure sensors or resistive touch sensors. These sensors help robots determine how much strength they need to hold an object.

Capacitive touch sensors, on the other hand, detect objects which conduct electricity (including humans).

The Ability to Logically Reason with Inputs, Often Using Machine Vision and/or Machine Learning

A robot’s computerized control system is used for decision-making – sensors collect data, the control system processes it and then sends instructions to actuators and end effectors.
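The cycle of sensing, deciding and acting can be shown as a minimal sketch. It assumes a single distance sensor and a robot with only two possible actions; the function names, the 20 cm stopping distance and the readings are hypothetical.

```python
def decide(distance_cm, stop_distance_cm=20.0):
    """'Think' step: choose an action from one sensor reading."""
    return "stop" if distance_cm < stop_distance_cm else "forward"


def control_loop(sensor_readings_cm):
    """Run one sense-think-act cycle per reading and return the actions taken."""
    actions = []
    for reading in sensor_readings_cm:  # sense: take the next reading
        action = decide(reading)        # think: pick an action
        actions.append(action)          # act: a real robot would drive its actuators here
    return actions


# Hypothetical readings as the robot approaches an obstacle.
print(control_loop([80.0, 35.0, 12.0]))  # ['forward', 'forward', 'stop']
```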

Robots with basic artificial intelligence are designed to solve problems in limited domains.

Example: inspection robot (production line) - determines whether a product is within approved limits.

Modern robots use machine learning to develop a new skill within a limited capacity. This feature makes robots more intelligent and therefore more useful, and allows them to improve and adapt to their environments.

Example: industrial robots - can learn to select different part types, especially through supervised learning, a subcategory of machine learning which uses labeled data to train a model.
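Supervised learning can be illustrated with a small sketch. It uses a nearest-centroid classifier, one of the simplest supervised methods, chosen here only for illustration and not as the method real industrial robots use. The part types, measurements and function names are hypothetical.

```python
from collections import defaultdict
from math import dist


def train(labeled_examples):
    """Learn one average point (centroid) per label from labeled data."""
    groups = defaultdict(list)
    for features, label in labeled_examples:
        groups[label].append(features)
    return {
        label: tuple(sum(axis) / len(points) for axis in zip(*points))
        for label, points in groups.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new part."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data: (length in mm, weight in g) and the part type.
training_data = [
    ((10.0, 5.0), "bolt"),
    ((12.0, 6.0), "bolt"),
    ((30.0, 20.0), "bracket"),
    ((32.0, 22.0), "bracket"),
]
model = train(training_data)
print(predict(model, (11.0, 5.5)))   # bolt
print(predict(model, (29.0, 19.0)))  # bracket
```

The labels in the training data are what make this supervised: the model is told the correct answer for each example and uses those answers to classify parts it has not seen before.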

Machine vision has enhanced depth and image recognition, making robots more useful and accurate on a production line.

The Ability to Interact and Move in Physical Environments

Robots have the ability to move in a physical space, whether they have a robotic arm or motorized wheels.

Actuator: a part of a device that moves or controls a mechanism. These control the ‘joints’ of a robot.

Actuators can be an electric motor, a hydraulic system (driven by incompressible fluid) or a pneumatic system (driven by compressed gas). All need power to operate.

Example: mobile robots use a battery to move.

End effector: a peripheral device attached at the end of an actuator in order to grip objects and attach tools or sensors. End effectors can be either mechanical or electromechanical.

An end effector can include the following:

Grippers: allow robots to pick up and manipulate objects. These are the most common form of end effectors.

Process tools: tools designed for specific tasks - e.g. welding, spraying, drilling.

Sensors: used for applications such as robot inspections.

The Demonstration of Some Degree of Autonomy

Level of autonomy is determined by how independently a robot can operate without human intervention (a controller).

Semi-autonomous Robots

Have some level of intelligence - they can react to some conditions without the need of guidance.

Example: basic robotic vacuum - works autonomously, using sensors to map the space, plan its path and detect debris. However, it needs human intervention to change its settings.

Fully Autonomous Robots

Operate independently, can accomplish complex tasks and have greater mobility.

Nonetheless they are still restricted to one working environment.

3.7C: Evolution of Robots and Autonomous Technologies

Early Forms of Robots and Autonomous Technology

The idea of robots is not new, being recorded in Egyptian and Greek civilizations. It is through the development of science, technology and artificial intelligence that robots have become a reality.

By 1500 BC, Egyptians were using water clocks. Much later designs, such as Al-Jazari’s Scribe Clock (around 1206 AD), used flowing water to rotate figurines placed on top, which acted like clock hands.

Around 400 BC, Greek mathematician Archytas built a steam-powered mechanical bird.

1948: William Grey Walter creates Elmer and Elsie, autonomous robots which used wheels for mobility and could return to their recharging station when needed.

1966-72: A Stanford Research Institute team led by Charles Rosen develops Shakey, a robot which had some mobility through the use of wheels and had television ‘eyes’ to inspect the room.

Additionally, Shakey could make simple responses to its surroundings.

Robots in Science Fiction and Philosophy

The idea of robots was commonly found in science fiction stories.

1921: Czech author Karel Čapek formally introduces the term ‘robot’ in his play Rossum’s Universal Robots (R.U.R.).

The play’s protagonist created a robot and was later killed by it - a common theme reflecting anxieties which are still present.

1942: Russian-born American author Isaac Asimov published his Three Laws of Robotics, which state that a robot must not harm humans, must obey human orders unless these would harm humans, and may protect its own existence as long as this does not conflict with the first two laws.

Robots Designed for Industry and Manufacturing

Robots have been preferred in manufacturing since they are able to perform repetitive tasks and are capable of lifting heavy objects.

Some landmark developments include:

1951 - SNARC by Marvin Minsky: First neural network simulator.

1954-61 - Unimate by George Devol (USA): First programmable industrial robot, which was capable of transporting die castings and welding them. It was designed for General Motors, a car manufacturer.

1974 - IRB-6 by ABB (Sweden): First electric industrial robot controlled by a microcomputer. It was used for welding and polishing steel tubes.

1981 - Consight, General Motors: Three separate robots were set up to select up to six different auto parts at a time. The robots used visual sensors.

1981 - SCARA, Selective Compliance Assembly Robot Arm (Japan): An articulated robotic arm which was capable of loading and unloading items from one part of the manufacturing process to another.

1994 - Motoman MRC System, Yaskawa America, Inc.: Robotic arms with up to 21 axes (degrees of freedom) and the ability to synchronize two robots.

2008 - UR5 by Universal Robots, installed at Linatex (Denmark): The first collaborative robot (or ‘cobot’, intended to engage in direct human-robot interaction) to be placed on a production floor.

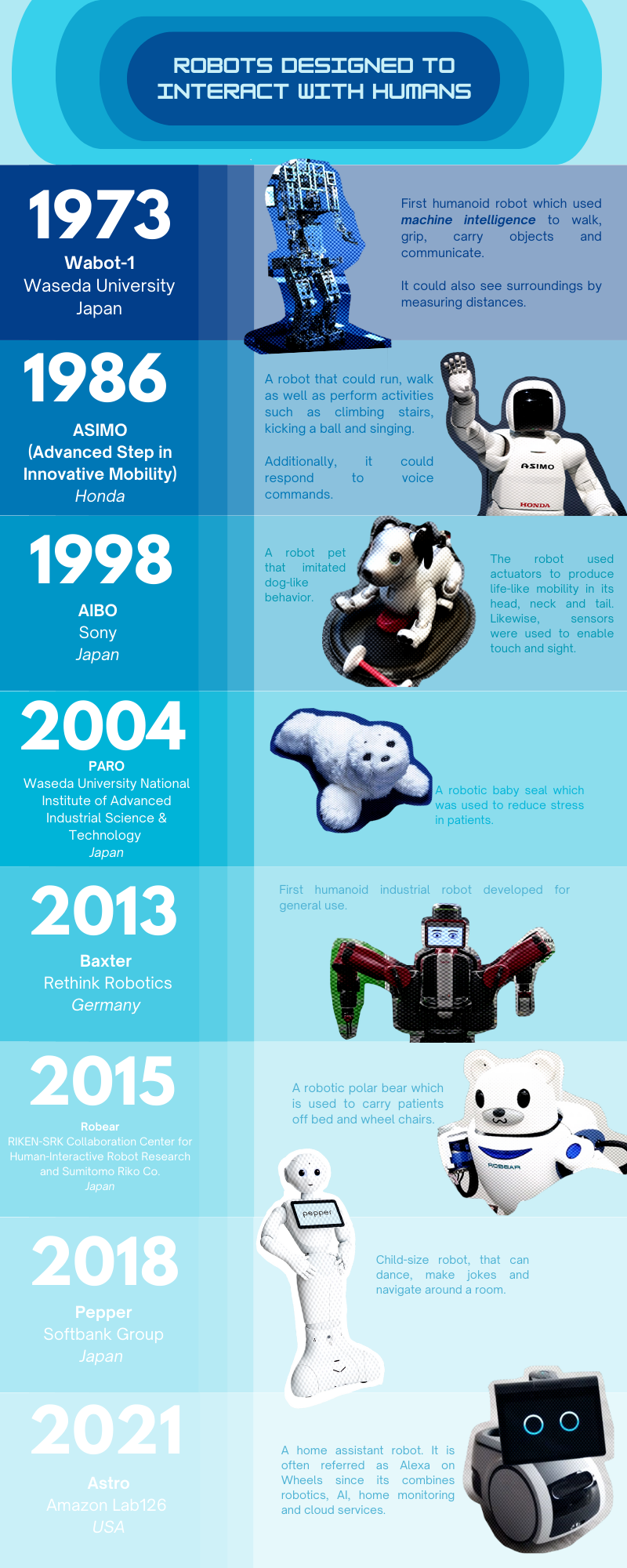

Robots Designed to Interact with Humans

Machine Consciousness, Cognitive Robotics and Robot Rights

Cognitive robotics: an emerging field which aims to design robots with human-like intelligence, which can perceive their environment, plan their actions and deal with uncertain situations.

The goal for this field is to develop predictive capabilities and the ability to view the world from different perspectives.

Earlier versions of robots are thought to lack consciousness and to only simulate intelligence.

As developments enable more sophisticated robots, the rights of robots are being discussed.

Real-World Example: Sophia (2016)

Sophia, a robot originally designed to help elderly people given its human-like features, became the first robot to be granted legal personhood after Saudi Arabia granted her citizenship.

This event propelled her to fame but also raised concerns about the limits of the rights and autonomy granted to robots.

3.7D: Robots and Autonomous Technology Dilemmas

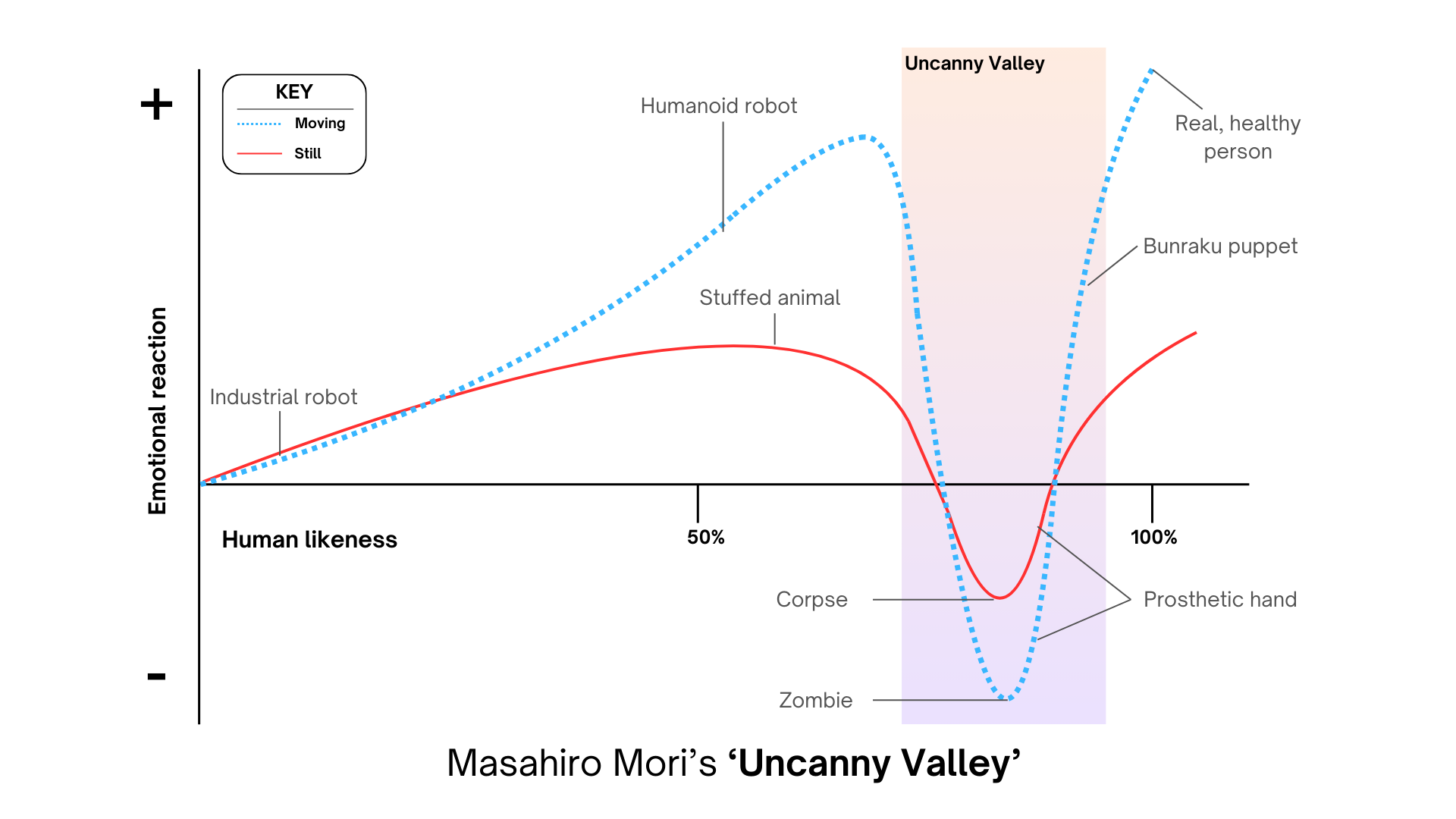

Anthropomorphism and The Uncanny Valley

Anthropomorphism: giving human-like characteristics to non-human entities.

Traditionally, animal characters in the media (e.g. Winnie the Pooh) were given such features. Robots are now given ‘friendly’ appearances to make interaction with humans more natural.

Examples: Sophia - displays uncanny lifelike expressions, ‘Pepper’ - adorable facial expression

Uncanny Valley: hypothesized emotional response to robots’ anthropomorphism, describing the unsettling feeling a human has when interacting with lifelike humanoid robots.

The concept was introduced by professor Masahiro Mori in 1970. He argued that robots become more appealing as they become more human-like, but only up to a certain point; beyond it (i.e. the uncanny valley), a near-human likeness produces a negative reaction.

The proposition of the uncanny valley presents dilemmas for robot designers – while lifelike robots can be accepted in certain circumstances (e.g. training sessions), they will likely not be generally accepted.

Complexity of Human and Environmental Interactions

The field of robotics aims to create robots that can effectively work with humans as well as being able to predict changes in their environment.

Cobots: robots designed to work alongside humans and augment their capabilities.

Because most cobots will work in the service sector (e.g. shop), they must be capable of understanding human emotions, language and behavior.

Nonetheless, it is challenging to develop robots that both interact with their environment and imitate human action.

Usually, when a robot is trained in a particular environment, even a minimal alteration might require it to relearn and adapt to the modified environment.

In addition, walking requires complex technologies to mimic human mobility.

Real-World Example: Autonomous vehicle accident (2018)

A pedestrian was killed by an autonomous car after its safety driver failed to pay attention to the road and thus did not take control of the vehicle in time.

Uneven and Undeveloped Laws, Regulations and Governance

Most guiding principles on robots are still based on Asimov’s Laws of Robotics from the 1940s. However, recent developments have raised new concerns and a need for clarification:

Nowadays, robots are more varied, therefore an agreed definition needs to be established.

Laws would not apply to all robots - for example, a military robot would not comply with Asimov’s first law (robots should not harm humans).

Countries with advances in robotics are prioritizing legislation, especially for drones, autonomous vehicles and manufacturing robots. Some examples include:

Robot Ethics Charter - South Korea (2007): inspired by Asimov’s laws, it was envisioned as an ethical guide on robot development and designed to reduce problems associated with service and social robots for the elderly.

UK (2016): published a standards guide for ethical design and application of robots. Although the document is not legally binding, it aimed to raise awareness of dilemmas faced in the future.

Laws may differ between countries, although robot designers are still held accountable.

Robot applications are considered to be products and would therefore fall under product liability laws (e.g. the US Restatement (Third) of Torts and the European Product Liability Directive).

Displacement of Humans in Multiple Contexts and Roles

Real-World Example: Automation and job losses (2019)

Oxford Economics predicted that 20 million manufacturing jobs worldwide could be replaced by robots by 2030.

Automation is replacing lower-skilled jobs. Therefore, governments need to find a balance between promoting innovation and avoiding unemployment growth.

The study focused on manufacturing jobs; however, other industries are also vulnerable to automation, such as:

Food Industry: relies mainly on repetitive physical activity in a predictable environment – conditions in which a robot can thrive.

Education and health care: interpersonal work could potentially be performed by cobots.

Law Enforcement and Security: cobots are already used alongside humans in patrolling, but could soon do this task autonomously.

Nonetheless, as robots become more sophisticated, there will be demand for high-skilled jobs such as robot engineers, robotic technicians, software developers, robotic operators and robotics salespeople.