Brain & Cog Summary week 3

Current as of: November 2024

CHAPTER 6: OBJECT RECOGNITION

6.1 COMPUTATIONAL PROBLEMS IN OBJECT RECOGNITION

Major concepts about object recognition:

Use terms precisely: when discussing particular cases or patients, researchers must be precise with terms such as perceive and recognize.

Object perception is unified: the sensory systems are well divided but our perception of objects is unified. Perception requires more than simply perceiving the features of objects.

Perceptual capabilities are enormously flexible and robust: perception does not rely only on the stimuli we perceive.

The product of perception is intimately interwoven with memory: object recognition is more than just binding features together to form a coherent form. There is an interplay between perception and memory. Part of memory retrieval is recognizing that things belong to certain categories.

Object constancy is our ability to recognize an object in countless situations, even if we get different sensory information. The visual information emanating from an object varies as a function of three factors: viewing position, illumination conditions, and context.

Viewing position: Sensory information depends highly on the viewpoint. The human perceptual system is adept at separating changes caused by shifts in viewpoint from changes intrinsic to an object itself. Visual illusions take advantage of the fact that the brain uses its experience to make assumptions about a visual scene. The visual system automatically uses many sensory cues and past knowledge to maintain object constancy.

Illumination: the visible appearance of an object changes with how light and shadows fall on it. However, object recognition is largely insensitive to illumination.

Context: objects are usually surrounded by other objects/backgrounds. It is easy to separate objects from other objects/backgrounds. Our perceptual system quickly partitions the scene into components.

Object recognition must take into account the 3 components of variability, be general enough to support object constancy, and specific enough to pick out slight differences in the objects we perceive.

6.2 MULTIPLE PATHWAYS FOR VISUAL PERCEPTION

Pathways carrying visual information from the retina segregate into multiple processing streams, but most information goes to the primary visual cortex (V1, or striate cortex), located in the occipital lobe.

Output from V1 travels in two major fiber bundles (fasciculi) that carry visual information to regions of the parietal and temporal cortex involved in object recognition.

Superior Longitudinal Fasciculus: dorsal path, from the striate cortex to the posterior regions of the parietal lobe (dorsal occipitoparietal stream)

Inferior Longitudinal Fasciculus: ventral path, from the occipital striate cortex to the temporal lobe (ventral occipitotemporal stream)

THE “WHAT” AND “WHERE” PATHWAYS

The two pathways process fundamentally different information.

Ventral stream: object perception and recognition (what)

Dorsal stream: spatial perception (where)

Lesion studies in monkeys gave the initial data for the what-where dissociation of the two streams. More recent evidence indicates that what and where pathways are not limited to the visual system. The auditory pathways are also divided in a similar manner.

REPRESENTATIONAL DIFFERENCES IN DORSAL/VENTRAL STREAMS

Dorsal stream neurons in the parietal lobe may respond similarly to many different stimuli. The receptive fields of some of these cells include the fovea, while others cover regions that exclude the fovea. This helps with detecting the presence and location of a stimulus.

Ventral stream neurons in the temporal lobe have receptive fields that always include the fovea and can be activated by a stimulus that falls within either the left or right visual field. Such a receptive field is ideal for object recognition. Cells in the temporal lobe have a diverse pattern of selectivity.

PERCEPTION FOR IDENTIFICATION VS PERCEPTION FOR ACTION

The parietal cortex is central to spatial attention. Lesions of the parietal cortex are also associated with deficits in representing the spatial layout of a scene and the spatial relations among objects.

Agnosia is an inability to process sensory information even though the sense organs and memory are not defective. To be agnosic means to experience a failure of knowledge or recognition of objects, persons, shapes, sounds, or smells. When the disorder is limited to the visual modality, it is referred to as visual agnosia. This is a deficit in recognizing objects even when the processes for analyzing basic properties such as shape, color, and motion are relatively intact.

In optic ataxia, patients can recognize objects, yet they cannot use visual information to guide their actions. When someone with optic ataxia reaches for an object, she doesn't move directly toward it; rather, she gropes about like a person trying to find something in the dark. Optic ataxia is associated with lesions in the parietal cortex.

6.3 SEEING SHAPES AND PERCEIVING OBJECTS

Object perception depends on the analysis of shape, color, texture, and motion, which contribute to normal perception. But, even when the surface features are absent or applied inappropriately, we are still able to recognize the object using the perceptual ability to match the analysis of shape and form to an object, regardless of color, texture, or motion cues.

SHAPE ENCODING

One way to investigate how we encode shapes is to identify areas of the brain that are active when we compare contours that form a recognizable shape versus contours that are just squiggles.

There is an idea that perception involves a connection between sensation and memory in the brain. Researchers explored this question using a PET study designed to isolate the specific mental operations used when people viewed familiar shapes, novel shapes, or stimuli formed by scrambling the shapes to generate random drawings. Viewing both novel and familiar stimuli led to increases in regional cerebral blood flow bilaterally in the lateral occipital cortex (LOC). Many others have also shown that the LOC is critical for shape and object recognition. Shape perception is insensitive to the specific visual cues that define an object; this property is known as cue invariance.

The functional specialization of the LOC for shape perception is evident in 6-month-old babies, as shown with functional near-infrared spectroscopy (fNIRS). This technique works best when targeting cortical tissue such as the LOC, which is close to the skull.

It was also demonstrated that there is a lower BOLD response when a stimulus is repeated, known as the repetition suppression (RS) effect. It is hypothesized to indicate increased neural efficiency, meaning that the neural response to a stimulus is more efficient (faster) when the pattern has been recently activated.

FROM SHAPES TO OBJECTS

Multistable perception: a perceptual phenomenon in which an observer experiences an unpredictable sequence of spontaneous subjective changes (vase/figures example). Does the representation of individual features or percept change first?

Binocular rivalry: the phenomenon of visual perception in which perception alternates between different images presented to each eye. Researchers made special glasses that present radically different images to each eye and have a shutter that can alternately block the input to one eye and then the other at very rapid rates. It was found that the activity in early visual areas was closely linked to the stimulus while the activity in the higher areas (IT cortex) was linked to percept. The activity of all the cells in the higher-order visual areas of the temporal lobe was tightly correlated with the animal’s perception.

GRANDMOTHER CELLS AND ENSEMBLE CODING

According to hierarchical theories of object recognition, cells in the initial areas of the visual cortex code elementary features such as line orientation and color. The outputs from these cells are combined to form detectors sensitive to higher-order features such as corners or intersections. The types of neurons that can recognize a complex object are called gnostic units.

The term grandmother cell refers to the notion that people’s brains might have a gnostic unit that becomes excited only when their grandmother comes into view.

The experiments that led to this assumption have several limitations:

Because the number of possible stimuli is infinite and recordings are made from only a small subset of neurons, a cell could be activated in the same manner by a broader set of stimuli than those tested.

The gnostic-like cells are not just perceptual; they respond to anything that is relevant to the concept of the perceived stimulus.

Alternative to the grandmother cell hypothesis: object recognition results from activation across complex feature detectors. According to the ensemble hypothesis, recognition is due not to one unit but to the collective activation of many units.
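The contrast between a single gnostic unit and ensemble coding can be illustrated with a toy sketch. The three units, the three people, and the binary response patterns below are hypothetical values chosen for illustration, not data from any recording study. Each unit on its own is ambiguous, while the pattern across all units identifies one person.

```python
# Hypothetical ensemble of three broadly tuned units (assumed values).
# 1 = the unit responds to that person, 0 = it stays silent.
ENSEMBLE_PATTERNS = {
    "grandmother": (1, 1, 0),
    "aunt": (1, 0, 1),
    "neighbor": (0, 1, 1),
}


def candidates_from_single_unit(unit_index):
    """Return everyone who drives one given unit: a single unit is ambiguous."""
    return sorted(
        name
        for name, pattern in ENSEMBLE_PATTERNS.items()
        if pattern[unit_index] == 1
    )


def identify_from_ensemble(observed_pattern):
    """Return everyone whose stored pattern matches activity across all units."""
    return sorted(
        name
        for name, pattern in ENSEMBLE_PATTERNS.items()
        if pattern == tuple(observed_pattern)
    )


print(candidates_from_single_unit(0))      # ['aunt', 'grandmother']
print(identify_from_ensemble((1, 1, 0)))   # ['grandmother']
```

In this sketch no unit is a "grandmother cell", yet the collective activation is still sufficient for recognition.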

EXPLOITING THE COMPUTATIONAL POWER OF NEURAL NETWORKS

Recent advances in artificial intelligence research show that a layered architecture with extensive connectivity and subject to some simple learning principles is well suited to learning about the rich structure of the environment.

Input layers: representation may be somewhat akin to information in the environment

Hidden layers: additional processing steps in which the information is recombined and re-weighted according to different processing rules

Output layers: representation might correspond to a decision
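The three kinds of layers can be sketched as a tiny feedforward pass. This is a minimal illustration with hand-set, hypothetical weights (a real deep network learns its weights and has many more units); the layer sizes, the ReLU and softmax functions, and the "left/right" interpretation are assumptions made for the example.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs plus a bias per unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive activity, silence negative activity."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn output activity into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer (recombine, re-weight) -> output layer (decision)."""
    hidden = relu(dense(pixels, hidden_w, hidden_b))
    return softmax(dense(hidden, out_w, out_b))


# Hypothetical weights: 4 input "pixels", 2 hidden units, 2 output decisions.
HIDDEN_W = [[1.0, 1.0, -1.0, -1.0],   # unit driven by brightness on the left
            [-1.0, -1.0, 1.0, 1.0]]   # unit driven by brightness on the right
HIDDEN_B = [0.0, 0.0]
OUT_W = [[2.0, -2.0],                 # decision "left"
         [-2.0, 2.0]]                 # decision "right"
OUT_B = [0.0, 0.0]

probs = forward([1.0, 1.0, 0.0, 0.0], HIDDEN_W, HIDDEN_B, OUT_W, OUT_B)
print(probs)  # the first probability ("left") dominates
```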

The key insight to be drawn from research with deep learning networks is that these systems are remarkably efficient at extracting statistical regularities or creating representations that can solve complex problems.

TOP-DOWN EFFECTS ON OBJECT RECOGNITION

It is important to recognize that information processing is not a one-way, bottom-up street. One model of top-down effects emphasizes that input from the frontal cortex can influence processing along the ventral pathway. The frontal lobe generates predictions about what the scene is, using a rapid early analysis of the scene and knowledge of the current context. These top-down predictions can then be compared with the bottom-up analysis occurring along the ventral pathway of the temporal cortex, making for faster object recognition by limiting the field of possibilities.

MIND READING: DECODING AND ENCODING BRAIN SIGNALS

Decoding: the brain activity provides the coded message and by examining the brain activity we can make predictions about what is perceived. This raises 2 issues:

The ability to decode mental states is limited by our models of how the brain encodes information. Having a good model of what different regions represent can be a powerful constraint on the predictions we make of what the person is seeing.

The ability to decode is limited by the resolution of our measurement systems (spatial/temporal).

Perception is a rapid, fluid process. A good mind-reading system should be able to operate at similar speeds.
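The decoding idea can be sketched with a nearest-centroid classifier. This is only one simple decoding approach, swapped in for illustration; the "voxel" activity patterns and the face/house labels below are made-up values, not measurements.

```python
import math


def centroid(patterns):
    """Average activity pattern across trials, one value per voxel."""
    n = len(patterns)
    return [sum(column) / n for column in zip(*patterns)]


def train(trials):
    """Build the model: one mean activity pattern per stimulus label."""
    return {label: centroid(patterns) for label, patterns in trials.items()}


def decode(model, pattern):
    """Predict the stimulus whose mean pattern is closest to the observed one."""
    return min(model, key=lambda label: math.dist(model[label], pattern))


# Hypothetical training data: three voxels, two trials per stimulus category.
TRAINING_TRIALS = {
    "face": [[0.9, 0.2, 0.1], [0.8, 0.3, 0.2]],
    "house": [[0.1, 0.2, 0.9], [0.2, 0.1, 0.8]],
}

model = train(TRAINING_TRIALS)
print(decode(model, [0.7, 0.3, 0.3]))  # face
```

Both limits named above show up in the sketch: the prediction is only as good as the model of what each voxel represents, and only as fine-grained as the number of voxels measured.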

6.4 SPECIFICITY OF OBJECT RECOGNITION IN HIGHER VISUAL AREAS

IS FACIAL PROCESSING SPECIAL?

Multiple studies argue that face perception does not use the same processing mechanisms as those used in object recognition, but instead depends on a specialized network of brain regions. Although clinical evidence showed that people could have what appeared to be selective problems in face perception, more compelling evidence of specialized face perception mechanisms comes from neurophysiological studies with nonhuman primates. Neurons in various areas of the monkey brain show selectivity for face stimuli.

fMRI studies in humans also show specificity for faces, in a region known as the fusiform face area (FFA). This is not the only region in humans that shows a strong BOLD response to visual face stimuli.

Different face-sensitive regions are specialized for processing certain types of information that can be gleaned from faces.

An alternative hypothesis: level of expertise. Activation in the fusiform cortex, which is made up of more than just the FFA, is in fact greater when people view objects for which they have some expertise. If participants are trained to make fine discriminations between novel objects, the fusiform response increases as expertise develops.

DIVING DEEPLY INTO FACIAL PERCEPTION

Single-cell recording in lateral (LAT) and anterior (ANT) face areas within the IT cortex showed not only that the shape and appearance dimensions were critical in describing the tuning properties of the cells, but also that the two areas had differential specializations. The activity level of cells in the LAT region varied strongly as a function of the shape; in contrast, the activity level of cells in the ANT region varied strongly as a function of appearance.

DOES THE VISUAL SYSTEM CONTAIN OTHER CATEGORY-SPECIFIC SYSTEMS?

Evolutionary pressures led to the development of a specialized system for face perception. One region of the ventral pathway, the parahippocampus, was consistently engaged when the control stimuli contained pictures of scenes such as landscapes. The parahippocampal place area (PPA) is activated when people are making judgments about spatial properties or relations. Patients with lesions in the PPA become disoriented in new environments.

The extrastriate body area (EBA) and the fusiform body area (FBA) are important for recognizing parts of the body.

6.5 FAILURES IN OBJECT RECOGNITION

Patients with visual agnosia have provided a window into the processes that underlie object recognition. By analyzing the subtypes of visual agnosia and their associated deficits, we can draw inferences about the processes that lead to object recognition. Although the term visual agnosia has been applied to a number of distinct disorders associated with different neural deficits, patients with visual agnosia generally have difficulty recognizing objects that are presented visually or require the use of visually based representations.

SUBTYPES OF VISUAL AGNOSIA

Apperceptive visual agnosia: the recognition problem is one of developing a coherent percept: The basic components are there, but they can’t be assembled. Elementary visual functions such as acuity, color vision, and brightness discrimination remain intact. The object recognition problems become especially evident when a patient is asked to identify objects on the basis of limited stimulus information.

Integrative visual agnosia: a subtype of apperceptive visual agnosia in which the patient perceives the parts of an object but is unable to integrate them into a coherent whole. The deficit becomes especially apparent when the patient is asked to identify objects that overlap with each other.

Associative visual agnosia: perception occurs without recognition. It is the inability to link a percept with its semantic information, such as its name, properties, or functions. A patient can perceive objects with the visual system but cannot understand them or assign meaning to them.

ORGANIZATIONAL THEORIES OF CATEGORY SPECIFICITY

Some patients with agnosia exhibit object recognition deficits that are selective for specific categories or domains of objects (for example, living versus nonliving things). If associative agnosia represents a loss of knowledge about visual properties, then we might suppose that a category-specific disorder results from a selective loss within, or a disconnection from, this knowledge system. Brain injuries that produce visual agnosia in humans do not completely destroy the connections to semantic knowledge. Because the damage is not total, it seems reasonable that circumscribed lesions might destroy tissue devoted to processing similar types of information.

Sensory/functional hypothesis: The core assumption here is that conceptual knowledge is organized primarily around representations of sensory properties (e.g., form, motion, color), as well as motor properties associated with an object, and that these representations depend on modality-specific neural subsystems.

Domain-specific hypothesis: conceptual knowledge is organized primarily by categories that are evolutionarily relevant to survival and reproduction. Dedicated neural systems evolved because they enhanced survival by more efficiently processing specific categories of objects.

DEVELOPMENTAL ORIGINS OF CATEGORY SPECIFICITY

Visual experience is not necessary for category specificity to develop within the organization of the ventral stream. The difference between animals and nonliving objects must reflect something more fundamental than what can be provided by visual experience. Researchers suggest that category-specific regions of the ventral stream are part of larger neural circuits innately prepared to process information about different categories of objects.

6.6 PROSOPAGNOSIA

Prosopagnosia: impairment in recognizing faces. As with other visual agnosias, a diagnosis of prosopagnosia requires that the deficit be specific to the visual modality. Prosopagnosia is usually observed in patients who have lesions in the ventral pathway, especially occipital regions associated with face perception and the fusiform face area (FFA). In many cases the lesions are bilateral, resulting from strokes affecting the posterior cerebral artery, from encephalitis, or from carbon monoxide poisoning. Prosopagnosia can also occur after unilateral lesions; in these cases, the damage is usually in the right hemisphere.

DEVELOPMENTAL DISORDERS WITH FACE RECOGNITION DEFICITS

Congenital prosopagnosia (CP) is defined as a lifetime impairment in face recognition that cannot be attributed to a known neurological condition.

Holistic processing is a form of perceptual analysis that emphasizes the overall shape of an object. This mode of processing is especially important for face perception. We recognize a face by the overall configuration of its features, and not by the individual features themselves. Analysis-by-parts processing is a form of perceptual analysis that emphasizes the component parts of an object. This mode of processing is important for reading, when we decompose the overall shape into its constituent parts.

PROCESSING ACCOUNTS OF PROSOPAGNOSIA

There is a double dissociation of brain systems for face and object recognition. Face perception appears to be unique in one special way: it is accomplished by more holistic processing. Reading lies at the other extreme: we benefit little from noting general features such as word length or handwriting, and have to recognize the individual letters to differentiate between words. Object recognition falls somewhere between the two extremes of words and faces: objects can be identified from individual features but also with a holistic approach.

Word perception produces very different patterns of activation from those observed in studies of face perception.

On this account, agnosia for objects can co-occur with either alexia or prosopagnosia, but we should not expect to find cases in which face perception and reading are impaired while object perception remains intact.

Thatcher effect: recognizing individual feature differences in inverted faces is more challenging.

The visual word form area: Letter strings do not activate the FFA in the right fusiform gyrus; rather, the activation is centered more dorsally, most prominent in the left hemisphere’s fusiform gyrus (independent of whether the words are presented in the left or right visual field).

CHAPTER 7: ATTENTION

7.1 SELECTIVE ATTENTION AND THE ANATOMY OF ATTENTION

William James captured key characteristics of the attentional phenomena:

It is voluntary (we choose to focus on things consciously)

It is selective (inability to attend to everything at once)

It has a limited capacity

Arousal refers to the global physiological and psychological state, as a continuum from deep sleep to hyperalertness (intense fear).

Selective attention is not a global brain state. Selective attention is the ability to prioritize and attend to some things and not others.

Attention can be:

Goal-driven (top-down control)

Stimulus-driven (bottom-up control)

Several cortical areas are important for attention: portions of the superior frontal cortex, posterior parietal cortex, and posterior superior temporal cortex, as well as more medial brain structures, including the anterior cingulate cortex. The superior colliculus in the midbrain and the pulvinar nucleus of the thalamus, located between the midbrain and the cortex, are involved in the control of attention.

Attention influences how we process sensory inputs, store that information in memory, process it semantically, and act on it.

7.2 THE NEUROPSYCHOLOGY OF ATTENTION

The best-known attention disorder is attention deficit hyperactivity disorder (ADHD). MRI studies of ADHD patients have shown decreased white matter volume throughout the brain, especially in the prefrontal cortex. ADHD has heterogeneous genetic and environmental risk factors and is characterized by disturbances in neural processing that may result from anatomical variations of white matter throughout the attention network.

NEGLECT

Unilateral spatial neglect is a result of damage in the brain’s attention network in one hemisphere (as a result of stroke). More severe and persistent effects occur when the right hemisphere is damaged. The patient behaves as though the left regions of space and the left parts of objects simply do not exist, and has limited or no awareness of her lesion and deficit.

Despite having normal vision, patients with neglect exhibit deficits in attending to and acting in the direction opposite the side of the unilateral brain damage. Neglect patients show a pattern of eye movements biased in the direction of the right visual field.

NEUROPSYCHOLOGICAL TESTS OF NEGLECT

Neuropsychological tests are used to diagnose neglect:

In the line cancellation test, patients are given a sheet of paper containing many horizontal lines and are asked to bisect them in the middle. Patients with left-sided neglect tend to bisect the lines to the right of the middle.

There is also a related test that asks patients to copy objects or scenes. When a patient with neglect caused by right-hemisphere damage is asked to copy a clock, the patient is unable to draw the entire clock and tends to neglect its left side.

EXTINCTION

Neglect is found for items in visual memory during remembrance of a scene, as well as for items in the external sensory world. Visual field testing shows that neglect patients are not blind in their left visual field: they are able to detect stimuli normally when those stimuli are salient and presented in isolation. Neglect is not a primary visual deficit.

Neglect becomes obvious when patients are presented with two stimuli simultaneously, one in each hemifield. This is called extinction: the presence of the competing stimulus prevents the patient from detecting the contralesional stimulus. The phenomenon of extinction in neglect patients suggests that sensory inputs are competitive, because two stimuli presented simultaneously compete for attention.

COMPARING NEGLECT AND BALINT’S SYNDROME

Balint’s syndrome patients demonstrate 3 main deficits:

Simultanagnosia: difficulty perceiving the visual field as a whole scene.

Ocular apraxia: deficit in making eye movements (saccades) to scan the visual field.

Optic ataxia: deficit in making visually guided movements.

Neglect and Balint’s syndrome are different due to different brain regions being damaged.

Neglect is the result of unilateral lesions of the parietal, posterior temporal, and frontal cortex, and subcortical areas such as the basal ganglia, thalamus, and midbrain

Balint’s syndrome is a result of bilateral occipito-parietal lesions.

7.3 MODELS OF ATTENTION

Attention is divided into 2 main forms which differ in their properties and neural mechanisms:

Voluntary attention (endogenous attention): top-down, goal-driven process. The ability to internally attend to something.

Reflexive attention (exogenous attention): bottom-up, stimulus-driven process. Sensory events capture our attention.

Overt attention: When you turn your head to orient toward a stimulus

Covert attention: involves changes in internal neural processing and not merely the aiming of sense organs to better pick up information.

HERMANN VON HELMHOLTZ AND COVERT ATTENTION

THE COCKTAIL PARTY EFFECT

Selective auditory attention enables you to participate in a conversation at a busy restaurant or party while ignoring the rest of the sounds around you. Cherry investigated this by using a dichotic listening study setup. Cherry discovered that under such conditions, for the most part, participants could not report any details of the speech in the unattended ear. Voluntary attention affected what was processed by the participants.

Bottlenecks in information processing seem to occur at stages of perceptual analysis that have a limited capacity. Are the effects of selective attention evident early in sensory processing or only later, after sensory and perceptual processing are complete? Does the brain faithfully process all incoming sensory inputs to create a representation of the external world, or is that representation biased by your current goals and the stored knowledge of your internal world?

EARLY SELECTION MODELS VS LATE SELECTION MODELS

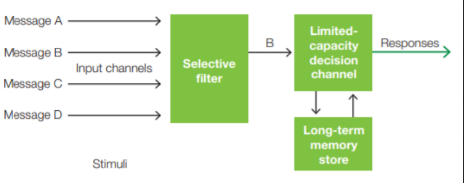

In Broadbent’s model, the sensory inputs that can enter higher levels of the brain for processing are screened early in the information-processing stream by a gating mechanism so that only the “most important,” or attended, events pass through.

Early Selection is the idea that a stimulus can be selected for further processing before perceptual analysis of the stimulus is complete.

Late selection is the idea that the perceptual system first processes all inputs equally, and then selection takes place at higher stages of information processing that determine whether the stimuli gain access to awareness, are encoded in memory, or initiate a response.

The cocktail party experiments showed that unattended information could be consciously perceived when it was salient or interesting. Ignored information is therefore not completely lost. Information from the unattended channel was not completely blocked from higher analysis but was degraded or attenuated instead. It can reach higher stages of analysis but with greatly reduced signal strength.
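The difference between an all-or-none early gate and attenuation can be sketched as a gain applied to the unattended channel. All numbers below (signal strengths, gains, threshold) are hypothetical, and treating salience as a stronger input signal is a simplifying assumption of this sketch.

```python
def reaches_awareness(strength, attended, unattended_gain, threshold=0.5):
    """Return True if the signal is still above threshold after attentional gain.

    Attended inputs pass at full strength (gain 1.0); unattended inputs are
    scaled by unattended_gain before reaching higher stages of analysis.
    """
    gain = 1.0 if attended else unattended_gain
    return strength * gain >= threshold


ALL_OR_NONE_GATE = 0.0   # strict early gate: unattended input is fully blocked
ATTENUATION = 0.3        # unattended input is weakened, not blocked

ORDINARY_WORD = 1.0      # hypothetical signal strength
SALIENT_WORD = 2.0       # e.g. something personally interesting (assumed stronger)

# Strict gate: nothing from the unattended ear gets through.
print(reaches_awareness(SALIENT_WORD, False, ALL_OR_NONE_GATE))   # False
# Attenuation: ordinary words are lost, salient ones can still break through.
print(reaches_awareness(ORDINARY_WORD, False, ATTENUATION))       # False
print(reaches_awareness(SALIENT_WORD, False, ATTENUATION))        # True
```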

QUANTIFYING THE ROLE OF ATTENTION IN PERCEPTION

One way of measuring the effect of attention on information processing is to examine how participants respond to target stimuli under differing conditions of attention.

Cueing Tasks: the focus of attention is manipulated by the information in the cues provided. One popular method is to provide cues that direct the participant's attention to a particular location or target feature before presenting the task-relevant target stimulus.

Endogenous cueing is when the orienting of attention to the cue is voluntary and driven by the participant's goal. In contrast, an exogenous cue automatically captures attention because of its physical features. When a cue correctly predicts the location of the subsequent target, it is a valid trial. Sometimes, though, because the target may be presented at a location not indicated by the cue, the participant is misled in an invalid trial.

Also, the researcher may include some cues that give no information about the most likely location of the impending target - this is the neutral cue.
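A common way to summarize such an experiment is to compare mean reaction times across valid, neutral, and invalid trials. The sketch below assumes the neutral condition is used as the baseline for a "benefit" and a "cost"; the reaction times are invented for illustration and are not results from any study.

```python
from statistics import mean


def cueing_effects(trials):
    """Summarize reaction times (ms) by cue condition.

    trials: list of (condition, reaction_time_ms) pairs, where condition is
    "valid", "neutral", or "invalid".
    """
    by_condition = {}
    for condition, reaction_time in trials:
        by_condition.setdefault(condition, []).append(reaction_time)
    means = {condition: mean(rts) for condition, rts in by_condition.items()}
    return {
        "means": means,
        # Benefit: how much faster responses are after a valid cue than a neutral one.
        "benefit": means["neutral"] - means["valid"],
        # Cost: how much slower responses are after an invalid cue than a neutral one.
        "cost": means["invalid"] - means["neutral"],
    }


# Hypothetical reaction times in milliseconds.
TRIALS = [
    ("valid", 250), ("valid", 270),
    ("neutral", 290), ("neutral", 310),
    ("invalid", 330), ("invalid", 350),
]

result = cueing_effects(TRIALS)
print(result["benefit"], result["cost"])  # 40 40
```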

According to most theories, a highly predictive cue induces participants to direct their covert attention internally, shining a sort of mental “spotlight” of attention onto the cued visual field location. The spotlight is a metaphor to describe how the brain may attend to a spatial location.

7.4 NEURAL MECHANISMS OF ATTENTION AND PERCEPTUAL SELECTION

Attention is not only a visual phenomenon. Selective attention operates in all sensory modalities.

VOLUNTARY VISUOSPATIAL ATTENTION

The P1 wave is a sensory wave generated by neural activity in the visual cortex and, therefore, its sensitivity to spatial attention supports early-selection models of attention.

Single neuron studies: selective spatial attention affected the firing rates of V4 neurons (in monkeys).

Different neurons have different receptive field properties: some are called simple cells, others complex cells. Simple cells exhibit orientation tuning and respond to contrast borders; they are situated in V1 and are active relatively early in the hierarchy of neural processing.

Visuospatial attention modulates the blood flow in the visual cortex, and these hemodynamic changes could be related to the attention effects observed on the P1 wave of the ERP.

Visuospatial attention can influence stimulus processing at many stages of cortical visual processing from V1 to the IT cortex.

The biased competition model accounts for attentional effects at the single-neuron level: It predicts that the neuronal response to simultaneously presented stimuli is a weighted average of the response to isolated stimuli, and that attention biases the weights in favor of the attended stimulus.

When different stimuli in a visual scene fall within the receptive field of a visual neuron, the bottom-up signals from the two stimuli compete to control the neuron’s firing. Attention can help resolve this competition by favoring one stimulus.

Given that the sizes of neuronal receptive fields increase as you go higher in the visual hierarchy, there is a greater chance for competition between different stimuli within a neuron’s receptive field and, therefore, a greater need for attention to help resolve the competition.
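The weighted-average prediction of the biased competition model can be written out as a short sketch. The firing rates and weights are hypothetical; only the form of the computation (a weighted average whose weights shift toward the attended stimulus) comes from the description above.

```python
def paired_response(rate_a, rate_b, weight_a):
    """Predicted firing rate when stimuli A and B share one receptive field.

    rate_a, rate_b: responses (spikes/s) to each stimulus presented alone.
    weight_a: weight given to stimulus A, between 0 and 1; B gets the rest.
    """
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must lie between 0 and 1")
    return weight_a * rate_a + (1.0 - weight_a) * rate_b


PREFERRED_ALONE = 40.0      # hypothetical response to the preferred stimulus
NONPREFERRED_ALONE = 10.0   # hypothetical response to the non-preferred stimulus

# No attentional bias: equal weights, the response lies midway between the two.
print(paired_response(PREFERRED_ALONE, NONPREFERRED_ALONE, 0.5))  # 25.0
# Attention biases the weights toward whichever stimulus is attended.
attend_preferred = paired_response(PREFERRED_ALONE, NONPREFERRED_ALONE, 0.8)
attend_nonpreferred = paired_response(PREFERRED_ALONE, NONPREFERRED_ALONE, 0.2)
print(round(attend_preferred, 1), round(attend_nonpreferred, 1))  # 34.0 16.0
```

With the attended stimulus weighted more heavily, the neuron responds almost as if that stimulus were presented alone.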

SUBCORTICAL ATTENTION EFFECTS

Neuronal projections extend from the visual cortex (V1 neurons) back to the thalamus. These projections synapse on neurons in the perigeniculate nucleus (thalamic reticular nucleus, TRN) that surrounds the lateral geniculate nucleus (LGN). The connections modulate information flow from the thalamus to the cortex.

Research shows that highly focused visuospatial attention can modulate activity in the thalamus. TRN neurons synapse with the LGN neurons with inhibitory signals.

Attention involves either activating or inhibiting signal transmission from the LGN to the visual cortex via the TRN circuitry. Either a descending neural signal from the cortex or a separate signal from subcortical inputs travels to the TRN neurons. These inputs to the TRN can excite the TRN neurons, thereby inhibiting information transmission from LGN to the visual cortex; alternatively, the inputs can suppress the TRN neurons, thus increasing transmission from LGN to the visual cortex. The latter mechanism is consistent with the increased neuronal responses observed for the neurons in LGN and V1 when coding the location of an attended stimulus.
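The gating role of the TRN can be sketched as an inhibitory term subtracted from the LGN signal. The subtractive form, the parameter names, and all values are assumptions made for illustration; the sketch only captures the direction of the effects described above.

```python
def lgn_to_v1_transmission(lgn_drive, trn_activity, inhibition_weight=1.0):
    """Signal passed from LGN to visual cortex after TRN inhibition.

    The TRN inhibits LGN neurons, so higher TRN activity means less
    transmission. Transmission cannot drop below zero.
    """
    return max(0.0, lgn_drive - inhibition_weight * trn_activity)


LGN_DRIVE = 1.0        # hypothetical retinal drive to the LGN
BASELINE_TRN = 0.5     # hypothetical resting TRN activity

baseline = lgn_to_v1_transmission(LGN_DRIVE, BASELINE_TRN)
# Inputs that excite the TRN reduce transmission to the cortex.
trn_excited = lgn_to_v1_transmission(LGN_DRIVE, 0.8)
# Inputs that suppress the TRN increase transmission (the attended location).
trn_suppressed = lgn_to_v1_transmission(LGN_DRIVE, 0.2)

print(trn_excited < baseline < trn_suppressed)  # True
```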

REFLEXIVE VISUOSPATIAL ATTENTION

Reflexive attention is activated by stimuli that are salient (conspicuous) in some way. The more salient the stimulus, the more easily our attention is captured.

Reflexive cueing (exogenous cueing) studies examine how a task-irrelevant event somewhere in the visual field, like a flash of light, affects the speed of responses to subsequent task-relevant target stimuli that might appear at the same or some other location.

Inhibition of return (IOR): when more than about 300 ms pass between the task-irrelevant light flash and the target, the response to stimuli is slower when they appear in the vicinity of where the flash has been.

Early occipital P1 wave is larger for targets that quickly follow a sensory cue at the same location, versus trials in which the sensory cue and target occur at different locations. As the time after cuing grows longer, however, this effect reverses and the P1 response diminishes, and may even be inhibited, just as in measurements of reaction time.

This shows that both reflexive (stimulus-driven) and voluntary (goal-directed) shifts in spatial attention induce similar physiological modulations in early visual processing.

VISUAL SEARCH

How are voluntary and reflexive spatial attention mechanisms related to visual search?

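Pop-out: when the target can be identified by a single feature, it stands out from the distractors, and search time is largely independent of the number of distractors.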

Conjunction search: If the target shares features with the distractors, it cannot be distinguished by a single feature and thus the time it takes to determine whether the target is present or absent in the array increases with the number of distractors in the array.

Feature integration theory of attention: spatial attention must be directed to relevant stimuli in order to integrate the features into the perceived object, and it must be deployed in a sequential (serial) manner for each item in the array.
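The contrast between pop-out and serial conjunction search can be sketched as a simple reaction-time prediction. This is a minimal illustration assuming a serial, self-terminating search; the function names and the timing parameters (`base_ms`, `per_item_ms`) are hypothetical and are not values from the literature.

```python
def expected_items_inspected(set_size, search_type, target_present=True):
    """Average number of items attended before a response can be made."""
    if search_type == "pop-out":
        return 1.0  # a single feature identifies the target, regardless of set size
    if search_type == "conjunction":
        if target_present:
            # Serial self-terminating search: on average half the array is checked.
            return (set_size + 1) / 2
        return float(set_size)  # target absent: every item must be checked
    raise ValueError(f"unknown search type: {search_type}")


def predicted_rt_ms(set_size, search_type, target_present=True,
                    base_ms=400.0, per_item_ms=50.0):
    """Predicted reaction time under the assumed (hypothetical) timing parameters."""
    items = expected_items_inspected(set_size, search_type, target_present)
    return base_ms + per_item_ms * items


for n in (4, 8, 16):
    print(n, predicted_rt_ms(n, "pop-out"), predicted_rt_ms(n, "conjunction"))
```

The pop-out prediction stays flat as the array grows, while the conjunction prediction rises with every added distractor, which is the pattern the feature integration theory is meant to explain.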

Conjunction search affects the P1 wave in much the same way that cued spatial attention does. This suggests that spatial attention is employed during visual search and modulates early cortical visual processing.

FEATURE ATTENTION

Does spatial attention automatically move freely from item to item until the target is located, or does visual information in the array help guide the movements of spatial attention among the array items? Researchers compared spatial attention and feature attention in a voluntary cueing paradigm. Prior knowledge from the cue produced the typical voluntary cueing effect for spatial attention: participants were more accurate at detecting the presence of the target at the cued location compared to when the cue did not signal one location over another.

Spatial attention and feature attention are processed independently: the two types of cue produced different ERP patterns.

Selective attention, in modality-specific cortical areas, alters the perceptual processing of inputs before the completion of feature analysis.

fMRI studies identified specialized areas of the human visual cortex that process features (motion/color/shape).

Feature-based selective attention acts at relatively early stages of visual cortical processing, with relatively short latencies after stimulus onset. Spatial attention is faster still: compared with spatial attention, feature-based attention effects occur at longer latencies (about 100 ms after stimulus onset) and at later stages of the visual hierarchy (extrastriate cortex). Feature attention may provide the first signal that triggers spatial attention to focus on a location.

OBJECT ATTENTION

Can attention also act on higher-order stimulus representations? When searching for objects we look for conjunctions of features called object properties, elementary stimuli that when combined yield an identifiable object or person. Behavioral work has demonstrated evidence for object-based attention mechanisms.

The spread of attention is facilitated within the confines of an object, or there is an additional cost to moving attention between objects, or both.

The presence of objects influences the way spatial attention is allocated in space: In essence, attention spreads within the object, thereby leading to some activity for uncued locations on the object as well.

Attention facilitates the processing of all the features of the attended object, and attending one feature can facilitate the object representation in object-specific regions such as the FFA.

Even when spatial attention is not involved, object representations can be the level of perceptual analysis affected by goal-directed attentional control.

7.5 ATTENTIONAL CONTROL NETWORKS

Attention can be either goal-directed (top-down) or stimulus-driven (bottom-up).

Top-down control: neuronal projections from attentional control systems contact neurons in sensory-specific cortical areas to alter their excitability. The response in the sensory areas to a stimulus may be enhanced if the stimulus is given high priority, or attenuated if it is irrelevant to the current goal.

Attentional control systems are involved in modulating thoughts and actions, as well as sensory processes. Current models of attentional control suggest that two separate cortical systems are at play in supporting different attentional operations during selective attention.

Dorsal attention network: primarily concerned with voluntary attention based on spatial location, features, and object properties

Ventral attention network: stimulus novelty/salience

These two subsystems interact and cooperate to produce behavior. This interaction is disrupted in neglect.

DORSAL ATTENTION NETWORK

The dorsal attention network is active when we engage in voluntary attention. The dorsal frontoparietal attention network is bilateral and includes the superior frontal cortex, inferior parietal cortex (located in the posterior parietal lobe), superior temporal cortex, and portions of the posterior cingulate cortex and insula.

Key cortical nodes: frontal eye fields (FEFs, located at the junction of the precentral and superior frontal sulcus in each hemisphere) and the supplementary eye fields (SEFs) in the frontal cortex; the intraparietal sulcus (IPS), superior parietal lobule (SPL), and precuneus (PC) in the posterior parietal lobe; and related regions.

The network was active only when the participants were instructed (cued) to covertly attend either right or left locations.

When the targets appeared after the cue, a different pattern of activity was observed.

When participants were only passively viewing the presented cues, the frontoparietal brain regions were not activated, even though the visual cortex was engaged in processing the visual features of the passively viewed cues.

FRONTAL CORTEX AND ATTENTIONAL CONTROL

The frontal cortex (source) has a modulatory influence on the visual cortex (site).

FEF: Researchers stimulated FEF neurons with very low currents that were too weak to evoke saccadic eye movements, but strong enough to bias the selection of targets for eye movements. This indicates that the dorsal system is involved in generating task-specific, goal-directed attentional control signals.

Inferior frontal junction: plays an important role in the top-down control of feature-based attention.

PARIETAL CORTEX AND CONTROL OF ATTENTION

The areas along the intraparietal sulcus (IPS) and the superior parietal lobule (SPL) in the posterior parietal lobe are the other major cortical regions that belong to the dorsal attention network.

IPS (LIP): involved in saccadic eye movements and in visuospatial attention. It is concerned with the location and salience of objects.

Activity in the LIP provides a salience or priority map. A salience map combines the maps of different individual features (color, orientation, movement) of a stimulus, resulting in an overall topographic map that shows how conspicuous a stimulus is, compared to those surrounding it.
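The idea of combining feature maps into one salience map can be sketched as follows. This is an illustrative toy, not a model of LIP: the normalization to the range 0 to 1, the equal-weight averaging, the function names, and the small 3x3 example maps are all assumptions.

```python
def normalize(feature_map):
    """Rescale one feature map to the range 0..1 so the maps are comparable."""
    values = [v for row in feature_map for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0.0 for _ in row] for row in feature_map]  # flat map: nothing stands out
    return [[(v - lo) / (hi - lo) for v in row] for row in feature_map]


def salience_map(feature_maps):
    """Average the normalized feature maps location by location."""
    normalized = [normalize(m) for m in feature_maps]
    n_rows, n_cols = len(normalized[0]), len(normalized[0][0])
    return [[sum(m[r][c] for m in normalized) / len(normalized)
             for c in range(n_cols)]
            for r in range(n_rows)]


def most_salient_location(smap):
    """Return the (row, column) of the highest salience value."""
    locations = [(r, c) for r in range(len(smap)) for c in range(len(smap[0]))]
    return max(locations, key=lambda rc: smap[rc[0]][rc[1]])


# Hypothetical feature maps over a 3x3 patch of the visual field.
color = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
orientation = [[1, 1, 1], [1, 5, 1], [1, 1, 2]]
motion = [[0, 0, 0], [0, 0, 0], [0, 0, 3]]

smap = salience_map([color, orientation, motion])
peak = most_salient_location(smap)
print(peak)
```

In this example the center location differs from its surroundings in both color and orientation, so it ends up with the highest combined salience even though the moving item in the corner wins on the motion map alone.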

VENTRAL ATTENTION NETWORK

The ventral network is strongly lateralized to the right hemisphere and includes the posterior parietal cortex of the temporoparietal junction (TPJ) and the ventral frontal cortex (VFC) made up of the inferior and middle frontal gyri.

The dorsal and ventral networks interact with one another. The dorsal attention network, with its salience maps in the parietal cortex, provides the TPJ with behaviorally relevant information about stimuli, such as their visual salience. Together, the dorsal and ventral attention networks cooperate to make sure attention is focused on behaviorally relevant information, with the dorsal attention network focusing attention on relevant locations and potential targets, and the ventral attention network signaling the presence of salient, unexpected, or novel stimuli, enabling us to reorient the focus of our attention.

SUBCORTICAL COMPONENTS OF ATTENTIONAL CONTROL NETWORKS

Subcortical networks that include the superior colliculi and the pulvinar of the thalamus are related to attentional processes, as damage in these areas can produce deficits in attention.

Superior Colliculus: midbrain structure made up of many layers of neurons, receiving direct inputs from the retina and other sensory systems, as well as from the basal ganglia and the cerebral cortex (receiving input primarily from the contralateral side of space). It projects multiple outputs to the thalamus and the motor system that, among other things, control the eye movements involved in changing the focus of overt attention. It also appears to be involved in inhibitory processes during visual search.

Pulvinar of the thalamus: Located in a posterior region of the thalamus, it is composed of several cytoarchitectonically distinct subnuclei, each of which has specific inputs and outputs to cortical areas of all the lobes.
