L2_tools

Frameworks

TensorFlow, Keras, and PyTorch are widely used deep learning frameworks. Models are usually defined and configured in Python, while the heavy computation runs in C++/CUDA backends for performance. Trained models can also be deployed from other languages through bindings, including Haskell, C#, Julia, Java, R, Ruby, Rust, Scala, and Perl. NumPy is useful for numerical work alongside these frameworks but is not strictly required.

This Week's Tasks:

Set up a deep learning environment (refer to GitHub for setup details).

Study Chapter 12 (pages 403-411).

Review TensorFlow basics, focusing on core concepts like tensors and operations.

Get an introduction to tensors, their properties, and uses.

Read Chapter 14 to delve deeper into specific topics.

Computing Resources

Deep learning is computationally intensive, so use a hardware accelerator such as a GPU. Options include:

Personal computers equipped with NVIDIA GPUs, or M-series Macs.

Cloud services such as Google Colab (free T4 GPUs) and Kaggle Notebooks (free P100 GPUs), both subject to usage limits.

Research group hardware, if you are affiliated with a group at HVL/UiB, for more demanding setups.
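Before training, it is worth checking which accelerators TensorFlow can actually see. The sketch below uses `tf.config.list_physical_devices` and `tf.test.is_built_with_cuda`. On M-series Macs the GPU only shows up if a plugin such as `tensorflow-metal` is installed; that setup is an assumption here, not something these notes specify.

```python
import tensorflow as tf

# List the GPUs TensorFlow can use; an empty list means it will run on the CPU only.
gpus = tf.config.list_physical_devices("GPU")
print(f"Visible GPUs: {len(gpus)}")
for gpu in gpus:
    print(gpu.name)

# Whether this TensorFlow build was compiled with CUDA (NVIDIA GPU) support.
print("Built with CUDA:", tf.test.is_built_with_cuda())
```

On Colab or Kaggle, an empty list usually means the notebook's accelerator setting is still set to CPU.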

Low-Level TensorFlow

TensorFlow is similar to NumPy but adds GPU support and just-in-time (JIT) compilation via XLA. Its core objects are tensors: multidimensional arrays with a fixed data type and shape. Here is the same 2x3 matrix in NumPy and in TensorFlow:

```python
import numpy as np
import tensorflow as tf

# NumPy array
x = np.array([[1, 2, 3], [4, 5, 6]], dtype=np.float32)
print(x)

# Equivalent TensorFlow tensor
x = tf.constant([[1, 2, 3], [4, 5, 6]], dtype=tf.float32)
print(x)
```
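Because the two libraries are so close, you often convert between them. The following minimal sketch converts an array with `tf.convert_to_tensor`, converts it back with `.numpy()` (eager mode), and passes a NumPy array directly to a TensorFlow op. The variable names are illustrative.

```python
import numpy as np
import tensorflow as tf

x_np = np.array([[1, 2, 3], [4, 5, 6]], dtype=np.float32)

# NumPy array -> TensorFlow tensor (dtype and shape are preserved)
x_tf = tf.convert_to_tensor(x_np)

# TensorFlow tensor -> NumPy array (works in eager mode)
back = x_tf.numpy()

# TensorFlow ops accept NumPy arrays directly and return tensors
doubled = tf.multiply(x_np, 2.0)

print(x_tf.dtype, x_tf.shape)
print(back)
print(doubled)
```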

Tensors and Variables

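Tensors are immutable: once created, their values cannot be changed, which makes them suitable for input data. Variables are mutable and hold values that are updated during training, such as model weights. Example:

```python
import tensorflow as tf

# Use an identifier-style name (letters, digits, underscores)
y = tf.Variable([[1, 2, 3], [4, 5, 6]], dtype=tf.float32, name="my_first_variable")

# Update a single element in place
y[0, 1].assign(50.0)
print(y)
```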
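To see the difference in practice, the sketch below tries to modify a constant tensor in place, which raises a `TypeError`. It then shows two alternatives: building a new tensor with `tf.tensor_scatter_nd_update`, and updating a `tf.Variable` in place with `assign_add`. The values are arbitrary.

```python
import tensorflow as tf

t = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

# Item assignment on an immutable tensor fails.
try:
    t[0, 1] = 50.0
    immutable = False
except TypeError as err:
    immutable = True
    print("Cannot modify a tf.Tensor:", err)

# A tensor can only be "changed" by creating a new one.
t_new = tf.tensor_scatter_nd_update(t, indices=[[0, 1]], updates=[50.0])

# A variable is updated in place, as an optimizer does with weights.
v = tf.Variable(t)
v.assign_add(tf.ones_like(t))  # every element increases by 1
```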

Simple Operations

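TensorFlow overloads Python's arithmetic operators for element-wise addition and multiplication, and for matrix multiplication:

```python
import tensorflow as tf

b = tf.constant([[1.0, 2.0], [3.0, 4.0]])
c = tf.constant([[5.0, 6.0], [7.0, 8.0]])

a = b + c  # Element-wise addition
a = b * c  # Element-wise multiplication (Hadamard product)
a = b @ c  # Matrix multiplication
```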
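The operators are shorthand for explicit functions, and the two kinds of multiplication have different shape requirements. The sketch below uses a hypothetical 2x3 matrix and its 3x2 transpose. Matrix multiplication of the pair works, but element-wise multiplication fails because the shapes are neither equal nor broadcastable.

```python
import tensorflow as tf

b = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])  # shape (2, 3)
c = tf.transpose(b)                                  # shape (3, 2)

# Explicit function forms of the operators
prod = tf.linalg.matmul(b, c)   # same as b @ c, shape (2, 2)
hadamard = tf.multiply(b, b)    # same as b * b, shape (2, 3)
total = tf.add(b, b)            # same as b + b, shape (2, 3)

# Element-wise ops need equal (or broadcastable) shapes; (2, 3) vs (3, 2) is neither.
try:
    b * c
    shapes_ok = True
except tf.errors.InvalidArgumentError:
    shapes_ok = False
```

Here `prod` is `[[14, 32], [32, 77]]`: each entry is the dot product of a row of `b` with a column of `c`.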

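Reductions work as in NumPy but have different names, for example `tf.math.reduce_sum` instead of `np.sum`. The `axis` argument selects the dimension to collapse: `axis=0` sums down the columns, `axis=1` sums across the rows, and `axis=None` sums over all elements.

```python
import tensorflow as tf

x = tf.constant([[1, 2, 3], [4, 5, 6]])
print(x)

tf.math.reduce_sum(x, axis=0)     # Output: <tf.Tensor: shape=(3,), dtype=int32, numpy=array([5, 7, 9], dtype=int32)>
tf.math.reduce_sum(x, axis=1)     # Output: <tf.Tensor: shape=(2,), dtype=int32, numpy=array([ 6, 15], dtype=int32)>
tf.math.reduce_sum(x, axis=None)  # Output: <tf.Tensor: shape=(), dtype=int32, numpy=21>
```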
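Here is a side-by-side sketch of NumPy and TensorFlow reductions on the same data. Besides the naming, one practical difference is that `tf.math.reduce_mean` keeps the integer dtype of its input, so the mean is truncated, while `np.mean` returns floats. Casting to a float type first avoids this.

```python
import numpy as np
import tensorflow as tf

x_np = np.array([[1, 2, 3], [4, 5, 6]])
x_tf = tf.constant([[1, 2, 3], [4, 5, 6]])  # int32

# Same reduction, different names
np_sum = np.sum(x_np, axis=0)               # [5 7 9]
tf_sum = tf.math.reduce_sum(x_tf, axis=0)   # [5 7 9]

# keepdims=True keeps the reduced axis with size 1
tf_sum_kept = tf.math.reduce_sum(x_tf, axis=1, keepdims=True)  # shape (2, 1)

# Pitfall: the integer dtype is kept, so the mean is truncated
np_mean = np.mean(x_np, axis=0)                    # [2.5 3.5 4.5]
tf_mean_int = tf.math.reduce_mean(x_tf, axis=0)    # [2 3 4]
tf_mean = tf.math.reduce_mean(tf.cast(x_tf, tf.float32), axis=0)  # [2.5 3.5 4.5]
```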

Shapes and Broadcasting

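Broadcasting in TensorFlow follows the same rules as in NumPy. Shapes are compared from the last dimension backwards, and a dimension of size 1 (or a missing one) is stretched to match the other tensor. In the simplest case, a scalar is added to every element:

```python
import tensorflow as tf

x = tf.constant([1, 2, 3], dtype=tf.float32)
x + 1  # Output: <tf.Tensor: shape=(3,), dtype=float32, numpy=array([2., 3., 4.], dtype=float32)>
```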

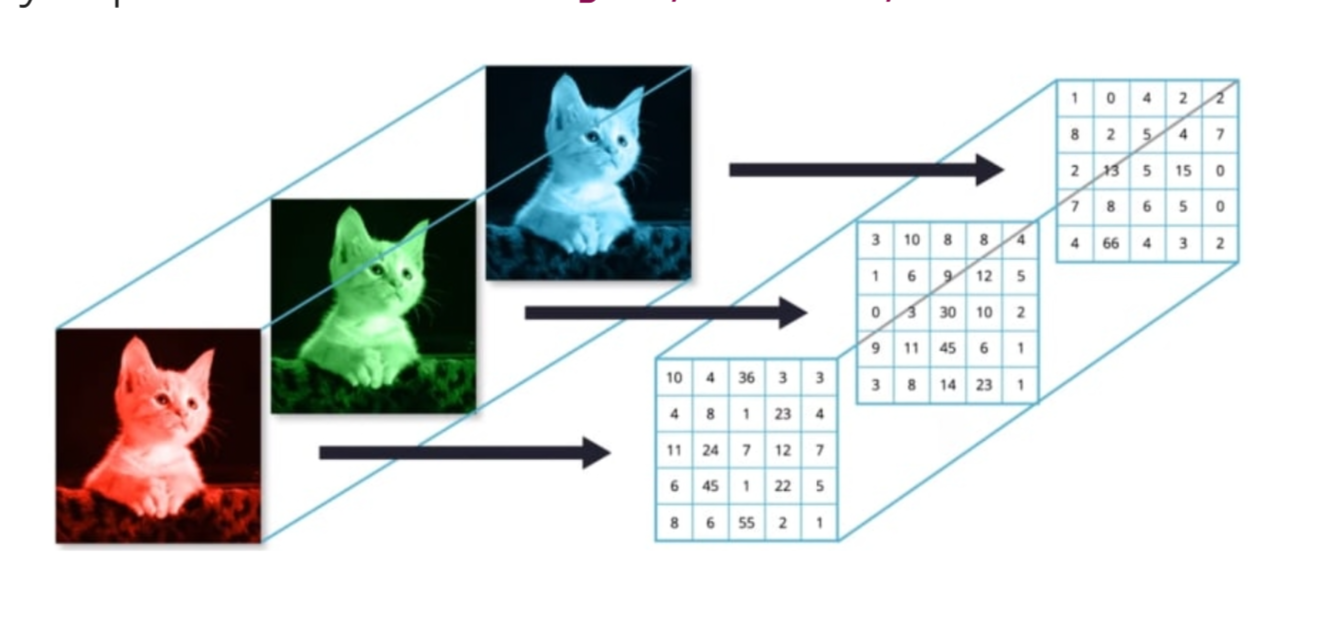
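Broadcasting also works between tensors of higher rank. In the sketch below (values chosen only for illustration), a row vector is stretched over the rows of a matrix and a column vector over its columns. Adding a column to a row produces a full grid. `tf.broadcast_static_shape` computes the resulting shape without performing the operation.

```python
import tensorflow as tf

m = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])  # shape (2, 3)
row = tf.constant([10.0, 20.0, 30.0])                # shape (3,): repeated for each row
col = tf.constant([[100.0], [200.0]])                # shape (2, 1): repeated for each column

with_row = m + row   # [[11, 22, 33], [14, 25, 36]]
with_col = m + col   # [[101, 102, 103], [204, 205, 206]]

# (2, 1) + (1, 3) -> (2, 3): both operands are stretched
grid = tf.constant([[1.0], [2.0]]) + tf.constant([[10.0, 20.0, 30.0]])

result_shape = tf.broadcast_static_shape(tf.TensorShape([2, 1]), tf.TensorShape([1, 3]))
print(result_shape)
```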

Image Shapes and Minibatches

Images are represented in the format [height, width, channel].

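Minibatch gradient descent adds a leading batch dimension: [batch, height, width, channel]. To pass a single image to a model that expects a batch, add a batch dimension of size 1 with `tf.expand_dims`, and remove it again with `tf.squeeze`:

```python
import tensorflow as tf

img = tf.zeros([24, 24, 3])   # a single 24x24 RGB image

img = tf.expand_dims(img, 0)  # [24, 24, 3] -> [1, 24, 24, 3]
img = tf.squeeze(img)         # [1, 24, 24, 3] -> [24, 24, 3]
```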
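The sketch below builds a real minibatch from several images and shows equivalent ways of adding or removing the batch axis. The three random 24x24 RGB images are made up for illustration. It also shows a subtlety of `tf.squeeze`: without an `axis` argument it removes every size-1 dimension, so for a grayscale image with one channel, `axis=0` is the safer choice.

```python
import tensorflow as tf

# Three hypothetical 24x24 RGB images
images = [tf.random.uniform([24, 24, 3]) for _ in range(3)]

# tf.stack adds a new leading batch axis: 3 x [24, 24, 3] -> [3, 24, 24, 3]
batch = tf.stack(images, axis=0)

first = batch[0]            # indexing drops the batch axis: [24, 24, 3]
first_as_batch = batch[0:1] # slicing keeps it: [1, 24, 24, 3]

# Equivalent to tf.expand_dims(images[0], 0)
single = images[0][tf.newaxis, ...]  # [1, 24, 24, 3]

# Squeezing a grayscale batch of one
gray = tf.zeros([1, 24, 24, 1])
all_squeezed = tf.squeeze(gray)           # [24, 24]: the channel axis is gone too
batch_squeezed = tf.squeeze(gray, axis=0) # [24, 24, 1]: only the batch axis is removed
```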

GPU Usage

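By default, TensorFlow places each operation on a GPU if one is available and otherwise on the CPU. To control placement explicitly, wrap the code in a `tf.device` context. Here the variables are created on the CPU and the multiplication is requested on the first GPU:

```python
import tensorflow as tf

# Create the variables in host (CPU) memory
with tf.device('CPU:0'):
    a = tf.Variable([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])  # shape (2, 3)
    b = tf.Variable([[1.0, 2.0, 3.0]])                    # shape (1, 3)

# Run the (broadcast) element-wise multiplication on the first GPU
with tf.device('GPU:0'):
    k = a * b

print(k)
```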
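Hard-coding `'GPU:0'` assumes a GPU is present. The following sketch picks the device at runtime instead, falling back to the CPU. Every eager tensor records the device that produced it in its `.device` attribute, so you can check where the work actually ran. The matrix size is arbitrary.

```python
import tensorflow as tf

# Use the first GPU if TensorFlow can see one, otherwise the CPU
device = "GPU:0" if tf.config.list_physical_devices("GPU") else "CPU:0"

with tf.device(device):
    a = tf.random.uniform([256, 256])
    k = a @ a

# A full device string ending in device:GPU:0 or device:CPU:0
print(k.device)
```

To log the placement of every operation, call `tf.debugging.set_log_device_placement(True)` at the start of the program.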

Automatic Differentiation

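TensorFlow computes derivatives with automatic differentiation. Operations executed inside a `tf.GradientTape` context are recorded, and `tape.gradient` then computes the derivative of the output with respect to the watched variables. For example, the derivative of f(x) = x^2 + 2x - 5 at x = 1:

```python
import tensorflow as tf

def f(x):
    return x**2 + 2*x - 5

x = tf.Variable(1.0)  # trainable variables are watched by the tape automatically

with tf.GradientTape() as tape:
    y = f(x)

d_dx = tape.gradient(y, x)
print(d_dx)  # tf.Tensor(4.0, shape=(), dtype=float32)
```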

This computes the derivative 2x + 2, which evaluates to 4 at x=1.
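Tapes can be nested to obtain higher-order derivatives. The result can also be checked numerically. The sketch below computes f''(x) = 2 by differentiating the first derivative again with an outer tape. It then compares the tape's first derivative with a central finite difference; the step size `h` is an illustrative choice suited to float32 precision.

```python
import tensorflow as tf

def f(x):
    return x**2 + 2*x - 5

x = tf.Variable(1.0)

# The outer tape records the computation of the first derivative
with tf.GradientTape() as outer:
    with tf.GradientTape() as inner:
        y = f(x)
    dy_dx = inner.gradient(y, x)    # 2x + 2 = 4.0
d2y_dx2 = outer.gradient(dy_dx, x)  # 2.0

# Central finite difference (f(x + h) - f(x - h)) / 2h as a sanity check
h = 1e-2
numeric = (f(x + h) - f(x - h)) / (2 * h)
```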

Keras Framework

The Keras framework offers high-level components for neural network construction and training. Key modules include:

keras.layers: Contains various layer types and activation functions.

keras.callbacks: Used to monitor, modify, or halt the training process based on specified conditions.

keras.optimizers: Provides optimization algorithms such as Adam or SGD.

keras.metrics: Includes performance metrics like accuracy and loss.

keras.losses: Defines loss functions for evaluating model performance.

keras.datasets: Features small datasets for experimentation and testing.

keras.applications: Offers pre-trained networks suited for a range of tasks.
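The following minimal sketch shows how several of these modules fit together in one training run. The toy data are invented for illustration: 200 random samples with 4 features, labeled 1 when the feature sum is positive. The layer sizes, learning rate, and patience are arbitrary choices. `keras.datasets` and `keras.applications` are left out because they download data or weights.

```python
import numpy as np
from tensorflow import keras

# Hypothetical toy data for binary classification
rng = np.random.default_rng(0)
x_train = rng.normal(size=(200, 4)).astype("float32")
y_train = (x_train.sum(axis=1) > 0).astype("float32").reshape(-1, 1)

# keras.layers: a small fully connected network
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])

# keras.optimizers, keras.losses, keras.metrics
model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=1e-2),
    loss=keras.losses.BinaryCrossentropy(),
    metrics=[keras.metrics.BinaryAccuracy()],
)

# keras.callbacks: stop if the training loss has not improved for 3 epochs
early_stop = keras.callbacks.EarlyStopping(monitor="loss", patience=3)

history = model.fit(x_train, y_train, epochs=20, batch_size=32,
                    callbacks=[early_stop], verbose=0)
print(history.history["binary_accuracy"][-1])
```

`model.fit` returns a `History` object whose `history` dictionary holds one value per epoch for the loss and each metric.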