Day #4 - Computation

Models of Computation

RASP model: Random access stored program model

Memory Access: Assumes random access memory (RAM), you can directly access any memory location in constant time

Stored Program: The program instructions are stored in memory just like data. This allows for self-modifying code (program can change its instructions while running)

Instruction Set: The model includes basic operations like reading/writing memory, arithmetic operations (addition, subtraction, etc.), and conditional jumps

Turing Complete: The RASP model is equivalent to a Turing machine, meaning it can compute anything that is computable

Registers: Assumes a small number of registers for temporary storage (indexes), but the actual instructions reside in main memory

Markov Algorithms:

Definition: Apply a set of rules to a string of characters until no more rules can be applied. This approach allows for the manipulation and transformation of strings in a systematic manner.

Example:

String: "abc"

Rules:

Replace "a" with "ab"

Replace "b" with "c"

Replace "c" with "a"

Process: "abc" → "abbc" → "abcc" → "abaa"

Lambda Calculus:

Definition: Mathematical logic system for functions using lambda expressions to define and apply functions.

Basic Operations: Create functions (abstraction), apply functions (application), rename variables (renaming).

Example:

Function: λx.x+1

Apply to 2: (λx.x+1) 2 → 2+1 → 3

Turning Machines (Finite automata):

Finite Automata:

Simple model, reads input, changes state based on rules.

Turing Machine:

More powerful model with an infinite tape and a head that reads, writes, and moves along the tape.

Example:

Tape: "1101"

Robot (Turing Machine) reads the tape and changes states according to rules.

Rule: If the symbol is "1" and the state is "A", change to state "B" and move right.

Process:

Reads "1" (state "A"), changes to state "B", moves right.

Reads "1" (state "B"), changes to state "C", moves right.

Reads "0" (state "C"), and so on.

The Chomsky Hierarchy

The Chomsky Hierarchy is a classification of different types of formal grammars in computational theory. It includes four levels, from the simplest to the most complex.

Type 0: Recursively Enumerable Languages

Definition: The most general type, where the grammar can generate any language that a Turing machine can recognize.

Example: A grammar that can generate the language of all strings where the number of 1s equals the number of 0s (e.g., "0011", "1100").

Type 1: Context-Sensitive Languages

Definition: Grammars where rules are applied depending on the context of the nonterminal symbols.

Example: A grammar that ensures all "a"s are followed by "b"s, and the number of "a"s matches the number of "b"s (e.g., "aabb", "aaabbb").

Type 2: Context-Free Languages

Definition: Grammars where rules are applied regardless of the context of the nonterminal symbols.

Example: A grammar that generates balanced parentheses (e.g., "()", "(())", "()()").

Type 3: Regular Languages

Definition: The simplest type, where rules are very restricted and can be represented by finite automata.

Example: A grammar that generates strings of "a"s followed by "b"s (e.g., "ab", "aabbb").

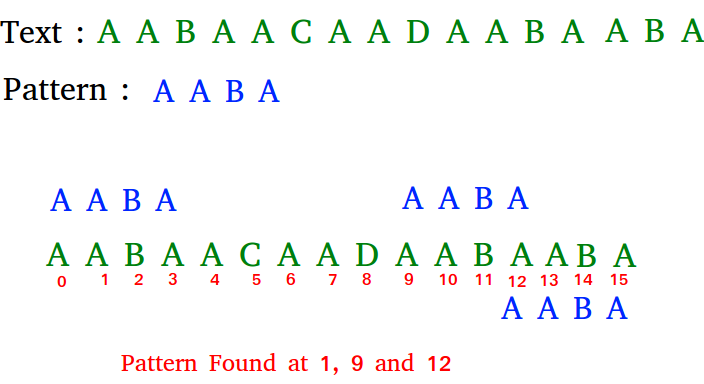

String Matching

String matching: Finding where a specific sequence of characters (pattern P) appears within a larger sequence (text T).

Goal: Locate all occurrences of pattern P in text T.

Applications:

Search engines: Helps in efficiently finding information.

DNA sequencing: Identifies specific genetic patterns.

Data compression: Enhances performance and accuracy by recognizing patterns.

Finite Automata

Finite Automata

Finite Automata: Models of computation used to recognize patterns within input sequences.

Transducers (informal): Devices that transform input sequences into output sequences, often used in applications such as text processing.

Mealy Machines: A type of finite state machine that produces outputs based on the current state and the current input.

Example: Vending machine system

Where the output (dispensing a product) directly depends on both the current state (amount of money inserted) and the input (button pressed).

Moore Machines: A type of finite state machine where the output is determined solely by the current state and not by the input sequence.

Example: Automatic light control system

In this case, the output (light on/off) depends only on the current state (people in room) regardless of any external inputs.

Transducers (formal): G-tuple ( Q, Σ, q, d, O, G)

Deterministic Finite Automaton (DFA)

DFA: A type of finite automaton.

Directed Graph (Digraph): Represents DFA as a graph where:

Nodes (States): Represent different conditions or statuses.

Edges (Transitions): Represent the movement from one state to another based on input characters.

Deterministic: Each state has exactly one outgoing edge for every letter in the alphabet (Σ).

Example:

Alphabet (Σ): {a, c, t, g}

Pattern (P): "AAC"

Process: DFA checks each letter in the input and follows the corresponding edge to a new state.

Simple DFA: If input is "AAGTC", the DFA will transition through states to check if "AAC" appears.

Nondeterministic Finite Automaton (NFA)

NFA: Another type of finite automaton.

Edges (Transitions):

Can transition on an empty string (λ), meaning the machine can change state without consuming any input.

Can have multiple edges for the same input character, allowing multiple possible transitions.

Example:

Alphabet (Σ): {a, c, t, g}

Pattern (P): "AAC"

Process: NFA can explore multiple paths at once. If one path leads to matching "AAC", it accepts the input.

Simple NFA: If input is "AAGTC", the NFA will consider different paths to check if "AAC" appears

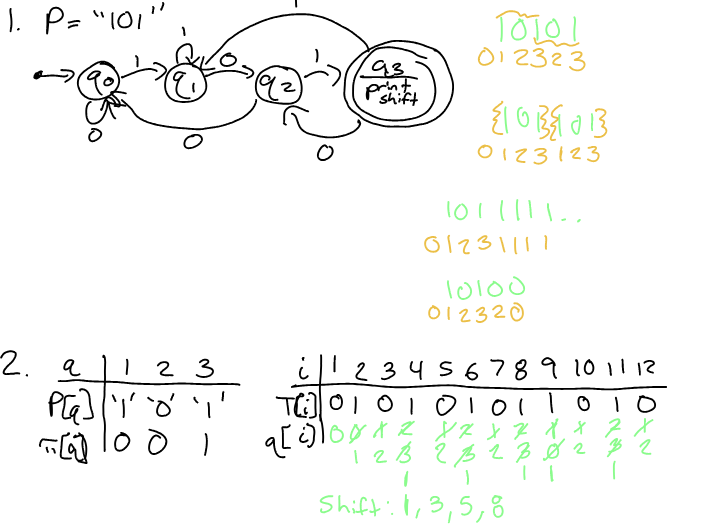

Knuth-Morris-Pratt

Prefix Function: Encapsulates the knowledge of how a pattern matches itself to avoid repeating work within the text

Languages and Grammar

Statement (word): A string of finite length of elements in a vocabulary

Vocabulary: a finite, nonempty set of symbols

Syntax: form of a statement

Semantics: meaning of a statement

Formal Language: Rules of syntax for organizing tokens syntactically-correct statements, with no ambiguity

Empty String: λ, ∈

All words over a vocabulary: v*

A language over a vocabulary: Lc v*

A phrase-structure grammar: G = (V,T,S,P)

V: Vocabulary

T: Terminal symbols | T c V

S: Start Symbol | S ∈ V

P: Productions: A Set of rules for converting nonterminal symbol into terminal symbols

N: Nonterminal symbols| N = V - T

Example:

G = {V, T, S, P} = V = {a, b, A, B, S}, T={a, b}

S = S, P = {S → ABa, A→BB, B→ab, AB→B}

L(G) = {“S → ABa → BBBa → abBBa → ababBa → abababa”, “S → ABa → ba”

L(G) ={ba,abababa}

L(G) = { w (word) ∈ T* (all sequences of foraminal) | S →* (arbitrary # of products) w

Linear Grammar

Definition: A type of formal grammar where each production rule has at most one non-terminal symbol on the right-hand side.

In right regular grammar, this placeholder appears on the right side of a rule (like finishing a sentence with a placeholder).

In left regular grammar, this placeholder appears on the left side of a rule (like starting a sentence with a placeholder).

Simple Example

Right Regular Example:

Rule: A→aB

Expansion: If "A" is the starting symbol, it can become "aB".

Left Regular Example:

Rule: A→Ba

Expansion: If "A" is the starting symbol, it can become "Ba".

Acceptors: recognizers: FAs with accepting states that recognize/decide if input strings belong to the language the FA recognizes/decides

Acceptor: 5-tuple ( Q, Σ, q, F, d)

See above for Q, Σ, q, F, and d definitions, which outline the components of the finite automaton used in this computation process.

F: accepting (finishing) states; F >= Q

Backus-Naur Form

BNF: Specifies type-2 (context form) grammar formally

“: : =” - in a production

<…> - wrapper for non-terminal symbols

| - Separates RHS production results

Example ALGOL 60 identifiers (i.e, variable names + other symbols)

<id> ::= <letter> | <id><letter> <id><digit>

<letter> ::= a | b | c | … | x | y | z

<digit> ::= 1 | 2 | 3 | … | 7 | 8 | 9

Produce x99a

<id> ::= <id><letter>

<letter> ::= a

<id> ::= <id><digit>

<digit> ::= 9

<id> ::= <id><digit>

<digit> ::= 9

<id> ::= <letter>

<letter> ::= x

ends to a → 9a → 99a → x99a

small problem - toy - give back problem from last quiz being a transducer and accepter of each