CogPsych 1a

65 Terms

What is the goal of cognitive psychology?

To develop formal theories about cognition that can be tested

What is a theory

a principle that explains a body of facts

set of sentences written in formal language from which things can be derived

specifies causal relations between states

must predict

must specify structures of interest and how things react between them (eg X exists because of Y, must be notable)

(eg evolution theory, how biological state arises as function of genetic sequence)

What is a theory NOT

a description

a set of data

not a diagram

how do we develop good theories of mind

good science = testable theories

understand intelligent systems

what is an intelligent system

a system that responds to its environment under some constraint or set of goals

must be able to adapt to changes in environment (eg lancet fluke therefore isn’t intelligent)

what are Marr’s three levels for understanding how a system works

1) computational theory

2) representation and algorithm

3) implementation

What is Marr’s computational level theory

describes what problem the system is solving (and why)

what are constraints on solution the system has

What is the problem getting solved / how is the function being computed

What is Marr’s representation and algorithm theory

how does it get from one state to other

identify things system is representing and how it represents that info

how it manipulates these things to turn input state into output state

what is the algorithm that the system is running?

take input to system, whats the output, what are the stages in between

What is Marr’s physical implementation theory

how are these representations and algorithms realised in the hardware of the device itself (eg neurons, hard drive)

What is the cash register example for Marr’s 3 levels

computational = what is the problem it is solving? arithmetic

Rep and Alg = representation by Arabic numerals, need to specify an operation

Implementation = 10 notch metal wheels turned by control structure

Does Marr argue that it is better to move down levels or up?

Down

eg washing machine in space

easier to know function first

What is the computational theory model?

some sort of functional state or functional transformation

describe the math transformation that takes it from input to output

eg your memory gets worse by 10% for every hour of sleep missed

What is a computational theory model example

learning via association - we are describing how this happens

Rescorla-Wagner model

describes associative strength between the US (i) and the CS (j)

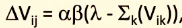

delta Vij is the change of strength of association between ij

a = salience of i= how easy (high) it is for system to detect i

b= salience of j

lambda is learnability of Vij

Vik is associative strength between US (i) and other CSs (k) which also predict i

how much the association Vij changes as a function of the co-occurrence of i and j in the environment; the change depends on how salient they are, how they are seen to be associated in the world, and how learnable Vij is against Vik (subtractive relation)

the more other CSs (Vik) already predict i, the harder it is for Vij to grow
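The update described above can be sketched in a few lines of Python. This is a minimal sketch that assumes the standard Rescorla-Wagner form, delta Vij = a * b * (lambda - sum of Vik), with the sum taken over every CS present on the trial (including j). The equation itself is only in the image, so the exact form is an assumption, and the function name `rw_update`, the stimulus names and the parameter values are made up for illustration.

```python
def rw_update(V, present, a=0.5, b=0.5, lam=1.0):
    """Return a new dict of associative strengths after one trial.

    V       -- dict mapping each CS name to its current strength with the US
    present -- CSs shown on this trial (all paired with the US)
    a, b    -- salience of the US and of the CS (assumed equal for every CS)
    lam     -- lambda, the maximum strength the US can support
    """
    total = sum(V.get(cs, 0.0) for cs in present)  # sum of Vik over present CSs
    delta = a * b * (lam - total)                  # same change for each present CS
    new_V = dict(V)
    for cs in present:
        new_V[cs] = V.get(cs, 0.0) + delta
    return new_V


# Train a tone alone, then tone + light together (a blocking design).
V = {}
for _ in range(50):
    V = rw_update(V, ["tone"])
for _ in range(50):
    V = rw_update(V, ["tone", "light"])
```

Because the tone already predicts the US almost perfectly, the term (lambda - sum of Vik) is close to zero by the time the light appears, so the light gains very little strength. This is the subtractive relation at work.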

What is the rep and alg theory model?

we have smth that specifies relationship between input and output

content (what are you representing, eg a chair has a certain list of features)

and format (how traits should be organised)

specifies algorithm for transforming input representations into output representations

-precise series of operations

What is a rep and alg model example

Rescorla-Wagner model

what is content of a - what does it mean to rep salience of smth and how is it represented

what is i? it is a symbol, holistic pattern, etc

what processes operate on a and how do these interact with other representations

What is a physical implementation model example

how do neurons behave, learn connections, learn associations

What does Newell argue?

cog psych deals with phenomena

we discover that people do X

we deal with phenomena as set of oppositions (eg is way we do X affected by x or ~x) (eg memory issue, is this affected by people wearing a red hat or a blue hat)

we need better constraints on how we ask these questions, we need formal situations to tell us what are the good questions- we need strong constraining theories

what is a model

a logical representation of a system in a formal language that describes, and is simpler than, the actual system

what is cognition

a set of processes that give rise to intelligent behaviour

a good theory of cognition will

account for the problems the mind is solving

account for how the mind solves those problems

Turing’s Machine

conceptually allows you to solve any problem that can be solved by following a precise procedure

anything that can be done in a finite number of precise steps can be done by a Turing machine

4 components of a Turing Machine

1) machine table

2) machine state

3) tape of unbounded length

4) read-write head

Turing machine- machine table

a list of instructions of the form “if in state x, and input is y, then do z”

program or algorithm

Turing machine- machine state

a current state of the machine, represented as x

Turing machine- tape of unbounded length

a tape divided into discrete parts, each part containing or with the ability to contain a 0 or a 1

unbounded in length (only a finite part is in use at any one time)

input and output of the machine

Turing machine-read-write head

device that can read current slot on tape, move tape forwards or backwards, and erase or write a 1 or 0 to slot on tape

what interacts with input and output of machine
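The four components can be put together in a short simulator. This is a rough sketch rather than a formal definition: the function name `run_turing_machine`, the state names `"start"` and `"halt"`, and the `max_steps` guard are assumptions added for illustration (a real Turing machine has no step limit).

```python
from collections import defaultdict


def run_turing_machine(table, tape, state="start", halt="halt", max_steps=1000):
    """Run a machine table on a tape of 0s and 1s and return the final tape.

    table -- dict: (state, symbol) -> (symbol_to_write, move, next_state),
             where move is -1 (left) or +1 (right)
    tape  -- list of 0/1 values; unvisited cells are treated as 0
    """
    cells = defaultdict(int, enumerate(tape))  # tape grows in either direction
    head = 0                                   # position of the read-write head
    for _ in range(max_steps):                 # guard against endless loops
        if state == halt:
            break
        # "if in state x, and input is y, then do z"
        write, move, state = table[(state, cells[head])]
        cells[head] = write
        head += move
    lo, hi = min(cells), max(cells)
    return [cells[i] for i in range(lo, hi + 1)]


# Machine table that adds one to a number written as a row of 1s:
# skip over the 1s, write a 1 on the first 0, then halt.
add_one = {
    ("start", 1): (1, +1, "start"),
    ("start", 0): (1, +1, "halt"),
}
result = run_turing_machine(add_one, [1, 1, 1])  # -> [1, 1, 1, 1]
```

The machine table is the program, `state` is the machine state, `cells` is the tape and `head` is the read-write head.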

what does mathematically random mean

the thing cannot be shortened

shortest description of thing is itself

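eg 10/3 = 3.3333... = 3.3 recurring is not random: "3 recurring" is shorter than writing out the digits

the digits of pi look patternless, but pi is not random in this sense: a short program can generate its digits, so it has a description shorter than itself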
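The idea that a random thing cannot be shortened can be illustrated with a file compressor. This is only a rough sketch: `zlib` is used here as a stand-in for "shortest description", and a practical compressor only gives an upper bound on how short a description can be. The variable names are made up for illustration.

```python
import os
import zlib

repeating = b"3" * 1000         # like 3.3333...: one short rule describes it all
patternless = os.urandom(1000)  # bytes from the operating system's random source

size_repeating = len(zlib.compress(repeating))
size_patternless = len(zlib.compress(patternless))
```

The repeating sequence shrinks to a small fraction of its 1000 bytes, while the patternless bytes stay at roughly their original size, because there is no regularity to exploit.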

Universal Turing Machine (UTM)

can imitate any Turing machine

eg programming languages, computers etc are UTMs

are minds UTMs?

minds are weaker than UTMs

mind only carries out actions that can be carried out in principle (tautological)

mind may be able to be imitated by a UTM

could in principle simulate mind as computer program

How do we ‘solve’ unsolvable problems

we use our own representations to guess what is going on - we use our solvable methods (previous ideas) to approximate solutions (heuristics) to problems

what is the computational theory of mind

view that the human mind is best understood as a computer system as it operates by performing computations

we represent and process info all the time, the algorithm is a specification for representing and manipulating it

what are computations

any type of info processing that can be repped mathematically

what is an information processing system

take input info, transforms into another state (output) via an algorithmic process

algorithm

formally describable procedure (can write it in lang of maths)

or a set of instructions for performing an operation in a finite number of steps

what is the goal of computational theory of mind

to figure out the mind's algorithms and the representations they operate on

what is an algorithmic account of mind

it specifies the means by which the mind represents info and the processes by which it manipulates these representations

what is the mind if not computational?

algorithmic as it still takes input and produces output

but you would then have to believe the mind is not understandable (if the mind is irregular / random, we cannot shorten/compress it or understand it in a systematic way)

Turing’s test of machine intelligence

3 rooms

in room 3 there is a tester who sits at an input-output device and asks questions through terminal and receives answers

2 other rooms- one with human; one is computing device

if tester can’t tell which is human and which is computer, machine is as intelligent as human

Issues with Turing’s test of machine intelligence

it is dependent on the tester and how well they can decipher who is the human

is being able to fool a tester a good test of intelligence? it ignores other aspects of intelligence

tests performance (what the machine does), not competence (what machine is capable of doing)

it is a behaviourist test

smart programmers can anticipate questions and invent answers

What is the Chinese Room (Searle) procedure

Against a computational theory of mind

there is a room with an English monolingual in it

they have an ‘in’ slot: squiggles on paper come in

an ‘out’ slot: person can write squiggles out

blackboard where they write numbers

set of English instructions eg:

if X on blackboard + input: W -> output: draw Y -> replace X on blackboard with Z
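The instruction format above amounts to a lookup table, which can be sketched as follows. The names `RULE_BOOK` and `room`, and the second rule in the table, are hypothetical and only there to make the example run; the first rule is the one given in the card.

```python
# (symbol on blackboard, input squiggle) -> (squiggle to output, new blackboard symbol)
RULE_BOOK = {
    ("X", "W"): ("Y", "Z"),  # the rule from the card
    ("Z", "W"): ("Q", "X"),  # hypothetical second rule, for illustration only
}


def room(inputs, blackboard="X"):
    """Follow the rule book for each incoming squiggle and collect the outputs."""
    outputs = []
    for squiggle in inputs:
        out, blackboard = RULE_BOOK[(blackboard, squiggle)]
        outputs.append(out)
    return outputs


replies = room(["W", "W"])  # -> ["Y", "Q"]
```

Nothing in `room` refers to what any squiggle means; the procedure only matches shapes against the rule book.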

What is the Chinese Room (Searle) conclusions

seems like there’s a very smart Chinese person in the room -> but neither the person nor the room is intelligent, yet they still pass the Turing Test

this is a replication of a Turing machine, saying that neither computers nor the person in the Chinese room have human intelligence

what is an issue with the Chinese room

Searle’s definition of intelligence is to speak Mandarin… but the room does- therefore room is technically intelligent

eg it is like saying read-write head doesn’t do computation, when actually it is the whole machine that does this computation

there is no operational definition of consciousness - we can only assume smth is conscious - it has no observable consequences so can’t be observed (bit confusing)

different types of models in psych

math models (Rescorla-Wagner), models of personality (computational)

process models- cog and behavioural neuroscience (Turing)

2 types of process models

1) symbolic

2) connectionist

what is a symbolic process model /representations

-represents knowledge as symbolic data structures

-basic or atomic elements

-rules for composing elements to make complex structures (eg languages)

-manipulate data structures with variablised rules

eg variables S, T, U, V; operators & and |; rules for propositions: (1) any variable is a legal proposition, (2) any two legal propositions can be combined by an operator -> infinitely many legal propositions
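The two composition rules in this example can be sketched as a small generator. The function name `propositions` and the `depth` cut-off are assumptions added for illustration; without a cut-off the set of legal propositions never stops growing.

```python
from itertools import product

VARIABLES = ["S", "T", "U", "V"]
OPERATORS = ["&", "|"]


def propositions(depth):
    """Return every legal proposition buildable with at most `depth` rounds of combining."""
    legal = list(VARIABLES)                    # rule 1: any variable is legal
    for _ in range(depth):
        combined = [f"({p} {op} {q})"          # rule 2: join two legal propositions
                    for p, q in product(legal, repeat=2)
                    for op in OPERATORS]
        legal = legal + [c for c in combined if c not in legal]
    return legal


one_round = propositions(1)  # 4 variables + 4 * 4 * 2 combinations = 36 propositions
```

Each extra round of combining feeds the previous results back into rule 2, which is why a handful of atomic elements yields an unlimited number of structures.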

what is a connectionist process model

represent knowledge as patterns of activation within a set of units (nodes) in a network

processing is carried out by passing activation between nodes

what are the symbolic models processes

there are symbolic operations on the data structures

there is an application of symbolic rules

eg if larger than (x,y) then can occlude (x,y)

what are the 3 production system components (this is a prototypical symbolic model)

* plus mathematical rules; these don’t have to be actually true, they just have to match cognition

1) a base set of known facts, represented as data structures within a data base (eg elephant is larger than housecat)

2) a set of inference rules (eg if x is larger than z, then larger(x, z))

3) an executive control system that matches data to rules and, when more than one rule matches, randomly chooses which one fires (check this lec 3, 4228)

what are the operations of a production system

current state= current contents of data base (eg known knowledge)

state space= the set of all possible states (all propositions that could exist by having its knowledge base interact with its rule set)

goal state= the state you want the data base to be in (what you want to know about the world)

state transition rules= moving from one state to another (your inference rules that fire and allow you to change your data base)

search= algorithm for travelling through state space, lets you find best path moving from current state to goal state
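These operations can be sketched as a tiny production system. This is a minimal sketch under stated assumptions: the facts about the mouse, the two rule functions and the use of breadth-first search are all chosen for illustration (breadth-first search is swapped in here as one simple way to find the shortest path; it is not necessarily the control strategy from the lecture).

```python
from collections import deque

# Current state: known facts, stored as tuples such as ("larger", x, y).
FACTS = {("larger", "elephant", "housecat"), ("larger", "housecat", "mouse")}


def rule_transitive(facts):
    """If larger(x, y) and larger(y, z), then larger(x, z)."""
    return {("larger", x, z)
            for (p1, x, y1) in facts if p1 == "larger"
            for (p2, y2, z) in facts if p2 == "larger" and y1 == y2}


def rule_occlude(facts):
    """If larger(x, y), then can_occlude(x, y)."""
    return {("can_occlude", x, y) for (p, x, y) in facts if p == "larger"}


RULES = [rule_transitive, rule_occlude]


def search(start, goal, rules):
    """Breadth-first search through state space; each state is a set of facts."""
    frontier = deque([(frozenset(start), [])])
    seen = {frozenset(start)}
    while frontier:
        state, path = frontier.popleft()
        if goal in state:                     # goal state reached
            return path
        for rule in rules:                    # state transition rules
            for fact in rule(state) - state:  # each new fact the rule could add
                nxt = state | {fact}
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [fact]))
    return None


path = search(FACTS, ("can_occlude", "elephant", "mouse"), RULES)
```

Here `path` lists the facts added on the way to the goal: first that the elephant is larger than the mouse, then that the elephant can occlude the mouse. Neither fact was in the data base to begin with.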

what are the advantages of symbolic models

computational power

you can define variablised and universally quantified rules, doesn’t actually matter if you have ever seen x y z before

what are the disadvantages of symbolic models

might be too rigid to capture human behaviour (we don’t always apply rules, fail to capture shades of meaning, not very automatic processing)

we don’t know how they’re learned ( we know new representations are built from existing ones, but where do these structures come from first?)

no graceful degradation with damage- if you take out one rule, the whole thing collapses

not immediately obvious how you would take a symbolic representation and assign it to the brain- how does our brain represent it?

what is the connectionist model?

models composed of networks of interconnected nodes

like neurons in your brain, simple processors

they mimic populations of neurons in your brain

representation: pattern of activation on nodes or neurons

what is an example of a connectionist model

encode “lion” -> feline, wild, carnivore

“octopus” -> cephalopod, carnivore

you teach the network that lions like antelope so there is a positive connection between units representing “lion” and “lion eats antelope”

how does processing work in the connectionist model

nodes pass activation over weighted connections

positive weights = excitatory connections
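Passing activation over weighted connections can be sketched as a weighted sum. This is a minimal sketch: the function name `propagate`, the output unit name and all the weight values are made up for illustration. It also assumes the usual convention that negative weights are inhibitory connections, which is not stated in the card above.

```python
def propagate(activation, weights):
    """Pass activation over weighted connections for one step.

    activation -- dict: sending unit -> activation level (0.0 to 1.0)
    weights    -- dict: (sender, receiver) -> connection weight
    Returns a dict of the net input arriving at each receiving unit.
    """
    net = {}
    for (sender, receiver), w in weights.items():
        net[receiver] = net.get(receiver, 0.0) + activation.get(sender, 0.0) * w
    return net


# Illustrative weights only; in a real network these would be learned.
weights = {
    ("feline", "eats antelope"): 0.6,       # positive weight = excitatory
    ("wild", "eats antelope"): 0.5,
    ("carnivore", "eats antelope"): 0.4,
    ("cephalopod", "eats antelope"): -0.9,  # negative weight = inhibitory (assumed)
}

# Each concept is a pattern of activation over feature units.
lion = {"feline": 1.0, "wild": 1.0, "carnivore": 1.0}
octopus = {"cephalopod": 1.0, "carnivore": 1.0}

lion_input = propagate(lion, weights)["eats antelope"]
octopus_input = propagate(octopus, weights)["eats antelope"]
```

With these weights the lion pattern sends positive net input to the "eats antelope" unit and the octopus pattern sends negative net input, even though both patterns share the carnivore unit.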

need to review!!!!

connectionist model

what are the 7 sins of memory

transience

absent-mindedness

blocking

misattribution

suggestibility

bias

persistence

transience

memories fade over time

absent-mindedness

forget what we are trying to remember

blocking

tip of the tongue, can’t retrieve it

misattribution

misremember the source of smth

suggestibility

can create false memories (eg using misleading questions)

bias

memories that are consistent with self image rather than facts

persistence

events from your life you wish you could forget

eg PTSD