Exam 3: Psychology of Learning


Flashcard deck for 2025 - 2026 Exam 3 in PSY:3040 at the University of Iowa


114 Terms

What is a common feature of habituation, sensitization, and Pavlovian conditioning?

The organism’s behavior does not influence when stimuli are presented

What does the occurrence of an event depend on in instrumental conditioning?

The organism’s behavior

How does instrumental conditioning work?

An individual must perform an action (instrumental behavior) to get a specific result (a reinforcer is necessary)

When does the response-reinforcer contingency hold?

When a reinforcer is delivered only if the instrumental behavior has been performed

How did Thorndike go beyond simply collecting anecdotes?

He built puzzle boxes in which an animal had to perform a specific behavior to escape and receive a reinforcer

How did some of Thorndike’s Puzzle Boxes work?

In one, cats had to make a single simple response (pulling a loop); in another, cats had to perform a sequence of responses to get out and reach the fish

What was the result of Thorndike’s Puzzle Box trials?

Cats generally got faster at getting out of the boxes

Why did cats get better at getting out of the boxes?

The wrong behaviors decreased and the right behaviors increased

What is operant conditioning?

A form of instrumental conditioning in which organisms must operate on the environment to get the reinforcer

How did Skinner’s box differ from Thorndike’s box?

In Skinner’s box the organism is free to respond at any time, whereas in Thorndike’s box responding was limited to discrete trials set up by the experimenter

What kind of tasks did Thorndike use for his experiments?

Discrete-trial tasks

What kind of tasks did Skinner use for his experiments?

Free-operant tasks

What are some key components of Skinner’s experiments?

His box minimized experimenter contact; typically, a rat pressed a lever for food; the rate of responding was the key measure of learning (Thorndike measured latency to respond)

How does newness affect instrumental conditioning?

It often involves performing familiar responses in new situations or in new ways

What is an example of instrumental learning in new locations?

Running in a T-maze: rats already know how to run, but they must learn where to run to get food

What is the key takeaway in instrumental conditioning in new situations?

It may require forming new responses from familiar components (to press a lever, a rat must do something like stand on its hind legs)

Why did rat mazes fall out of style in neuro studies?

Rats have an excellent sense of smell, so earlier rats can leave odor trails that help later ones

Can we shape responses that may have never occurred before?

Yes, because behavior is highly variable

Why is variability good?

Because it allows us to adapt and change toward better outcomes

What is shaping?

The process of reinforcing successive approximations of a desired behavior to gradually guide a person or animal toward a specific goal

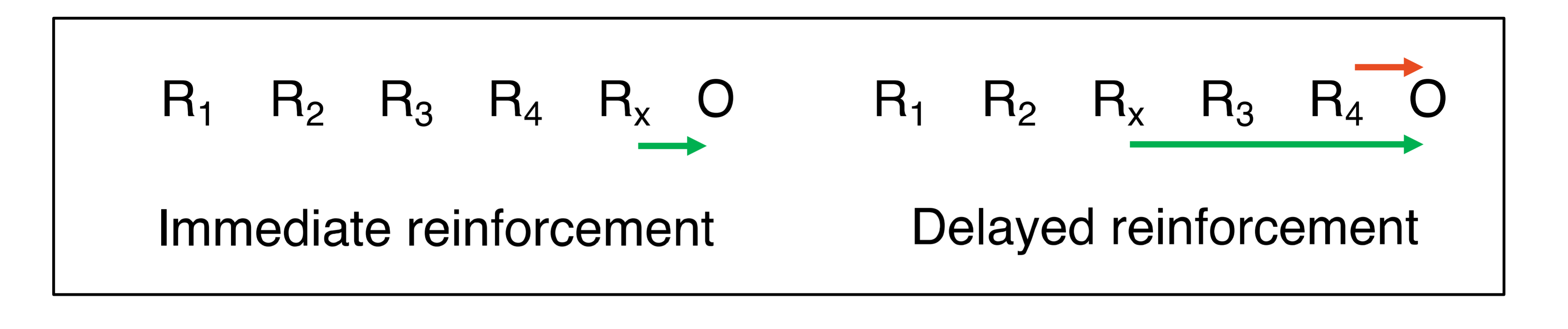

Response-reinforcer contiguity

The reinforcer must be delivered immediately after the target response

Why is it bad if reinforcement is delayed?

Other behaviors can occur during the delay and be reinforced instead of the target response

What is a stimulus associated with a primary reinforcer?

A secondary (conditioned) reinforcer

How can the immediate delivery of a conditioned reinforcer affect delayed reinforcement?

It can overcome the effect of a delay in delivering the primary reinforcer

What are conditioned reinforcers used for?

To bridge delays to primary reinforcers (clicker training)

What is Thorndike’s Law of Effect?

Behaviors followed by satisfying consequences are more likely to be repeated, while behaviors followed by unsatisfying consequences are less likely to occur again

What type of conditioning is instrumental conditioning?

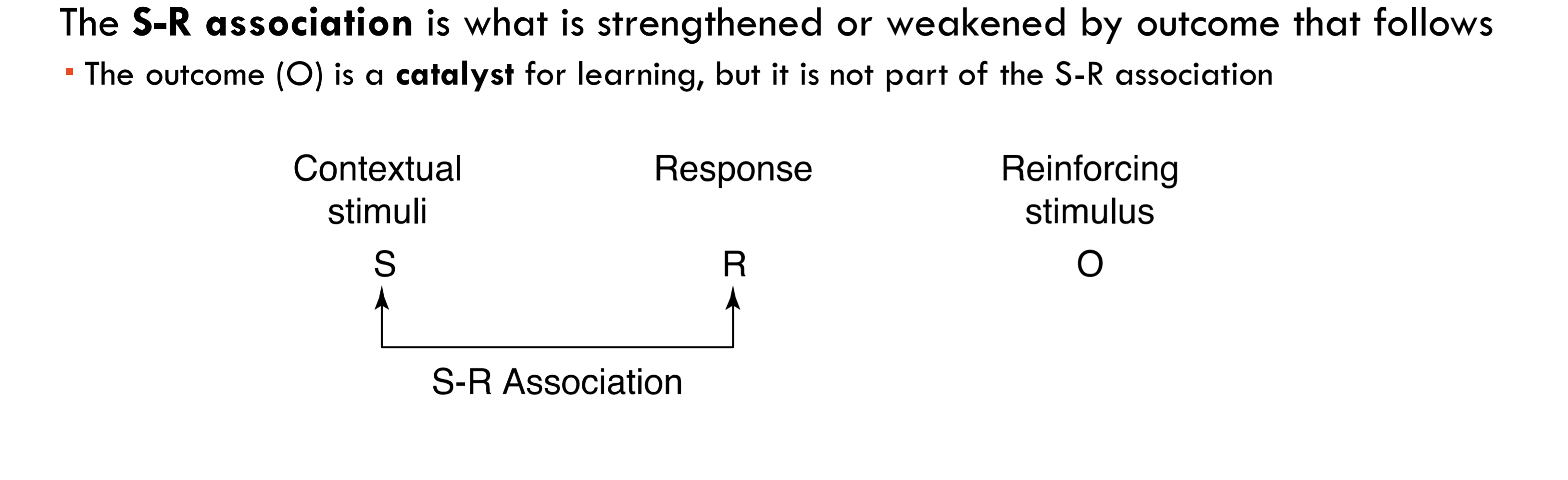

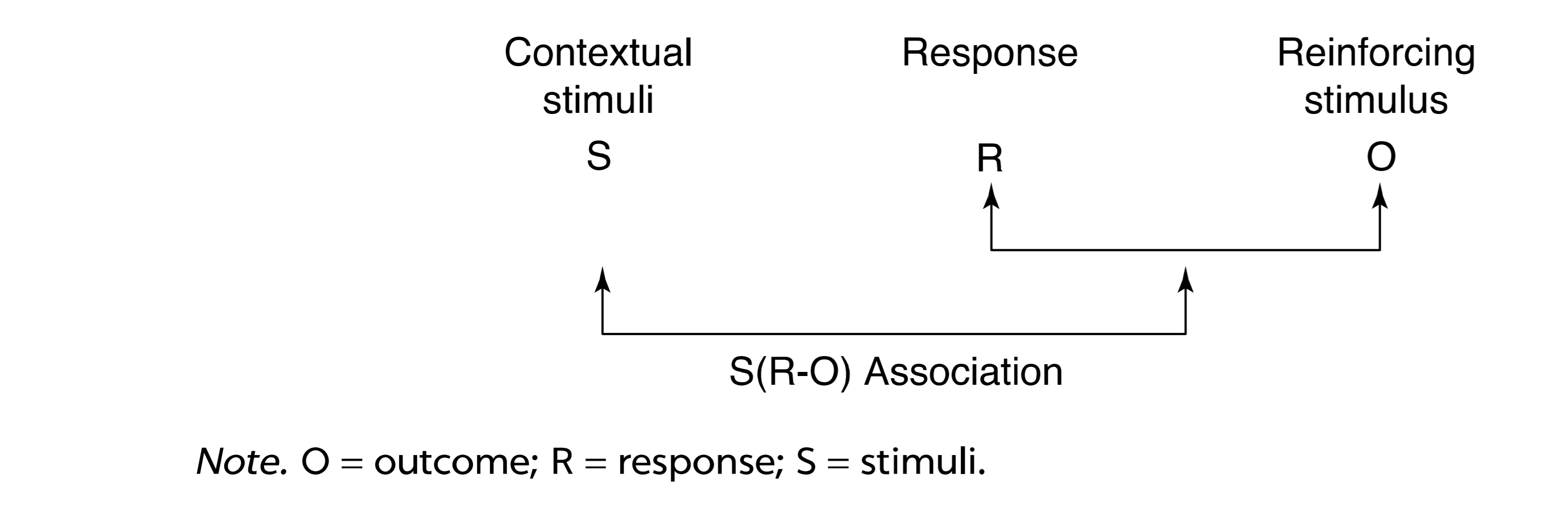

S-R learning, according to Thorndike (procedurally, Pavlovian conditioning is S-S, whereas instrumental conditioning is R-O rather than S-R)

What is Thorndike’s conceptual framework for instrumental conditioning?

S: STIMULI in environment

R: instrumental RESPONSE

O: reinforcer or OUTCOME after response

Why is instrumental learning S-R according to Thorndike?

The S-R bond is formed automatically and mechanically (a claim that is not necessarily true)

How do satisfiers affect Thorndike’s Law of Effect?

When a behavior is followed by a satisfying outcome, it is more likely to be repeated; when it is followed by an annoying outcome, it is less likely to be repeated

The S-R association is what is strengthened or weakened by the outcome that follows (what does the image look like?)

See image

What does the Law of Effect hold?

The response occurs simply because the stimulus occurs (automatic and unthinking; Thorndike did not assume any anticipation of the outcome)

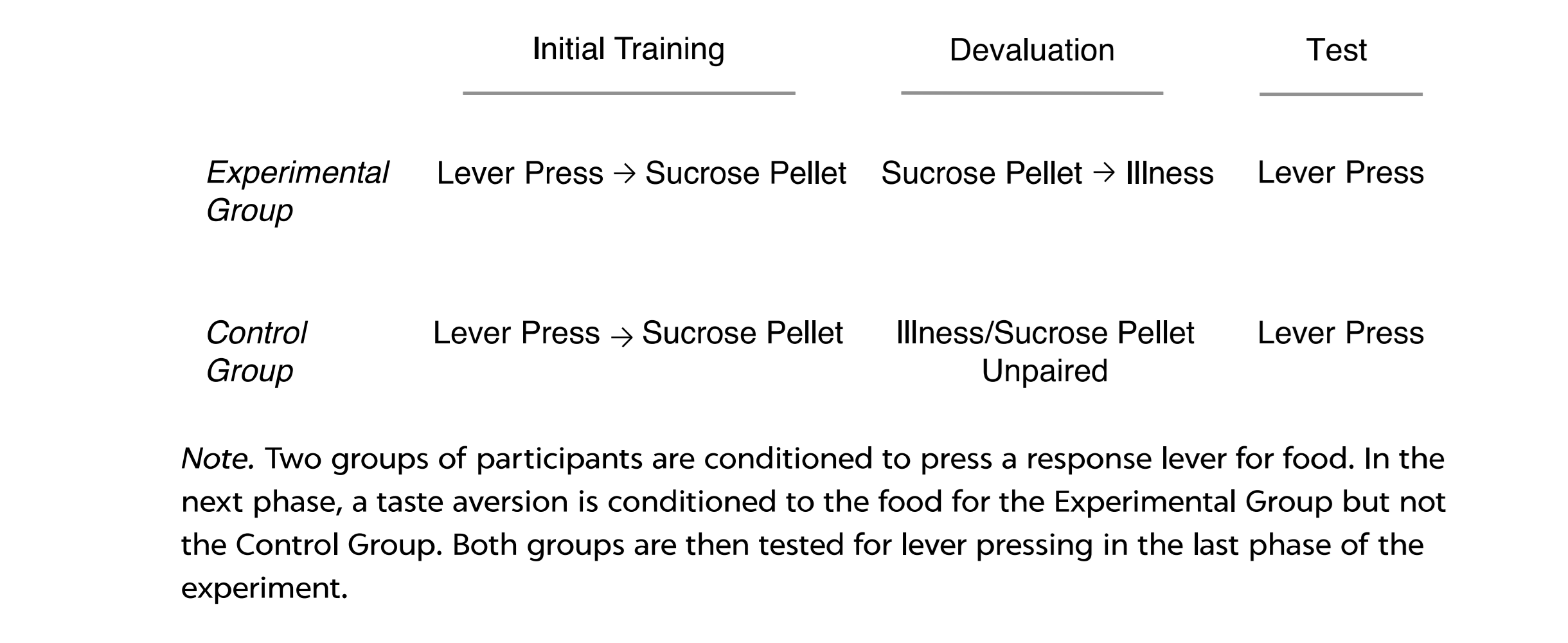

Because Thorndike says instrumental conditioning is not goal-directed, what kind of studies have been created?

A response is trained (forming an S-R association) and the reinforcer is later devalued (reinforcer devaluation); if learning were purely S-R, devaluation should not reduce responding

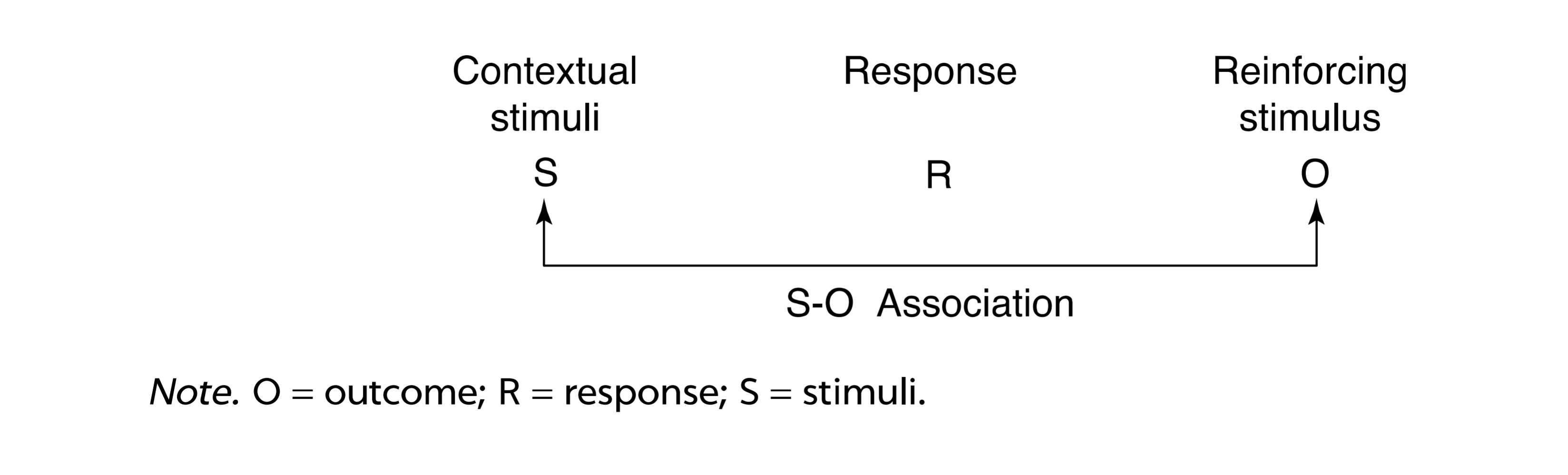

What does an S-O association look like?

What does a R-O association look like?

What is the implication for biological constraints on instrumental conditioning?

They occur when the response is incompatible with stimulus-elicited behaviors (pigs root the coins in the dirt rather than depositing them in the bank)

What are biological constraints on learning due to?

Seemingly Pavlovian S-O associations (misbehavior)

What are the implications for neural mechanisms of instrumental conditioning?

The mechanisms are more complex than in Pavlovian conditioning and it is difficult to isolate one type of association, so experiments must focus on one association while controlling for the others

What is a schedule of reinforcement?

A rule that specifies which occurrences of the operant response are reinforced

What are some things schedules of reinforcement determine?

Rate of responding, temporal pattern, how persistent response will be in extinction, and whether responding will be sensitive to devaluation

What is typically investigated using the free-operant method?

Rate and temporal pattern of responding

How long do free-operant conditioning experiments last?

About an hour or until rate and temporal pattern stabilize

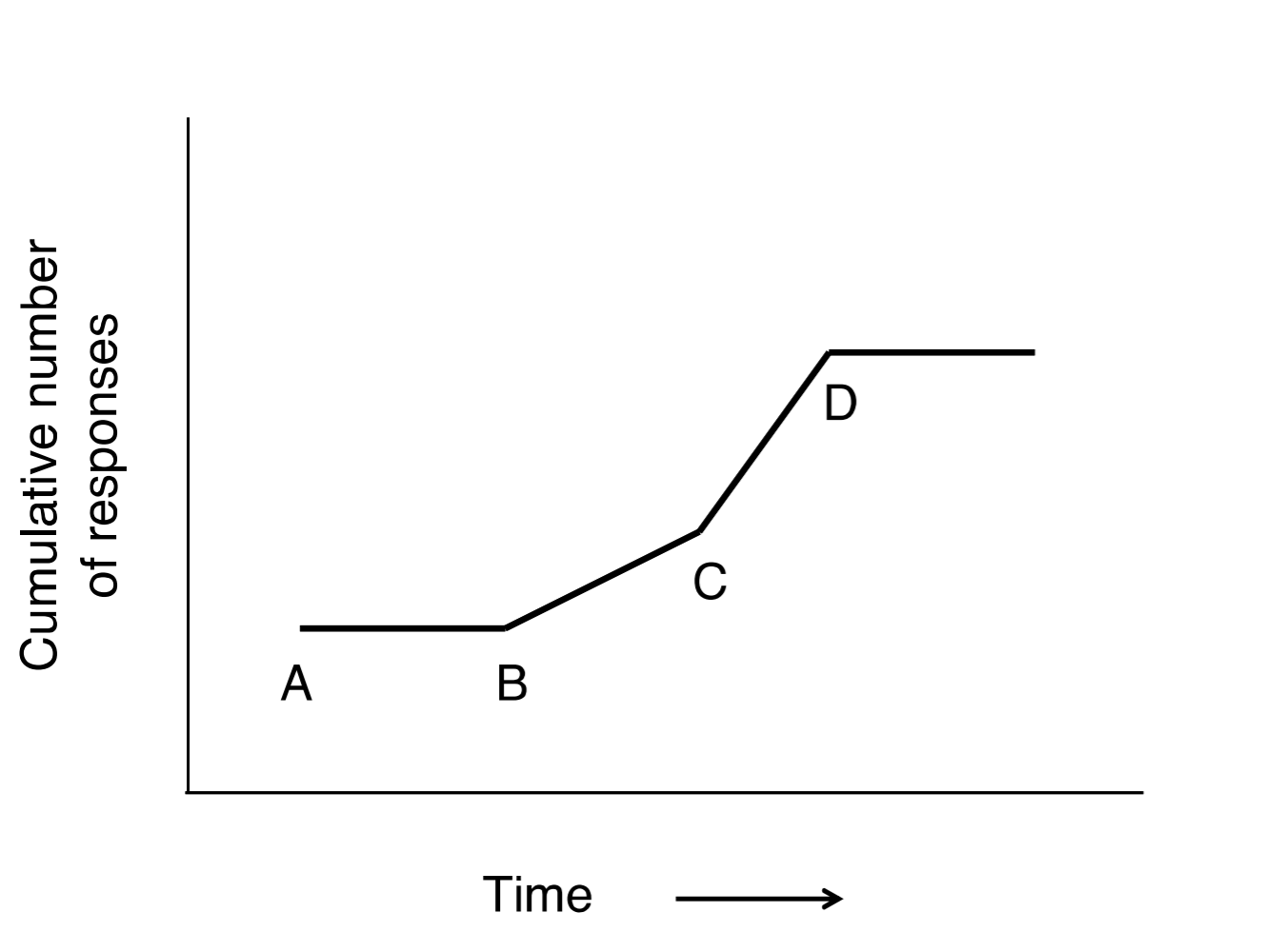

What kind of graph represents the temporal pattern of responding?

A cumulative record
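A cumulative record plots the running total of responses against time, so the curve can only rise or stay flat. Steep stretches mean fast responding and flat stretches mean pauses. The Python sketch below is a minimal illustration that assumes responses are logged as timestamps in seconds. The function name `cumulative_record`, the bin size, and the response times are hypothetical and are not course data.

```python
from bisect import bisect_right


def cumulative_record(response_times, session_length, bin_size):
    """Return (time, total responses so far) at the end of each time bin.

    Hypothetical helper for illustration: the running total never
    decreases, which is why a cumulative record only rises or stays flat.
    """
    times = sorted(response_times)
    n_bins = int(session_length // bin_size)
    record = []
    for k in range(1, n_bins + 1):
        t = k * bin_size
        # bisect_right counts how many responses happened at or before t
        record.append((t, bisect_right(times, t)))
    return record


# Hypothetical session: a burst of responses, a pause, then another burst
responses = [1, 2, 3, 4, 5, 21, 22, 23, 24, 25]
for t, total in cumulative_record(responses, session_length=30, bin_size=5):
    print(f"{t:>3} s | {'#' * total}")
```

The bars stop growing between 5 s and 20 s. That is the flat segment a pause produces on a real cumulative record.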

What does a cumulative record look like?

What defines a simple schedule?

Reinforcement depends on a single factor: the number of responses or the time that has passed since the last reinforcer

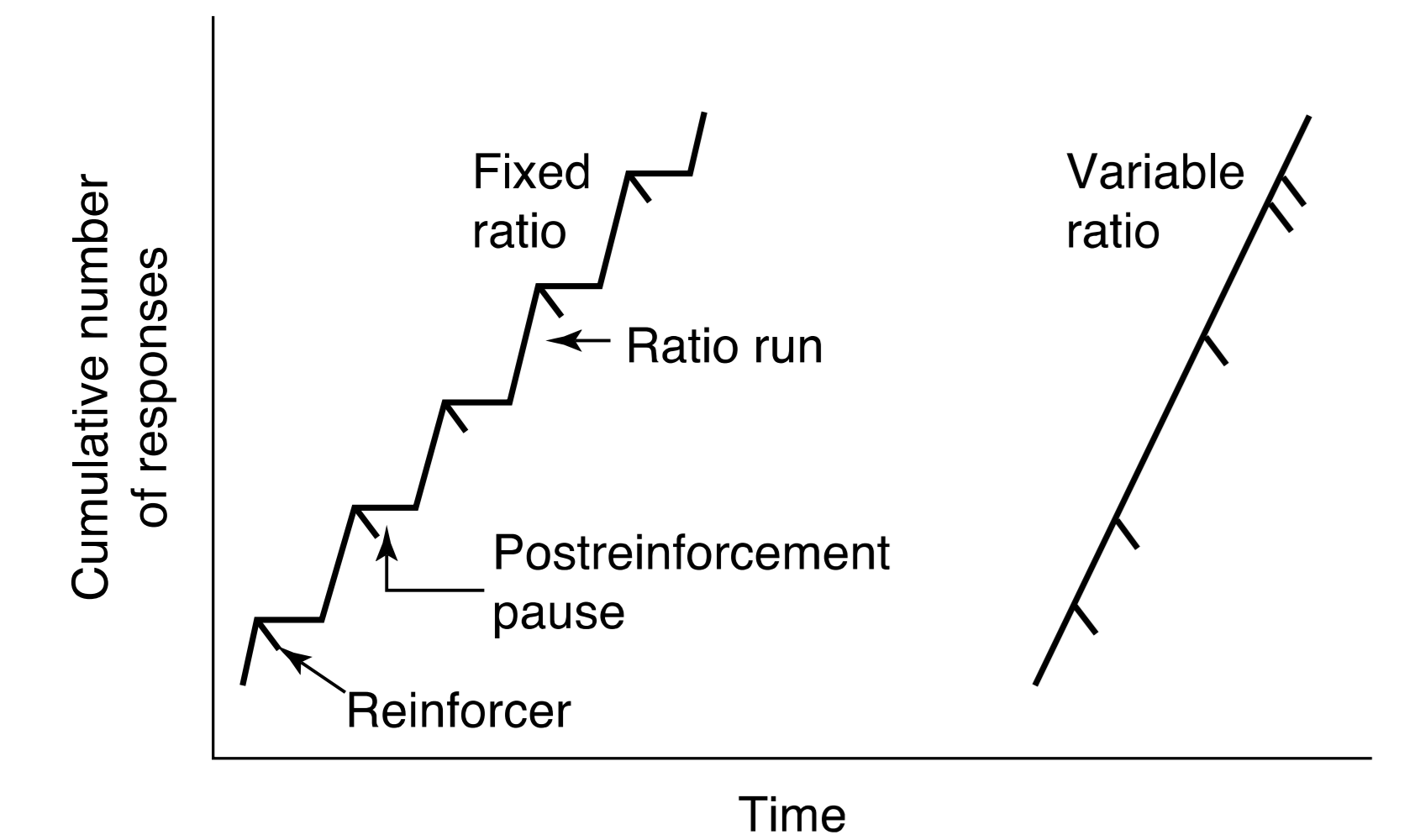

How does a ratio schedule work?

It requires some number of responses to get a reinforcer

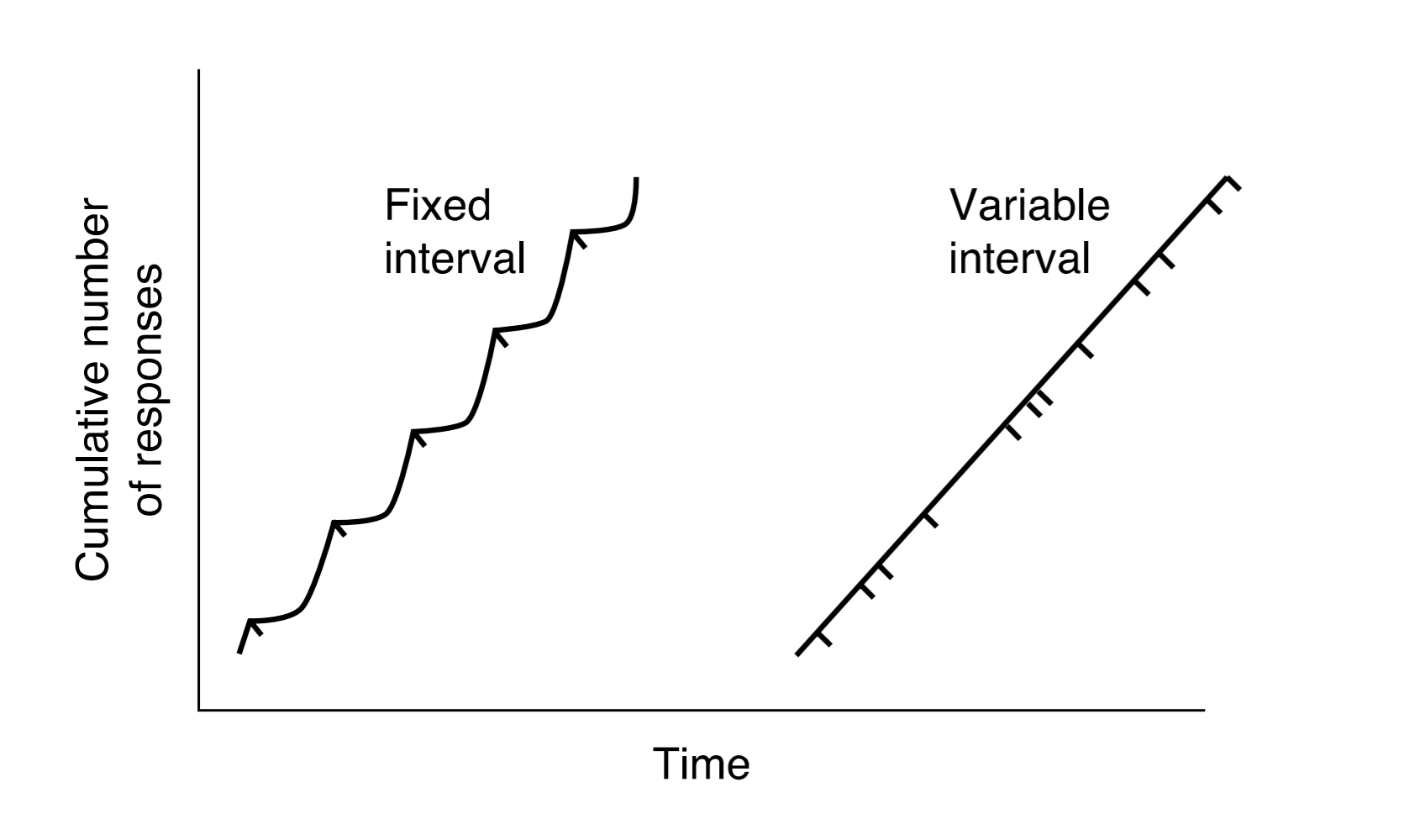

How does an interval schedule work?

It requires a certain amount of time to pass before a response can produce a reinforcer

What defines a fixed-ratio schedule?

You must respond a fixed number of times to get a reinforcer (responding faster produces the reinforcer sooner)

What defines a variable-ratio schedule?

You must respond a varying number of times, averaging a set ratio, to get a reinforcer
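The two ratio rules can be written as small response counters. This is a minimal Python sketch, not a lab implementation. `fixed_ratio` and `variable_ratio` are hypothetical names. Drawing each VR requirement uniformly from 1 to `2 * mean - 1` is an assumption, chosen only so that the requirements average the stated ratio.

```python
import random


def fixed_ratio(n):
    """FR n: every nth response is reinforced."""
    count = 0

    def respond():
        nonlocal count
        count += 1
        if count == n:
            count = 0  # start counting toward the next reinforcer
            return True
        return False

    return respond


def variable_ratio(mean, rng):
    """VR mean: the response requirement changes after every reinforcer.

    Assumption: each requirement is drawn uniformly from 1..(2 * mean - 1),
    so requirements average `mean` but any single one is unpredictable.
    """
    requirement = rng.randint(1, 2 * mean - 1)
    count = 0

    def respond():
        nonlocal count, requirement
        count += 1
        if count >= requirement:
            count = 0
            requirement = rng.randint(1, 2 * mean - 1)
            return True
        return False

    return respond


fr5 = fixed_ratio(5)
print([fr5() for _ in range(10)])  # reinforcer on responses 5 and 10

vr5 = variable_ratio(5, random.Random(0))
print(sum(vr5() for _ in range(10_000)))  # roughly 10_000 / 5 reinforcers
```

On FR 5 the reinforcer always arrives after the same number of responses. On VR 5 the next reinforcer could come after as few as one response.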

How do you abbreviate “Fixed Ratio Schedule?”

FR followed by the number of responses required (e.g., FR 5)

What is the postreinforcement pause?

Organisms typically pause after each reinforcer (the higher the ratio, the longer the pause)

What is a ratio run?

A steady rate of responding that occurs after the postreinforcement pause and lasts until the next reinforcer

What are some examples of variable ratio schedules?

Slot machines: a player pulls the lever an unpredictable number of times before a jackpot

How do you abbreviate “Variable Ratio Schedule?”

VR followed by the mean number of responses required (e.g., VR 38)

What do VR schedules produce?

High rates of responding without notable pauses (these schedules resemble many real-world situations)

How do we graphically compare FR and VR?

What defines a fixed-interval schedule?

A set amount of time has to pass before a response can earn a reinforcer

What defines a variable-interval schedule?

A varying amount of time, with a set average, has to pass before a response can earn a reinforcer

On interval schedules, why are reinforcers not delivered automatically?

A response still has to occur; the interval only determines when the reinforcer becomes available

What is set-up time?

The time it takes the animal to make a response after the reinforcer becomes available (the reinforcer stays available this whole time)
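Interval schedules can be sketched the same way, except that the schedule tracks time rather than responses. In this minimal Python sketch, the reinforcer becomes available once the interval has elapsed since the last reinforcer. It then waits for the next response, matching the idea that a response still has to occur. The `IntervalSchedule` class and helper names are hypothetical, and drawing VI intervals from an exponential distribution is an assumption.

```python
import random


class IntervalSchedule:
    """FI/VI sketch: once the interval has elapsed since the last reinforcer,
    the reinforcer is set up and stays available until the next response."""

    def __init__(self, next_interval):
        # next_interval: zero-argument function returning the next interval length
        self.next_interval = next_interval
        self.available_at = next_interval()

    def respond(self, t):
        """Handle a response at time t (seconds since the session began)."""
        if t >= self.available_at:
            # Reinforcer delivered; timing of the next interval starts now
            self.available_at = t + self.next_interval()
            return True
        return False


def fixed_interval(seconds):
    return IntervalSchedule(lambda: seconds)


def variable_interval(mean_seconds, rng):
    # Assumption: intervals drawn from an exponential distribution with this mean
    return IntervalSchedule(lambda: rng.expovariate(1 / mean_seconds))


fi60 = fixed_interval(60)  # an FI 1-min schedule, timed in seconds
print([fi60.respond(t) for t in [10, 30, 59, 75, 200, 201]])
# only the responses at 75 s and 200 s are reinforced
```

The response at 200 s is reinforced even though the reinforcer was set up at 135 s, because it stayed available until the animal responded.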

How do we abbreviate Fixed Interval Schedules?

FI followed by the required interval (e.g., FI 4-min)

What does efficient responding require an organism to have?

The ability to perceive time

How to abbreviate Variable Interval Schedule?

VI followed by the mean interval (e.g., a VI 2-min schedule)

Which schedule produces the greatest resistance to extinction?

VI

What is a limited hold?

A restriction on FI and VI schedules in which the reinforcer, once available, stays available only for a limited period (this increases the rate of responding)

How can we graphically represent FI and VI?

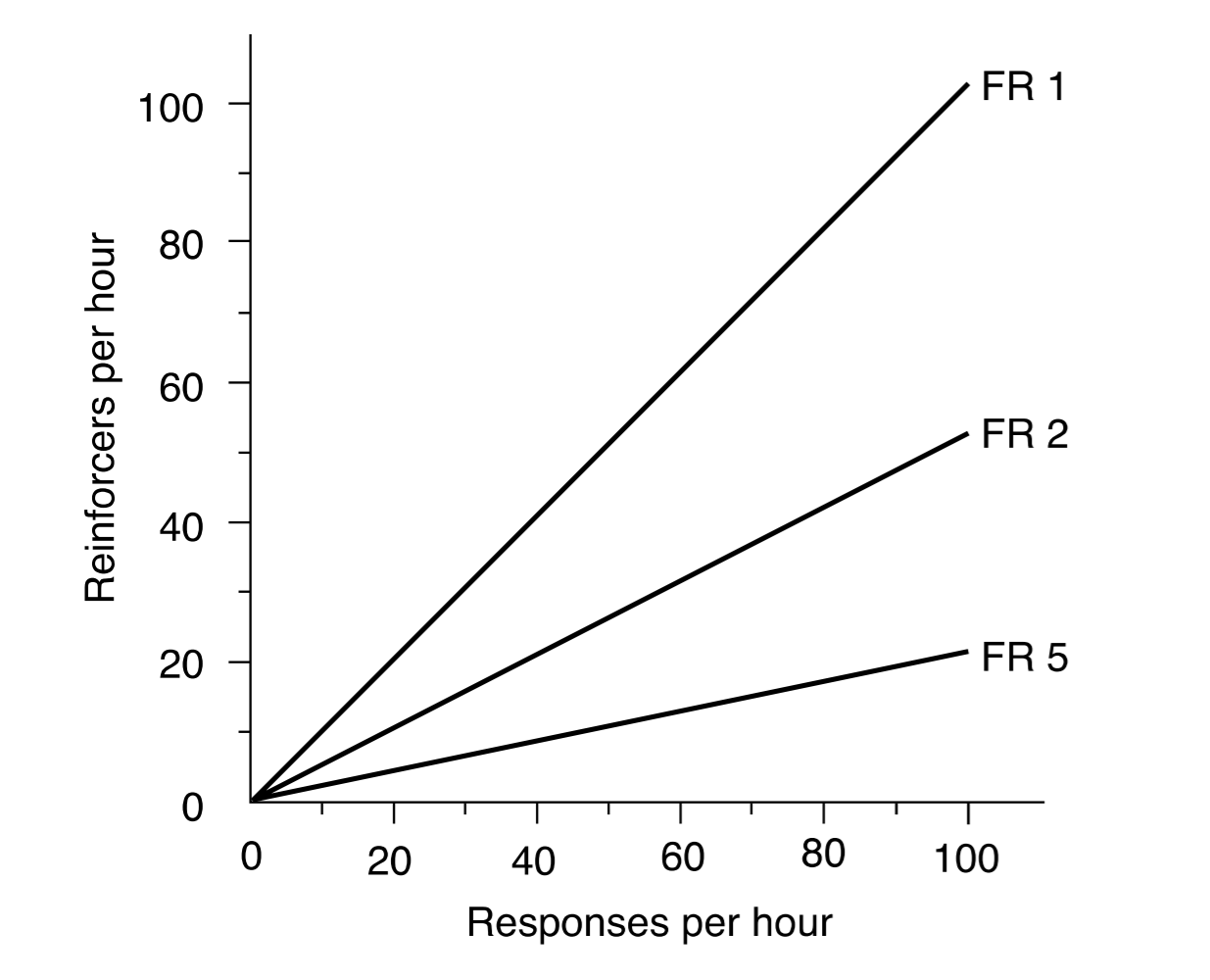

What is feedback function?

The relationship between rate of response and rate of reinforcement (best way to understand ratio schedules)
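A feedback function can be written as a formula that takes a response rate and returns the reinforcement rate it earns. The Python sketch below is illustrative only, and the function names are hypothetical. For a ratio schedule, the reinforcement rate is simply responses divided by the ratio. The VI version rests on simplifying assumptions: exponentially distributed intervals and randomly timed responses. Under those assumptions, the average time between reinforcers is the mean interval plus the expected wait for the next response.

```python
def ratio_feedback(responses_per_min, ratio):
    """Ratio schedule: reinforcers/min grow in direct proportion to responses/min."""
    return responses_per_min / ratio


def vi_feedback(responses_per_min, mean_interval_min):
    """VI schedule under simplifying assumptions (exponential intervals,
    randomly timed responses): mean time between reinforcers is the mean
    interval plus the average wait for the next response."""
    if responses_per_min <= 0:
        return 0.0
    return 1 / (mean_interval_min + 1 / responses_per_min)


print("resp/min  ratio 5  VI 1-min")
for rate in (5, 10, 30, 60):
    print(f"{rate:>8}  {ratio_feedback(rate, 5):>7.1f}  {vi_feedback(rate, 1):>8.2f}")
```

Doubling the response rate doubles the payoff on the ratio schedule. On the VI schedule, the payoff levels off just below one reinforcer per minute, however fast the animal responds.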

How can we graphically represent feedback function?

What is IRT?

Interresponse time: the time between successive responses

Ratio schedules reinforce what?

Short IRTs and high rates of responding
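IRTs and response rate are two views of the same data: the shorter the average IRT, the higher the rate. The minimal Python sketch below assumes response times are logged in seconds. `interresponse_times` and `response_rate` are hypothetical helper names.

```python
def interresponse_times(response_times):
    """IRTs: the gaps between successive responses."""
    times = sorted(response_times)
    return [later - earlier for earlier, later in zip(times, times[1:])]


def response_rate(response_times):
    """Responses per second, estimated as 1 / (mean IRT)."""
    irts = interresponse_times(response_times)
    if not irts:
        raise ValueError("need at least two responses")
    total = sum(irts)
    if total == 0:
        raise ValueError("all responses occurred at the same instant")
    return len(irts) / total


session = [0.0, 0.5, 1.0, 3.0, 3.5]  # hypothetical response times in seconds
print(interresponse_times(session))  # [0.5, 0.5, 2.0, 0.5]
print(response_rate(session))
```

Under a ratio schedule, strings of short IRTs like the 0.5 s gaps here bring the reinforcer sooner. That is why those schedules favor high response rates.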

Why can ratio schedules be stressful for the organism?

Because responding faster = greater payoff

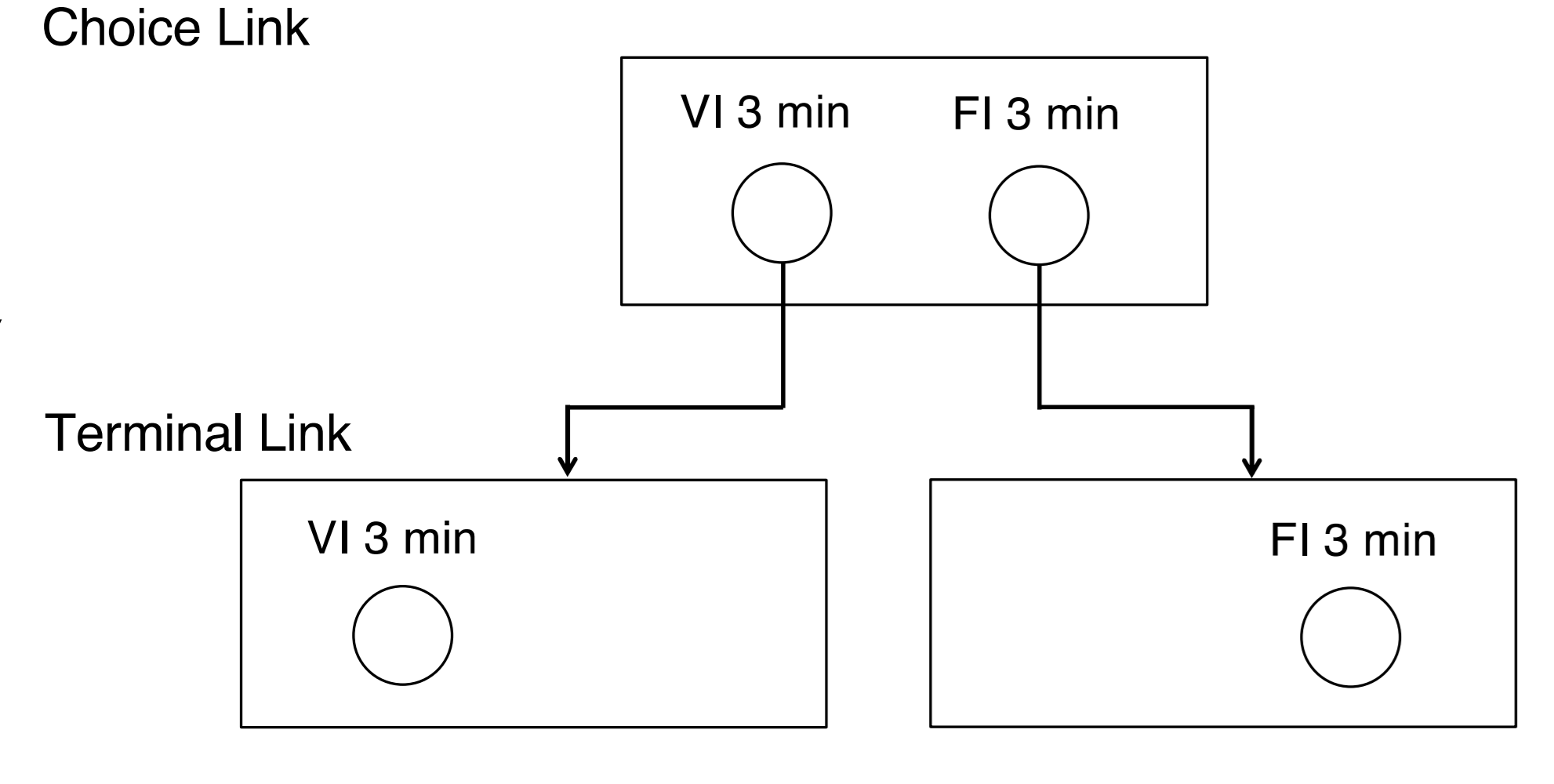

What is a concurrent schedule?

An organism has at least 2 responses simultaneously available

What is required of the response options in concurrent schedules?

Each option has its own independent schedule (e.g., A on VI 5-min and B on VR 15)

What do concurrent schedules tell us about choice?

That organisms can switch freely between options A and B

What are important factors to consider in concurrent schedules?

Effort needed, time and effort of switching, reinforcement attractiveness, schedule of reinforcement for each response

If effort and reinforcer are identical for each response and switching is easy…

The only difference between the options is the schedule of reinforcement

What is Matching Law?

The relative rate of responding on A equals the relative rate of reinforcement obtained on A
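In symbols, write B for response rates and r for obtained reinforcement rates. The matching law then says B_A / (B_A + B_B) = r_A / (r_A + r_B). The short Python sketch below turns that equation into a prediction. The function name and the reinforcement counts are hypothetical.

```python
def matching_prediction(reinf_a, reinf_b):
    """Matching law: B_A / (B_A + B_B) = r_A / (r_A + r_B).

    Takes the reinforcement rates obtained on options A and B and returns
    the predicted proportion of responses made on A.
    """
    total = reinf_a + reinf_b
    if total <= 0:
        raise ValueError("no reinforcers obtained on either option")
    return reinf_a / total


# Hypothetical session: A yields 40 reinforcers/hour, B yields 20
share_a = matching_prediction(40, 20)
print(f"predicted share of responses on A: {share_a:.2f}")
print(f"of 900 responses, about {round(900 * share_a)} on A")
```

The prediction assumes the options differ only in their schedules, as when effort, reinforcer, and ease of switching are equal.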

What does a concurrent chain schedule look like?

Once one option is chosen, the other becomes unavailable

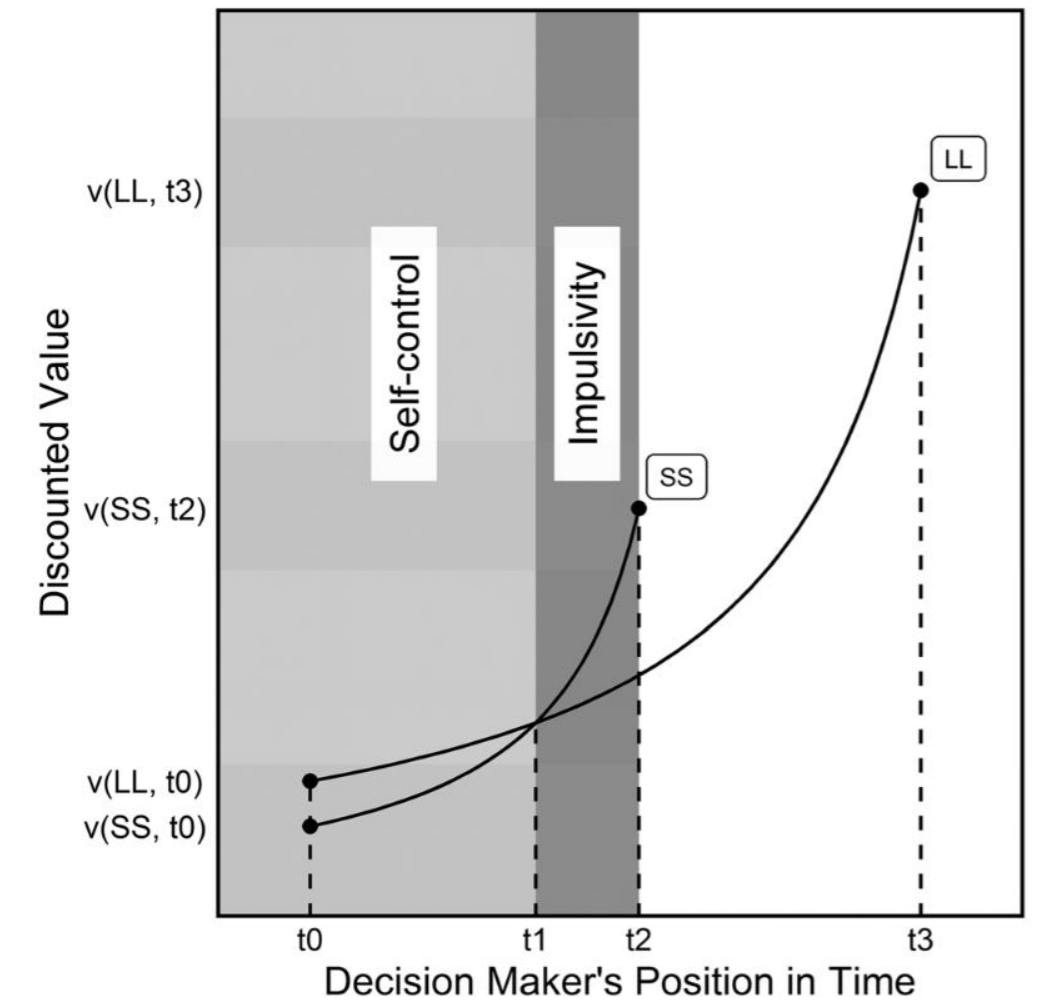

What is Self Control?

A choice in which one option offers a small reward sooner (SS) and the other offers a large reward later (LL); choosing LL shows self control

How do we represent self control graphically?

What is Delay Discounting Function?

The longer you have to wait for a reinforcer, the less valuable it becomes
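One common way to model delay discounting is a hyperbolic function, V = A / (1 + kD). Here A is the reward amount, D is the delay, and k sets how steeply value falls with delay. The card does not name a specific function, so treat the hyperbolic form, the function names, and the numbers below as assumptions. This minimal Python sketch also ties discounting back to the SS-versus-LL self-control choice.

```python
def discounted_value(amount, delay, k):
    """Assumed hyperbolic form: V = A / (1 + k * D)."""
    return amount / (1 + k * delay)


def choose(ss, ll, k):
    """ss and ll are (amount, delay) pairs; return the option with the higher
    discounted value (ties go to SS)."""
    v_ss = discounted_value(*ss, k)
    v_ll = discounted_value(*ll, k)
    return "LL" if v_ll > v_ss else "SS"


small_soon = (2, 0)    # hypothetical: 2 pellets now
large_late = (5, 10)   # hypothetical: 5 pellets after 10 s
print(choose(small_soon, large_late, k=0.5))   # SS (steep discounting)
print(choose(small_soon, large_late, k=0.05))  # LL (shallow discounting)
```

With the larger k, the delayed 5 pellets are worth less than the immediate 2 (about 0.83 vs. 2), so the steep discounter picks SS. The deck labels this pattern poor self control.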

How can we relate tracking to self control?

Sign tracking: poor self control

Goal tracking: good self control

What must a good theory have?

Consistency with research findings, the ability to stimulate new research that tests and sharpens the theory, and new insights and ways of thinking

What questions must a good theory answer?

What makes something a reinforcer, how can we predict whether something will be an effective reinforcer, how does this reinforcer produce its effects, and what mechanisms are responsible for increasing probability of a reinforced response

Thorndike proposed the first theory of reinforcement. What was this?

A positive reinforcer produces a satisfying state of affairs

What did Thorndike’s theory not explain?

What makes something effective as a reinforcer

What does Thorndike’s Law of Effect answer?

The second question, how a reinforcer produces its effects: a reinforcer strengthens an existing but improbable S-R association

What did Skinner focus on in his experiments?

Functional relationships (can be directly measured and observed)

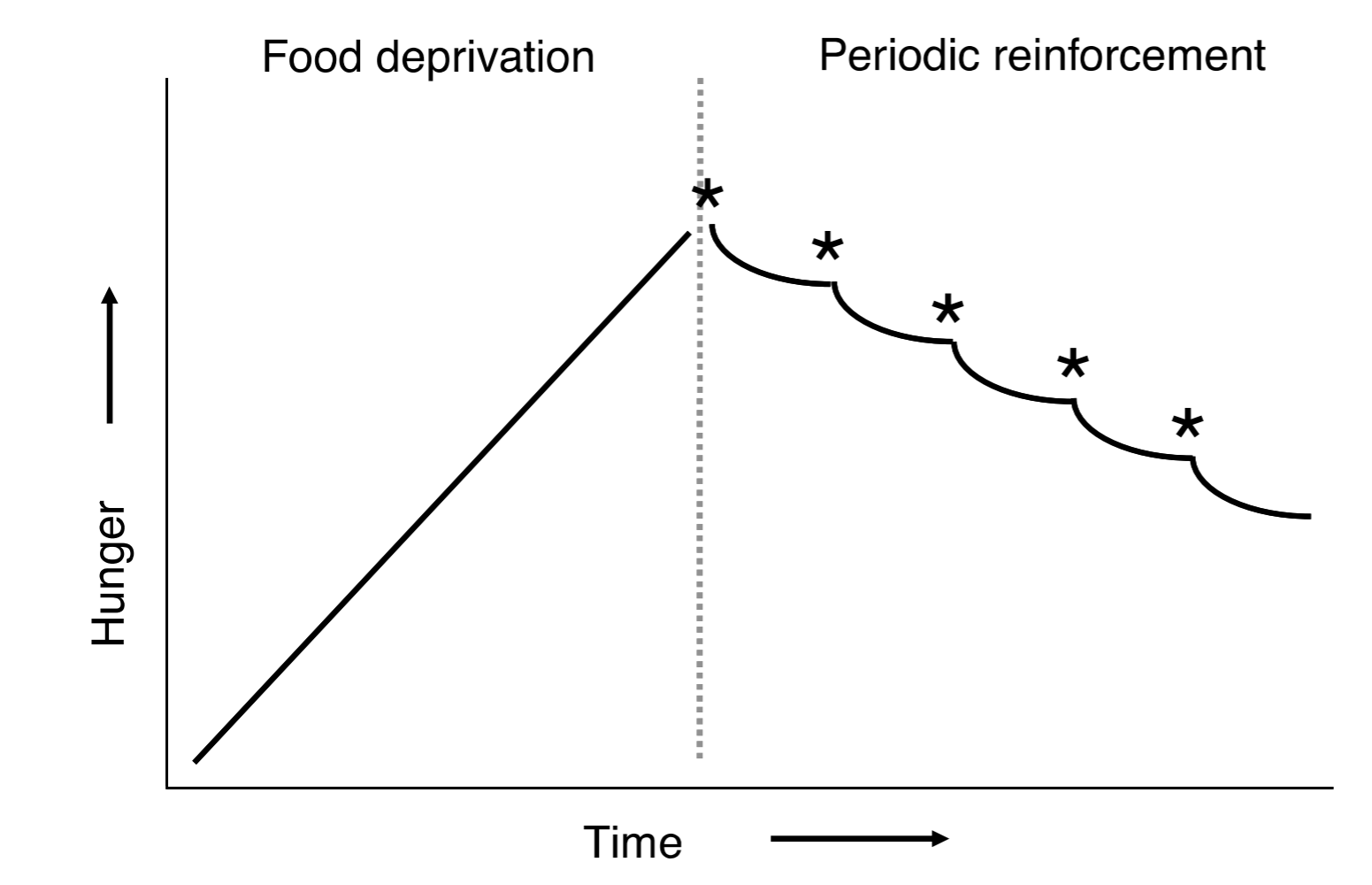

What is Hull’s Drive Reduction Theory?

Behavior is motivated by biological needs, which create an unpleasant state of tension called a "drive"

What is homeostasis?

A state in which the body is in balance with respect to needs such as food, water, and blood sugar

What is a drive-state?

A state caused by a homeostatic imbalance that motivates the organism to return to homeostasis (hunger leads to searching for food)

What is Drive Reduction Theory?

Something is effective as a reinforcer if it reduces current drive state (even if small and temporary)

How can we graphically represent Drive Reduction Theory?

What is a primary reinforcer?

A stimulus that reduces a biological drive without prior training

Do all stimuli that serve as effective reinforcers satisfy a biological need?

No; money is one example

What is a secondary or conditioned reinforcer?

A stimulus that has been associated with a primary reinforcer

What is a conditioned or acquired drive?

When a stimulus comes to elicit a drive state (feeling hungry again when dessert arrives, even after a large meal)

What can Clark Hull’s theory explain?

Aversively motivated behaviors and drug addiction

Can Hull’s theory explain all instances of reinforcement?

No; sensory reinforcement is a counterexample

What is sensory reinforcement?

Reinforcement by a stimulus unrelated to any biological need or drive (a bored prisoner with nothing to do who is given The Wall Street Journal reads it and even requests it)